Introduction

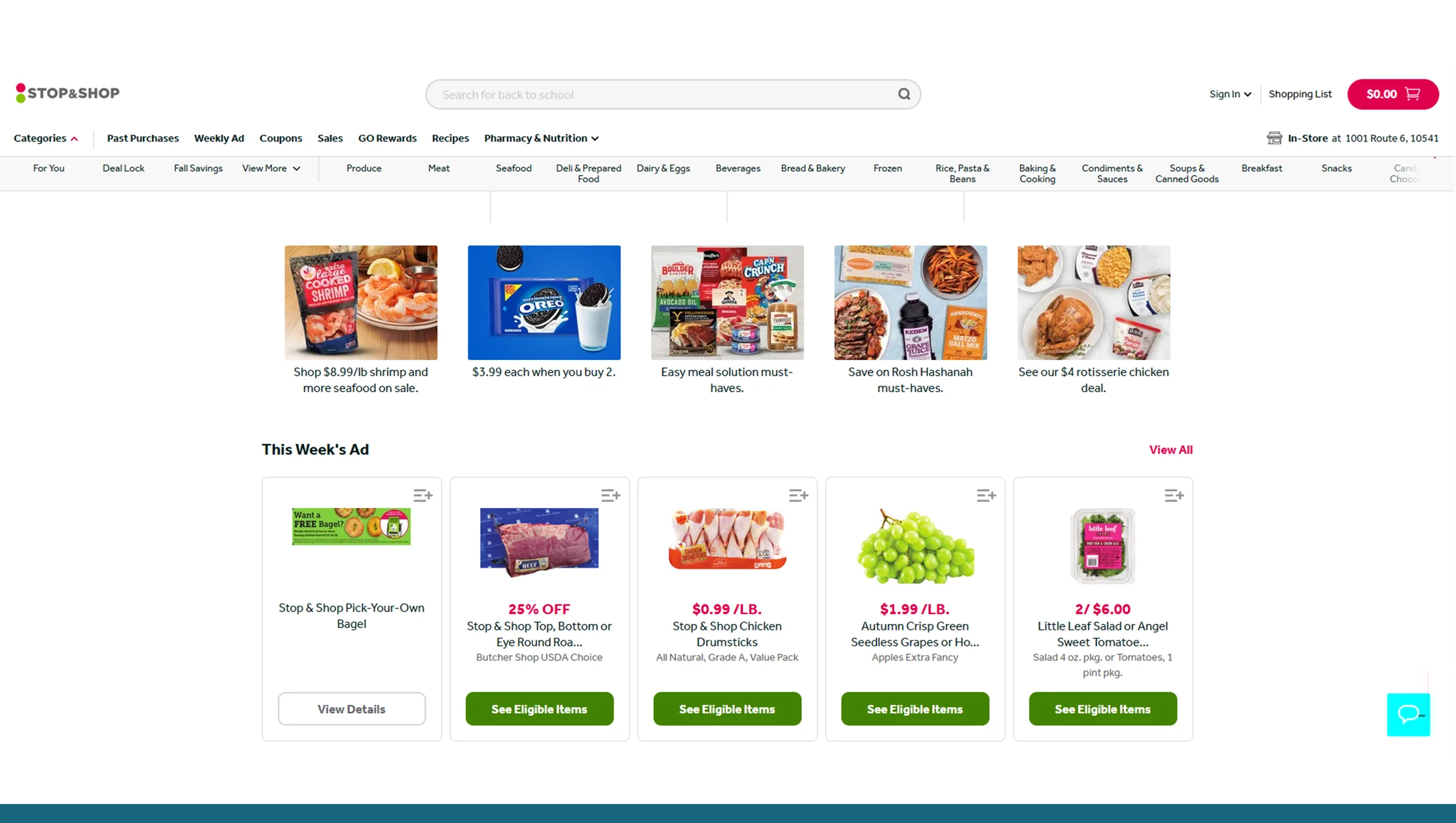

If you need accurate, structured grocery data from Stop & Shop (product titles, SKUs, prices, availability, images, promotions, categories, and more), this guide walks you through Stop & Shop APIs and scraping solutions: what exists, what to look for, what data you can get, legal and ethical considerations, recommended architecture, typical data schema, and practical examples and use cases. It also covers implementation patterns, limitations you are likely to face, and alternatives if you prefer a turnkey Grocery Data Scraping API solution.

Quick Overview of Stop & Shop API & Stop & Shop Scraping API

When people say “Stop & Shop API” they usually mean one of three things:

- An official public API published by Stop & Shop for partners or developers (rare for grocery chains).

- The private/undocumented APIs the Stop & Shop website and mobile apps call behind the scenes — endpoints visible in browser developer tools when you browse the site. These are not public but can be discovered in network traces.

- A third-party Scraping API or managed service that fetches Stop & Shop data for you, normalizes it, and returns it via a stable API interface.

If you’re building product comparison tools, price-monitoring dashboards, order orchestration, or market research, the third option (a scraping API) is the most realistic path when the retailer doesn’t offer an open, public API. Several companies offer Stop & Shop scraping APIs and there are community projects that attempt to interface with Stop & Shop internals.

Why Businesses Need Stop & Shop Grocery Scraping API Data

Stop & Shop is a major northeast U.S. grocery chain with a large online catalog and delivery/pickup services. Data from Stop & Shop is valuable for:

- Competitive pricing & repricing — keep product prices competitive in marketplaces and dynamic pricing engines.

- Market research & assortment analysis — understand product availability, brand presence, and category coverage regionally.

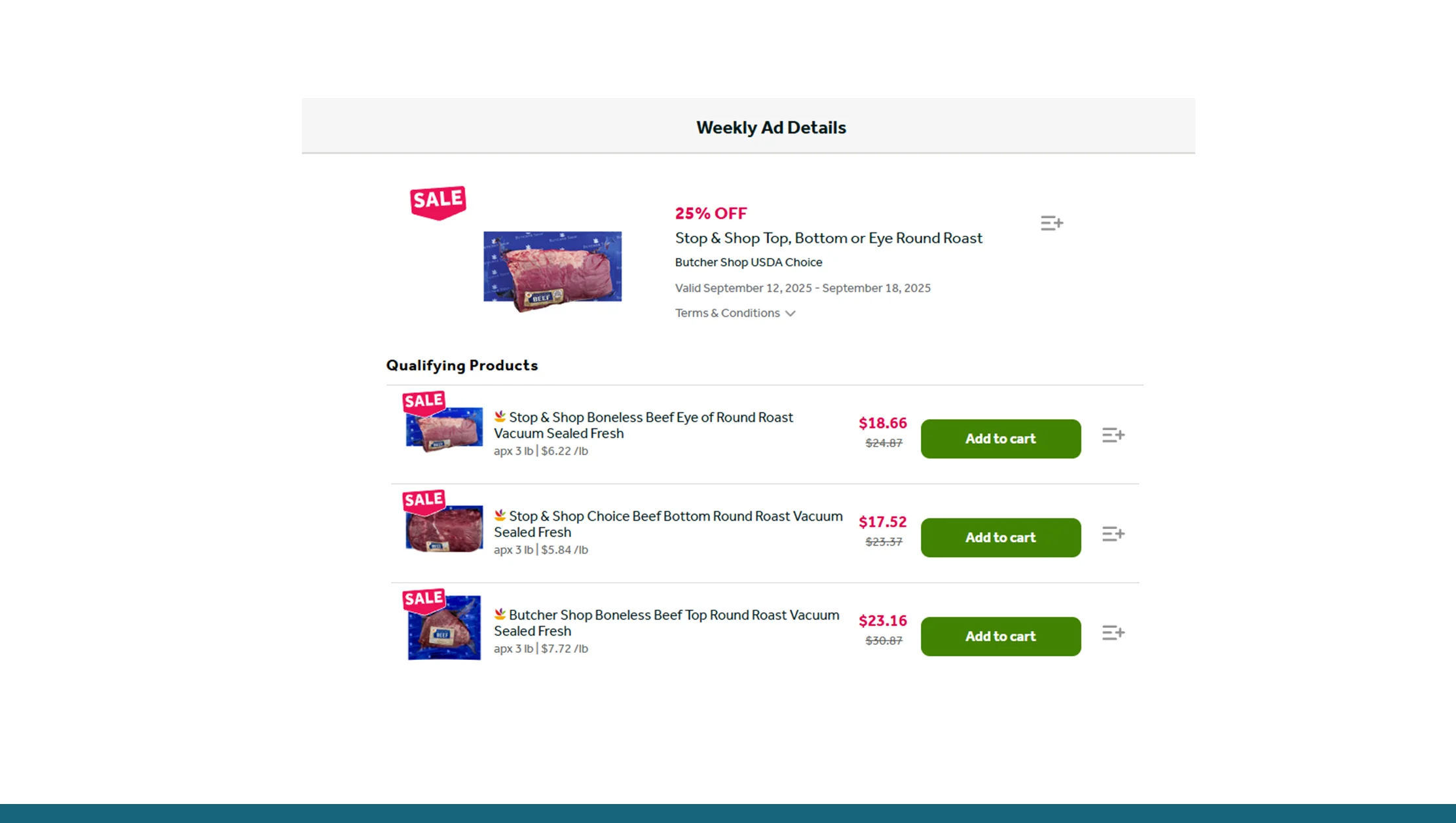

- Promotions monitoring — detect coupons, weekly ad prices, and special promotions.

- E-commerce catalog enrichment — capture images, descriptions, and ingredient lists.

- Inventory & stock tracking — monitor in-stock vs out-of-stock over time for popular SKUs.

- Ads & feed optimization — automatically update product feeds for Google Shopping, marketplaces, etc.

Because grocery data changes rapidly (fresh produce, weekly specials, local inventory), near real-time access or frequent polling is often required, and companies often turn to structured Grocery Datasets to get it.

Official Stop & Shop API vs. Stop & Shop Scraping API vs. Partner Feeds

Official / partner APIs

- Best option if available: stable, documented, and legally supported.

- Big chains sometimes offer partner or enterprise APIs for vendors, loyalty partners, or data partners — but these are gated.

Undocumented internal APIs

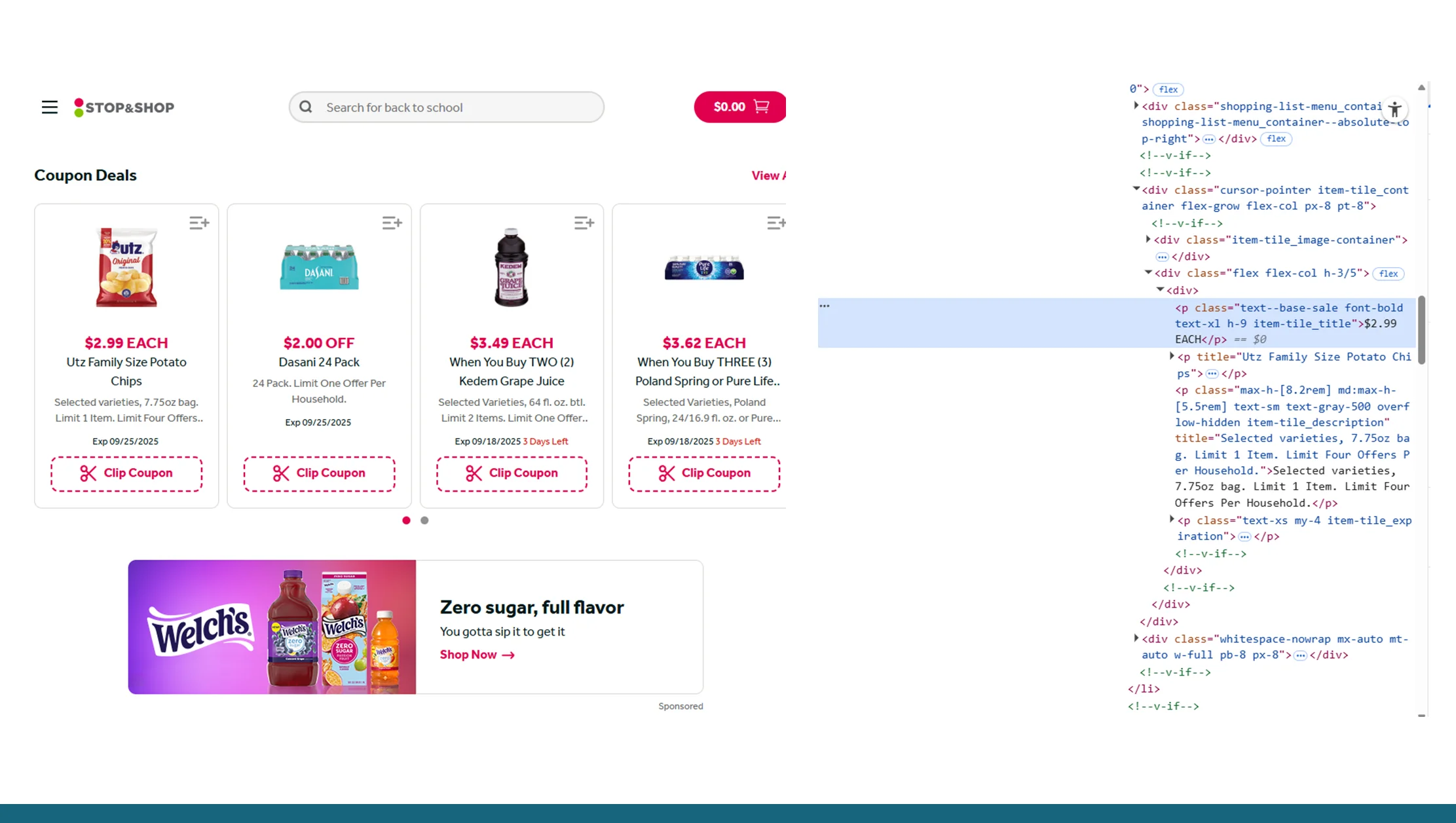

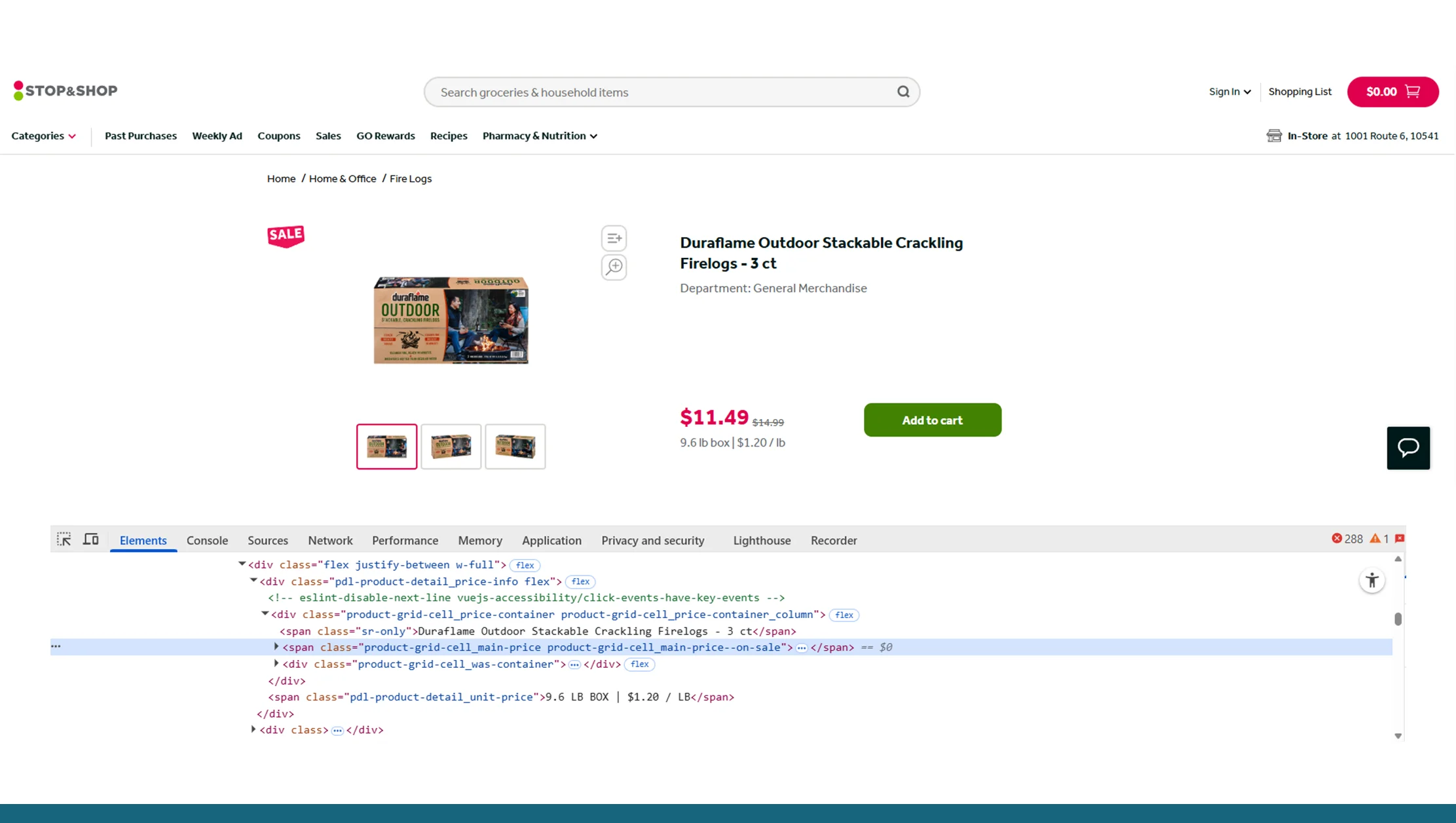

- Many modern e-commerce sites (including Stop & Shop) use client/server JSON endpoints to power search, product pages, and checkout. These endpoints can be discovered in the browser DevTools network tab.

- They are not publicly documented and are subject to change without notice.

Third-party scraping APIs / managed services

- These services handle discovery, rendering (if necessary), rate limiting, IP rotation, parsing, and normalization.

- They return structured JSON (product, price, stock, images) and often provide webhooks, CSV export, or dashboards. Examples of such services and pages advertising Stop & Shop scraping capabilities exist.

When an official API is not available, the scraping API route gives you fast time-to-data but brings operational, legal, and reliability considerations (covered later).

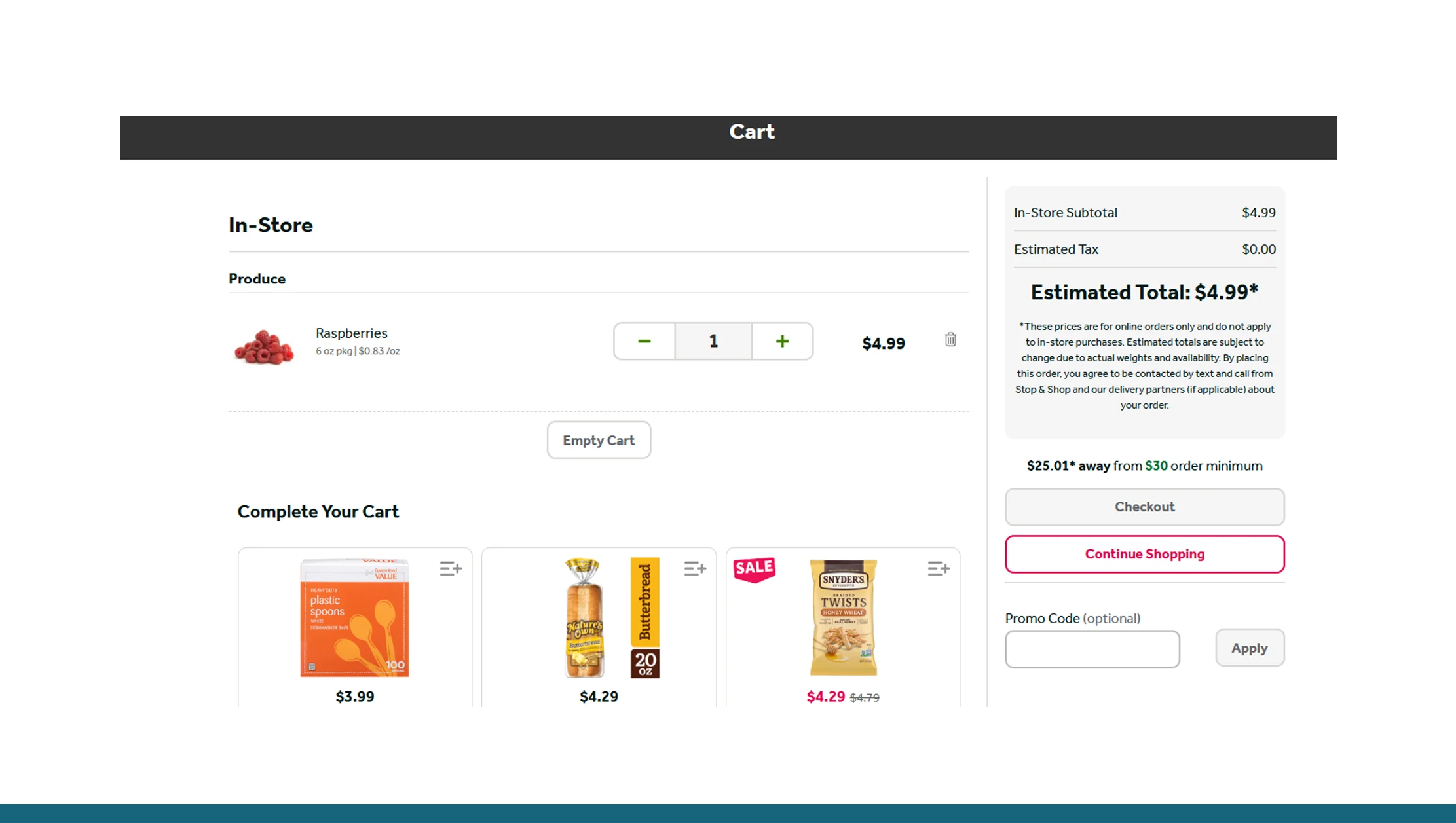

What Data You Can Scrape Using Stop & Shop API Data

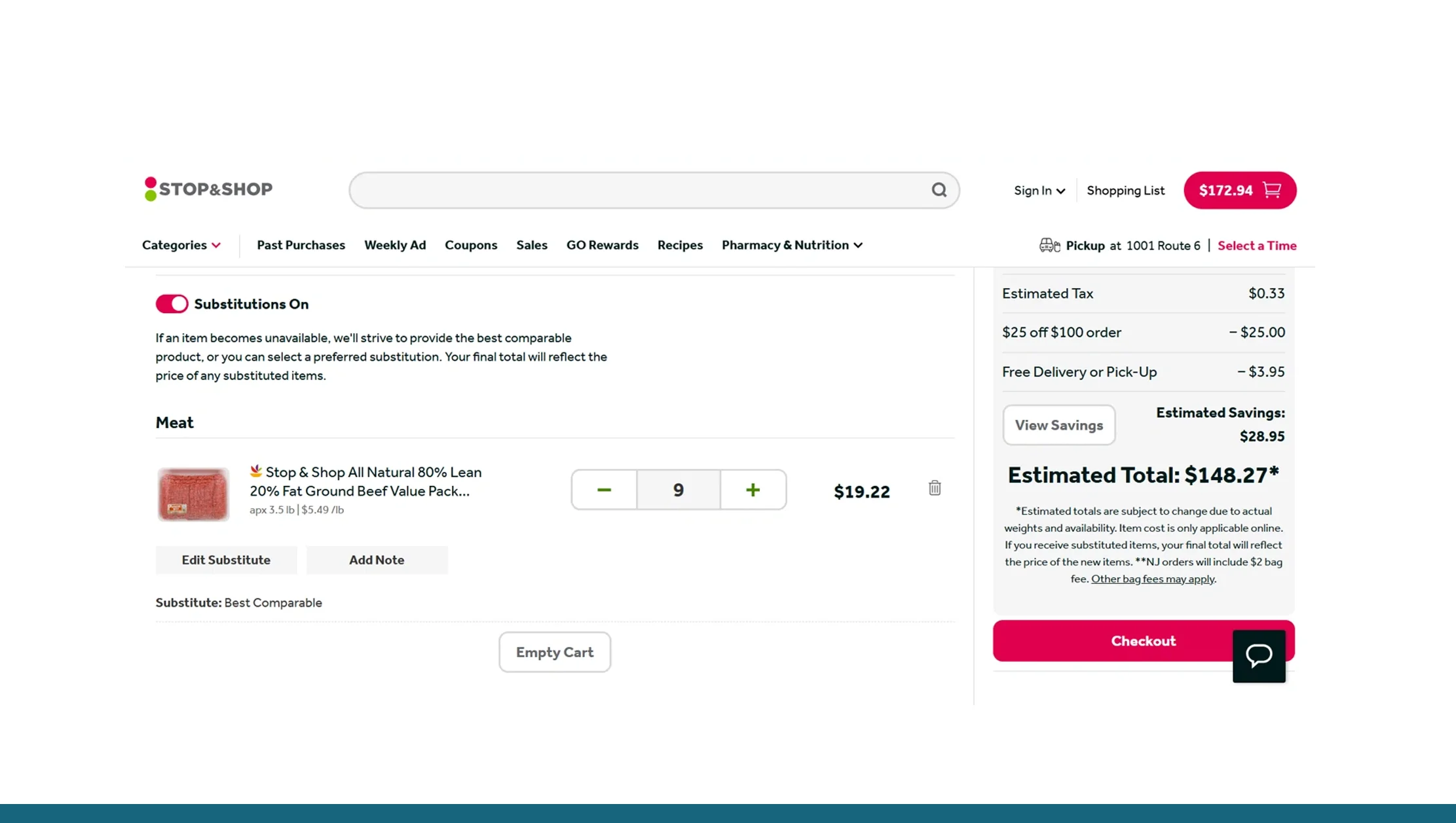

A mature Stop & Shop scraping API will deliver structured records for each item. Typical fields:

- store_id — Stop & Shop store identifier (if data is store-specific)

- product_id / sku — internal product identifier / SKU

- gtin / upc — barcode (when exposed)

- title — full product title

- brand — product brand

- category — category path (e.g., Grocery > Breakfast > Cereals)

- price — current price (display price)

- price_unit — per unit/case price (e.g., $/lb)

- promo_price — sale/discounted price if active

- original_price — list/reg price

- availability — in-stock / out-of-stock / available for pickup/delivery

- stock_level — approximate stock level (if available)

- unit_size — package size (e.g., 500g)

- images — array of image URLs (thumbnail, medium, large)

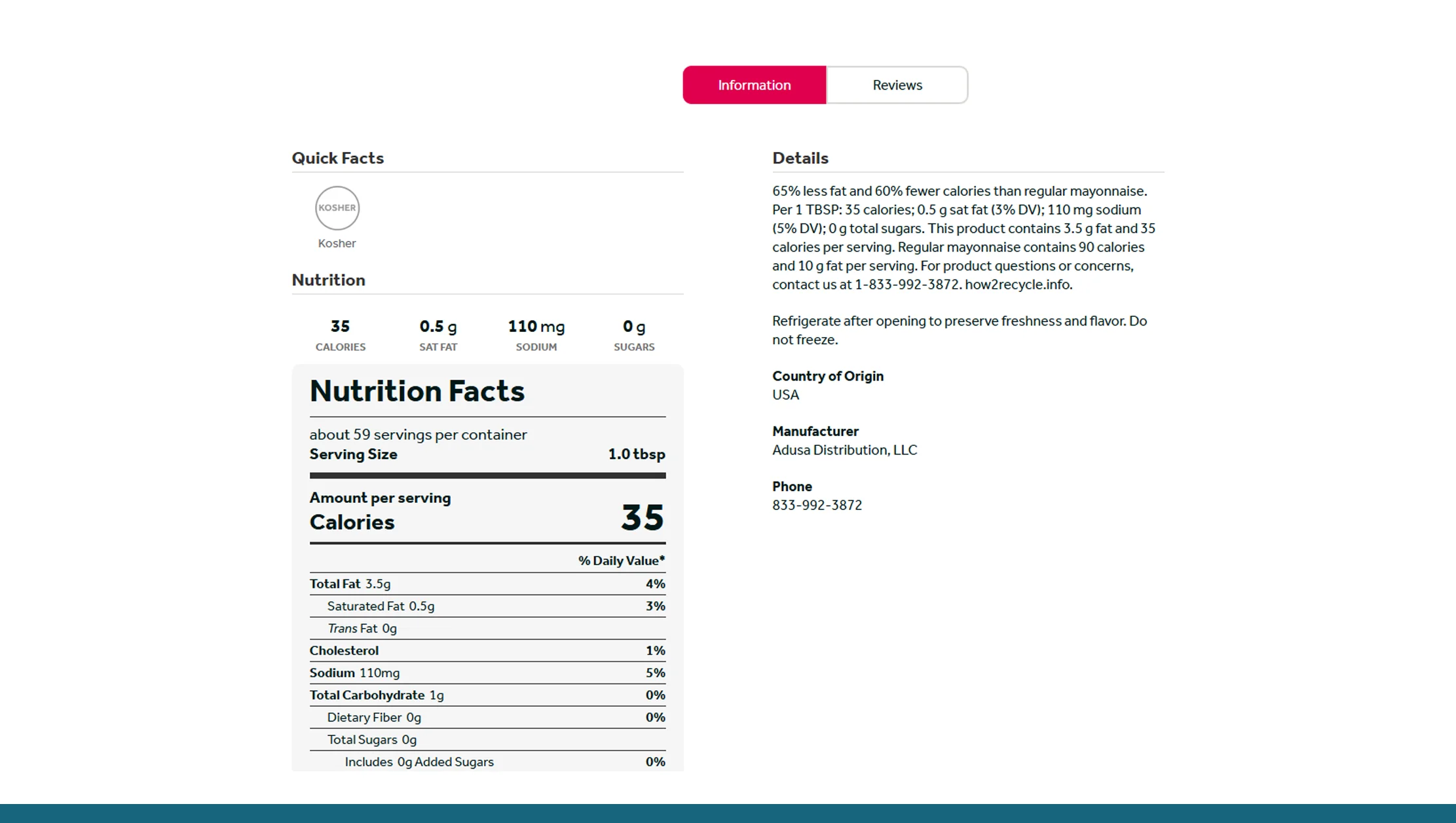

- description — short or long description; ingredients/allergens

- nutrition — nutrition facts (if present)

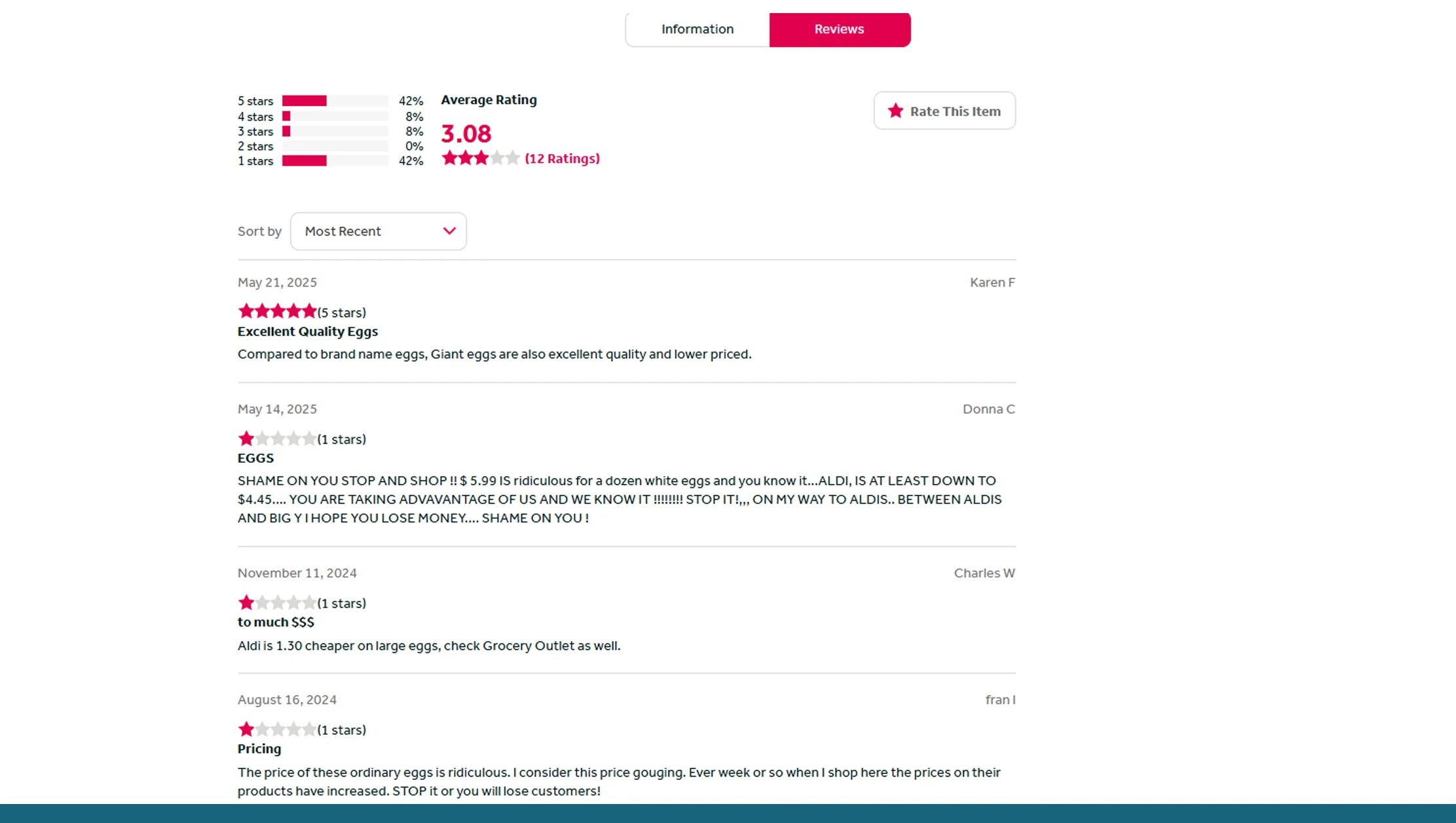

- ratings — customer rating & number of reviews (if shown)

- reviews — optionally scraped review excerpts

- last_updated — timestamp when the record was scraped

- url — canonical product URL on Stop & Shop

- promotions — structured promotions (coupon, BOGO, percent-off, valid_dates)

- fulfillment — delivery / pickup availability, shipping estimates

A good scraping API will normalize data across product types (grocery, deli, pharmacy) and across stores/regions.
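The field list above maps naturally onto a typed record. The following is a minimal sketch in Python, assuming a subset of those fields; the class name `ProductRecord`, the choice of which fields are required, and the rule that an active `promo_price` overrides `price` are illustrative assumptions, not a provider's actual contract.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProductRecord:
    """One scraped item; field names follow the list above (subset only)."""
    sku: str
    title: str
    price: float
    store_id: Optional[str] = None
    brand: Optional[str] = None
    category: list = field(default_factory=list)
    promo_price: Optional[float] = None
    original_price: Optional[float] = None
    availability: Optional[str] = None
    unit_size: Optional[str] = None
    images: list = field(default_factory=list)
    gtin: Optional[str] = None
    url: Optional[str] = None
    last_updated: Optional[str] = None

    @classmethod
    def from_dict(cls, raw: dict) -> "ProductRecord":
        # Ignore keys this sketch does not model instead of failing on them.
        known = {k: v for k, v in raw.items() if k in cls.__dataclass_fields__}
        return cls(**known)

    def effective_price(self) -> float:
        # Assumption: an active promo_price overrides the display price.
        return self.promo_price if self.promo_price is not None else self.price
```

Calling `ProductRecord.from_dict(payload)` on a provider response raises a `TypeError` if `sku`, `title`, or `price` is absent, which gives an early signal that the upstream shape has changed.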

Technical Architecture of Stop & Shop Grocery Scraping API

High-level architecture that production systems use:

1. Fetcher layer

- Requests the product, category, or search pages (or calls internal JSON endpoints the front-end uses).

- This can be a simple HTTP GET, or a headless browser render where JavaScript is required.

2. Rotating network layer

- Manages pools of IPs / proxies, user-agent rotation, TLS fingerprinting minimization, and connection reuse to avoid easy blocking.

- Keep this layer within the target site's terms and rate limits; its job is reliable, polite access, not circumventing protections.

3. Renderer (optional)

- Headless browser (e.g., Playwright / Puppeteer) to render JS-heavy pages or to execute required client-side code.

4. Parser / extractor

- Extracts structured data using JSON parsing, DOM selectors, or schema.org microdata.

5. Normalization & enrichment

- Convert to a canonical schema, clean units, normalize currencies, map categories to your taxonomy, dedupe images.

6. Storage & caching

- Time-series or document DB (e.g., PostgreSQL, Elasticsearch, or a cloud data warehouse).

- Cache aggressively for expensive endpoints.

7. API layer

- Provide REST or GraphQL endpoints, filtering, pagination, webhooks, and export options (CSV, JSON).

8. Monitoring & alerting

- Track failures, changes to page structure, and data drift.

This layered design makes your scraping API maintainable and scalable.
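As an illustration of the parser / extractor step, here is a minimal sketch that pulls schema.org product data out of a page using only the Python standard library. It reads JSON-LD script blocks rather than the microdata attributes mentioned above, because JSON-LD is simpler to parse. Whether Stop & Shop pages embed such blocks is an assumption, and the names `JsonLdExtractor` and `extract_product` are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # skip malformed blocks rather than abort the whole page


def extract_product(html: str):
    """Return the first schema.org Product object found, or None."""
    parser = JsonLdExtractor()
    parser.feed(html)
    for block in parser.blocks:
        if isinstance(block, dict) and block.get("@type") == "Product":
            return block
    return None
```

Returning `None` instead of raising lets the caller fall back to DOM selectors or an internal JSON endpoint when no structured block is present.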

How Stop & Shop API & Stop & Shop Scraping API Endpoints Work

Stop & Shop runs a modern e-commerce stack. While there is no widely advertised public developer API, the site and mobile app call internal endpoints that serve JSON for product search, product details, cart, and checkout. Community projects and developer posts confirm the presence of such endpoints: a small open-source Go client attempts to interact with Stop & Shop internals, and community scraping discussions report encountering “secret” API endpoints visible in the network debugger.

What to expect:

- Search and category endpoints: return paginated product lists with price and availability metadata.

- Product detail endpoints: provide structured JSON for a product (title, images, price, variants).

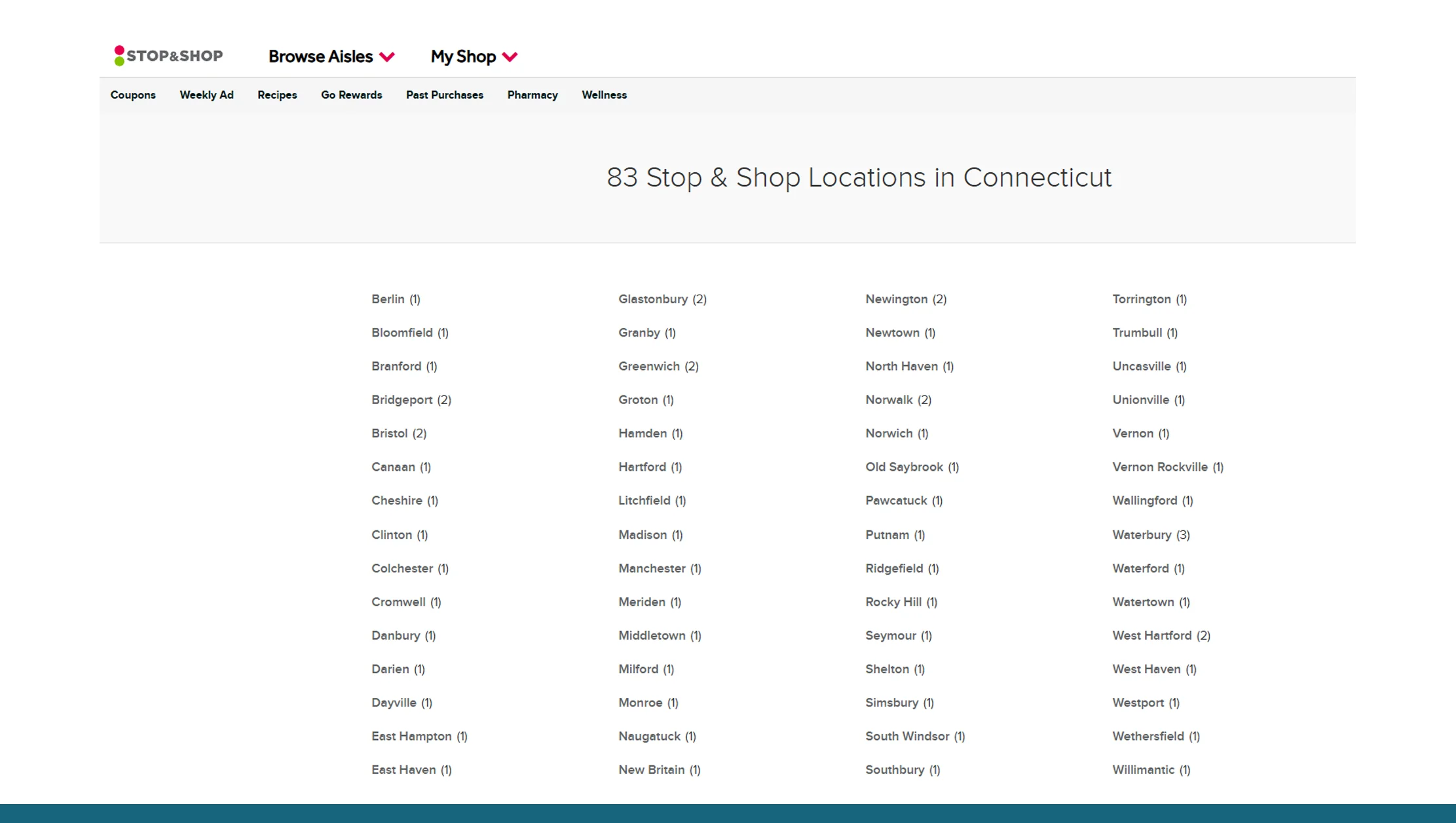

- Location/store endpoints: allow determining what is available at a particular Stop & Shop store for pickup/delivery.

- Dynamic tokens & auth: some calls require session tokens or client-side computed headers; these can change frequently.

- Anti-bot & rate limiting: Stop & Shop and other grocery sites employ rate limits, CAPTCHAs, and bot defenses; expect intermittent blocking.

Because these internals are not documented and can change without notice, a managed scraping API or periodic structural monitoring is important for long-term reliability.
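Structural monitoring can start very simply: check each record for the fields you depend on and watch the miss rate over time. The sketch below assumes records are plain dictionaries; the `REQUIRED_FIELDS` tuple is an illustrative contract, not something Stop & Shop or any provider defines.

```python
REQUIRED_FIELDS = ("sku", "title", "price", "availability")  # assumed contract


def missing_fields(record: dict, required=REQUIRED_FIELDS) -> list:
    """Return required keys that are absent, None, or empty strings."""
    return [k for k in required if record.get(k) in (None, "")]


def missing_rate(records: list, required=REQUIRED_FIELDS) -> dict:
    """Share of records missing each field, as a fraction between 0 and 1."""
    total = len(records) or 1  # avoid division by zero on an empty batch
    return {
        k: sum(1 for r in records if r.get(k) in (None, "")) / total
        for k in required
    }
```

A sudden jump in `missing_rate(batch)["price"]` from near zero to a large fraction is a strong hint that an endpoint or page layout changed, and is a reasonable trigger for an alert.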

Best Practices for Scraping Stop & Shop API Data Safely

- Respect robots.txt and terms — robots.txt describes crawler rules but is not law; still, treat it as guidance and seek permission when in doubt.

- Use official partner channels when possible — if Stop & Shop offers a partner data feed or vendor API (contact Vendor Relations), prefer that.

- Cache aggressively — grocery catalogs change, but not every minute. Cache product detail responses for a sensible TTL (e.g., minutes for price-sensitive SKUs, hours for static metadata).

- Throttle requests — design polite, distributed scraping that avoids bursts which could degrade target services.

- Monitor structural changes — set up automated tests that detect when expected fields are missing — that’s the earliest sign of a site update.

- Normalize and validate data — prices, units, and SKUs need cleaning and cross-checking.

- Be transparent with customers — if you provide scraped data to customers, disclose data source policies and how often data is refreshed.

- Secure storing of credentials — if you obtain partner credentials or tokens, store them securely and rotate them per security best practices.

High-level operational best practices like these reduce risk and improve data quality. Avoid techniques that would intentionally evade protections or break security mechanisms.
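The caching advice above can be sketched as a small in-memory cache with a time-to-live. This is a minimal illustration, assuming a single process; `TTLCache` and `cached_fetch` are hypothetical names, and a production system would more likely use a shared cache.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: force a fresh fetch
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self._clock() + self.ttl, value)


def cached_fetch(cache: TTLCache, key, fetch):
    """Return the cached value for key, calling fetch() only on a miss."""
    value = cache.get(key)
    if value is None:
        value = fetch()
        cache.set(key, value)
    return value
```

One way to apply the TTL guidance is to keep two instances, for example a short-lived cache for prices and a longer-lived one for static metadata such as images and descriptions.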

Legal Considerations When Using Stop & Shop Scraping API

Scraping public websites sits in a gray area legally and can vary by jurisdiction. Key considerations:

- Terms of Service (ToS): many retailers prohibit automated scraping in their ToS. Violating the ToS can lead to IP blocks or, in extreme cases, legal action.

- Robots.txt: not legally binding everywhere, but ignoring it can lead to stronger enforcement.

- Copyright & database rights: collecting large swathes of content (images, entire product catalogs) may run afoul of copyright or database rights in some countries.

- User data & privacy: avoid scraping personal user data or login-protected content.

- Commercial use & fair competition: check local laws; courts in different jurisdictions have ruled differently on scraping cases.

Recommendation: consult legal counsel for production use, especially for large-scale commercial data collection. If you need guaranteed, compliant access, pursue an official partnership or licensed feed from Stop & Shop or consider buying data from reputable data vendors.

Real-World Use Cases of Stop & Shop Grocery Scraping API

Once you have reliable Stop & Shop data, here are projects you can build:

- Price intelligence dashboard — track price changes for a basket of SKUs over time, visualize promotions, and flag opportunities for repricing.

- Availability alerts — notify when an out-of-stock item returns to stock at certain stores.

- Promotions aggregator — compile weekly ad specials, produce competitor comparison reports.

- Catalog enrichment — create a product master with images, UPCs, ingredients, and nutrition data for marketplaces.

- Regional assortment analysis — compare which SKUs are offered in different Stop & Shop regions.

- Demand forecasting — correlate price and stock changes with seasonality and promotions to forecast demand.

Each of these use cases benefits from structured, time-series records and a robust ETL pipeline to store and analyze historical snapshots.

Implementation Examples of Scraping Stop & Shop API Data

Below are conceptual examples showing how a managed Stop & Shop scraping API might behave and how you would call it. These are sample response shapes — actual providers may differ.

Example: simple REST call to fetch product by SKU

Request

GET https://api.yourscrapeprovider.com/stopandshop/products?sku=12345678&store=024

Sample JSON response

{
  "store_id": "024",
  "sku": "12345678",
  "title": "Organic Whole Milk 1L",
  "brand": "LocalFarm",
  "category": ["Dairy", "Milk"],
  "price": 3.99,
  "original_price": 4.49,
  "promo_price": 3.49,
  "availability": "in_stock",
  "unit_size": "1 L",
  "images": ["https://.../img1.jpg"],
  "description": "Organic whole milk from local farms. Pasteurized.",
  "gtin": "0123456789012",
  "last_updated": "2025-09-12T09:30:00Z",
  "url": "https://stopandshop.com/product/12345678"
}

Example: search endpoint

Request

GET https://api.yourscrapeprovider.com/stopandshop/search?q=cereal&limit=24&page=1&store=024

Response — paginated list with total_results, page, products[].
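Walking a paginated search response like the one described above might look like the following sketch. It assumes the response carries `total_results` and `products` keys as stated; `fetch_page` is a hypothetical callable that you supply, which takes a page number and returns the decoded JSON for that page.

```python
from typing import Callable, Iterator


def iter_search_results(fetch_page: Callable[[int], dict],
                        max_pages: int = 50) -> Iterator[dict]:
    """Yield products page by page until total_results is reached."""
    seen = 0
    for page in range(1, max_pages + 1):
        payload = fetch_page(page)
        products = payload.get("products", [])
        if not products:
            break  # empty page: nothing more to read
        yield from products
        seen += len(products)
        total = payload.get("total_results")
        if total is not None and seen >= total:
            break  # all advertised results have been yielded
```

In practice `fetch_page` would wrap the GET request shown above with the `page` parameter filled in. Keeping the HTTP call outside the loop makes the pagination logic easy to test with canned responses, and `max_pages` bounds the work if the provider never reports a total.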

Scaling & Reliability of Stop & Shop Scraping API Solutions

When building or buying a scraping API for Stop & Shop, think about:

- Change detection — pages and JSON schemas change; implement monitors that detect missing fields and auto-alert engineers.

- Backoff & retry — use exponential backoff and jitter for retries; avoid saturating target servers.

- Distributed architecture — use a queue (e.g., Redis / RabbitMQ) to schedule jobs and workers to process them.

- Regional store differences — Stop & Shop inventory and price can vary by store; ensure your crawler can target specific store IDs or ZIP codes.

- Data freshness vs. cost — decide which SKUs need near real-time updates and which can be polled daily to reduce cost.

Note: favor resilient, maintainable, and ethical practices over evasion techniques or tools for defeating CAPTCHAs.
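The backoff-and-retry item above can be sketched as exponential backoff with full jitter. This is a minimal illustration with assumed defaults (five attempts, one-second base delay, 60-second cap); the function name and parameters are hypothetical, and real code would usually retry only on specific transient errors.

```python
import random
import time


def retry_with_backoff(call, max_attempts=5, base_delay=1.0, max_delay=60.0,
                       sleep=time.sleep, rng=random.random):
    """Run call() until it succeeds, waiting longer after each failure."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # The cap doubles per attempt: base, 2*base, 4*base, ... up to max_delay.
            cap = min(max_delay, base_delay * (2 ** attempt))
            # Full jitter: wait a random fraction of the cap so that many
            # workers do not retry at the same instant.
            sleep(rng() * cap)
```

The `sleep` and `rng` parameters exist only so the timing can be replaced in tests; callers would normally leave them at their defaults.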

Third-Party Providers Offering Stop & Shop Grocery Scraping API

If you prefer not to build everything in-house, there are specialized vendors and Web Scraping Services providers who advertise Stop & Shop data extraction as a ready-made service. They typically offer:

- Out-of-the-box endpoints for product detail, search, category, and store inventory.

- Normalization & unified schema so the output stays consistent even when Stop & Shop changes.

- Delivery options (API, FTP/CSV, webhooks).

- Support & SLAs for uptime and change management.

Examples of services and pages that list Stop & Shop scraping APIs exist and advertise features such as real-time extraction and custom data points. If you choose a vendor, validate sample outputs and ask about their change-detection processes and legal compliance steps.

Troubleshooting Common Stop & Shop API & Scraping Issues

Missing fields after a site update

- Symptom: your parser suddenly returns an empty price or images.

- Cause: site changed structure or endpoint parameters.

- Action: fetch a sample product page and re-run the parsers; fall back to a higher-level search endpoint; alert developers.

CAPTCHA or blocking

- Symptom: requests start returning CAPTCHAs or 403s.

- Action: slow down requests, make sure your requests send legitimate headers, and ask your provider's support team whether they have a compliant approach.

Store-specific discrepancies

- Symptom: product shows in some ZIP codes but not others.

- Action: re-run with the target store's ZIP / store ID; include fulfillment type (pickup/delivery) in query.

Data drift (incorrect unit parsing)

- Symptom: unit_size shows “12” instead of “12 oz”.

- Action: improve unit extraction and normalization library; add unit tests for parsing.

Keeping a test suite of sample pages and golden records is invaluable for swift debugging.
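For the unit-parsing case, a small parser plus a handful of golden inputs goes a long way. The sketch below assumes sizes arrive as strings such as "12 oz" or "500g"; the alias table is a small illustrative sample rather than a complete unit vocabulary, and `parse_unit_size` is a hypothetical helper name.

```python
import re

# A number, optional whitespace, then a unit such as "oz", "g", "L" or "fl oz".
_UNIT_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*(fl\.?\s*oz|[a-z]+)\.?\s*$",
                      re.IGNORECASE)

# Illustrative aliases only; extend to match the data you actually see.
_ALIASES = {"ounce": "oz", "ounces": "oz", "lbs": "lb", "pound": "lb",
            "pounds": "lb", "gram": "g", "grams": "g", "liter": "l",
            "liters": "l", "litre": "l"}


def parse_unit_size(text):
    """Return (quantity, unit), or None when no unit is present."""
    match = _UNIT_RE.match(text or "")
    if not match:
        return None  # e.g. "12" with no unit: flag it instead of guessing
    quantity = float(match.group(1))
    unit = re.sub(r"[.\s]+", " ", match.group(2).lower()).strip()
    return quantity, _ALIASES.get(unit, unit)
```

Returning `None` for a bare "12" turns the silent data-drift symptom above into an explicit failure that a golden-record test can catch.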

Cost Considerations for Stop & Shop Scraping API & Data Extraction

If you build in-house, costs include development, proxy services, headless-browser infrastructure, and monitoring.

If you buy from a vendor, common pricing models are:

- Per-API-call — pay per request (good for intermittent use).

- Monthly quota — fixed number of requests per month, with overage charges.

- Per-record / per-row — price based on volume of records returned.

- Subscription tiers — basic (low cadence) to premium (real-time + support + custom fields).

When evaluating vendors, ask for sample pricing based on your SKU list and refresh cadence. Cheaper options often break frequently; factor in reliability and support.
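To compare pricing models, it helps to estimate request volume first. The sketch below assumes one request per SKU per store per refresh; every price in the usage note is a made-up placeholder, not a quote from any vendor, and the function names are hypothetical.

```python
def monthly_requests(skus: int, stores: int, refreshes_per_day: float,
                     days: int = 30) -> int:
    """Assume one request per SKU per store per refresh."""
    return int(skus * stores * refreshes_per_day * days)


def per_call_cost(requests_per_month: int, price_per_1k: float) -> float:
    """Per-API-call model: pay for every request."""
    return requests_per_month / 1000 * price_per_1k


def quota_cost(requests_per_month: int, base_fee: float, included: int,
               overage_per_1k: float) -> float:
    """Monthly quota model: flat fee plus overage beyond the included volume."""
    overage = max(0, requests_per_month - included)
    return base_fee + overage / 1000 * overage_per_1k
```

For example, 200 SKUs across 5 stores refreshed 4 times a day comes to 120,000 requests in a 30-day month. At a hypothetical 2.00 per thousand calls that is 240.00, while a hypothetical plan with a 150.00 base fee, 100,000 included requests, and 3.00 per thousand overage comes to 210.00.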

Checklist Before Using Stop & Shop Grocery Scraping API

Before you start scraping Stop & Shop data at scale, tick these boxes:

- Confirm what data you actually need (minimize scope).

- Check Stop & Shop terms and any partner/API programs.

- Identify retail regions / store IDs you need to target.

- Choose: build in-house vs managed provider.

- Design the data model and retention policy (how long will you keep historical snapshots?).

- Plan caching / TTL strategy for price vs static metadata.

- Set up monitoring for structural changes and data quality checks.

- Prepare legal review for commercial use.

- Have fallback channels if an endpoint changes unexpectedly.

Sample Code Example to Scrape Stop & Shop API Data

Below is a conceptual example showing how a client might call a managed scraping API to fetch product details. This is intentionally vendor-neutral and omits any details used to circumvent protections.

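import requests
from datetime import datetime, timezone

API_KEY = "your_api_key_here"  # placeholder; load from a secret store in practice
BASE = "https://api.yourscrapeprovider.com/stopandshop"  # placeholder provider URL


def fetch_product(sku, store_id):
    """Fetch one product record from the managed scraping API."""
    params = {"sku": sku, "store": store_id}
    headers = {"Authorization": f"Bearer {API_KEY}"}
    resp = requests.get(f"{BASE}/products", params=params, headers=headers, timeout=15)
    resp.raise_for_status()  # turn HTTP 4xx/5xx responses into exceptions
    data = resp.json()

    # Basic validation: require a title and a price before accepting the record
    if not data.get("title") or "price" not in data:
        raise ValueError("Incomplete data")

    # Record when this client fetched the data, as a UTC ISO 8601 timestamp
    data["fetched_at"] = datetime.now(timezone.utc).isoformat().replace("+00:00", "Z")
    return data


if __name__ == "__main__":
    product = fetch_product("12345678", "024")
    print(product["title"], product["price"])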

This example shows a straightforward client call to a provider API — clean, auditable, and safe.

Community Insights on Stop & Shop Scraping API & Endpoints

Developer discussions and open-source work indicate people have explored Stop & Shop internal APIs and community tools exist to interact with them. Community posts also show that scraping efforts can trigger blocks, and that the internal endpoints are sometimes considered “secret” by users who discover them in browser DevTools. If you plan to rely on internal endpoints directly, be aware they can (and will) change without notice, which is why many teams choose a managed scraping API or build robust detection & maintenance processes.

Alternatives to Stop & Shop API for Grocery Data Extraction

If Stop & Shop scraping becomes brittle or restricted, consider:

- Grocery aggregator APIs — some aggregators and market data providers license grocery catalog data across retailers.

- Manufacturer / brand feeds — for product metadata and images, brands sometimes provide product data feeds that cover multiple retailers.

- Syndicated data providers — vendors that aggregate retail data across chains (paid).

- Official partnerships — vendor/brand partnerships with Stop & Shop for inventory and pricing data sharing.

Each alternative brings tradeoffs on cost, coverage, and freshness.

Example Project Using Stop & Shop Grocery Scraping API Data

A practical project is to monitor a “basket” of core SKUs and generate a weekly report showing:

- Current price vs last week

- Store-by-store availability heatmap

- Promotions that affected basket price

- Recommended repricing action for a marketplace

Architecture:

- Keep a list of SKUs and store ZIPs.

- Poll product endpoints daily, store time-series.

- Run diffs and flag >X% price moves or stock changes.

- Generate weekly PDF/CSV report.

This is the kind of analytics that marketing or category teams find valuable.
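The diff step in the architecture above can be sketched as a comparison of two price snapshots. This is a minimal illustration assuming each snapshot is a `{sku: price}` dictionary; the 5% default threshold stands in for the X in the list and is an arbitrary choice, and `flag_price_moves` is a hypothetical name.

```python
def flag_price_moves(previous: dict, current: dict,
                     threshold_pct: float = 5.0) -> list:
    """Return SKUs whose price moved by more than threshold_pct percent."""
    flagged = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if not old_price:
            continue  # new SKU, or missing/zero baseline: nothing to compare
        change_pct = (new_price - old_price) / old_price * 100
        if abs(change_pct) > threshold_pct:
            flagged.append({
                "sku": sku,
                "old": old_price,
                "new": new_price,
                "change_pct": round(change_pct, 2),
            })
    return flagged
```

The same shape works for stock changes: compare the availability values of two snapshots instead of prices and flag any SKU whose status differs.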

Myths & Reality About Stop & Shop API and Scraping

- Myth: “There’s a public, stable Stop & Shop API for product data for developers.”

  Reality: Stop & Shop does not broadly advertise a public product API for external developers; most programmatic access is via internal endpoints or partner feeds. Use third-party providers or partner with Stop & Shop for official access.

- Myth: “If we discover an internal JSON endpoint, it will stay stable.”

  Reality: Internal APIs can change without warning; they’re designed for the web/app frontend, not external consumption.

- Myth: “Scraping is always cheap.”

  Reality: Proper scraping at scale requires infrastructure, monitoring, and maintenance; cost and effort add up.

Choosing Between In-House Stop & Shop Scraping API vs. Managed Provider

Choose managed provider if:

- You need fast time-to-data.

- You prefer SLA and support.

- You want normalized output and fewer engineering hours.

Build in-house if:

- You have unique data needs or proprietary processing.

- You need very high control over cadence and architecture.

- You can invest in a DevOps/engineering team to maintain it.

When evaluating vendors, request:

- A live demo with your SKUs.

- Their schema contract and sample JSON.

- SLA and change management process.

- Legal & privacy stance.

- Pricing for your expected volume.

Security & Compliance for Stop & Shop Grocery Scraping API Data

- Keep API keys secure and rotate them periodically.

- Ensure PII is not scraped or stored by mistake.

- Limit data access within your organization by role.

- Use HTTPS for all data transfers.

- If storing historical snapshots, ensure retention policies comply with regulations and contractual obligations.
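One simple way to keep API keys out of source code is to read them from the environment at startup. The sketch below is a minimal illustration; the variable name `SCRAPE_API_KEY` is an assumption, and teams with a secret manager would read from that instead.

```python
import os


def load_api_key(var_name: str = "SCRAPE_API_KEY") -> str:
    """Read the provider key from the environment instead of source code."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail fast with a clear message rather than sending unauthenticated requests.
        raise RuntimeError(f"Set the {var_name} environment variable before running.")
    return key
```

Rotating the key then only requires changing the deployed environment, not the code.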

Final Recommendations for Stop & Shop Scraping API Usage

- Start small — identify 50–200 core SKUs across stores to validate the pipeline before scaling.

- Prefer official channels — contact Stop & Shop for partnership options if you need enterprise-level access.

- Use a managed scraping API when you want immediate results and lower maintenance overhead. (There are multiple vendors offering Stop & Shop scraping services.)

- Invest in monitoring — structural change detection and alerting will save the most time.

- Consult legal counsel for commercial-scale scraping use to ensure compliance with terms and local laws.

Resources for Stop & Shop API & Stop & Shop Grocery Scraping API

- Stop & Shop official website (store & catalog): Stop & Shop home.

- Example vendor pages offering Stop & Shop scraping APIs (for comparison and feature ideas).

- Community discussions about scraping Stop & Shop internals — useful to understand the on-the-ground challenges.

- Example open-source library exploring Stop & Shop interactions (GitHub client).

Conclusion: Why Use Stop & Shop Scraping API to Scrape Grocery Data

Stop & Shop data is highly valuable for pricing intelligence, assortment analysis, competitor benchmarking, and promotional tracking. However, access is not straightforward since there isn’t a broadly public Stop & Shop API for developers.

The practical choices are:

- Pursue official partner data access if available.

- Use a third-party Stop & Shop Scraping API for structured product, pricing, and inventory data.

- Or build a custom Stop & Shop Grocery Scraping API pipeline in-house.

For businesses that want ready-to-use, reliable data pipelines, Real Data API is a strong solution. It provides structured grocery datasets, supports Stop & Shop Scraping API integration, and ensures consistent delivery without the hassle of constant maintenance.

By combining the power of Stop & Shop Scraping API solutions with a proven provider like Real Data API, businesses can efficiently scrape Stop & Shop API data, enrich product catalogs, monitor competitors, and make data-driven decisions with confidence.