Introduction

Web scraping has rapidly evolved from a niche developer skill into a cornerstone of modern data-driven decision-making. Businesses today rely on structured, real-time data extracted from websites to gain a competitive edge in industries like e-commerce, finance, travel, and healthcare. While Python and Java dominate conversations about scraping, Ruby is a powerful and elegant language often overlooked in this space. Its clean syntax, strong library ecosystem, and flexibility make it an excellent choice for web scraping projects.

In this guide, we’ll dive deep into web scraping in Ruby, covering everything from the basics to advanced techniques. Whether you’re a beginner or an experienced developer, by the end of this article, you’ll understand how to build a complete scraper in Ruby and know when to scale using Web Scraping Services, Enterprise Web Crawling Services, or a Web Scraping API like RealDataAPI.

Why Ruby for Web Scraping?

Ruby is widely known for powering web frameworks like Ruby on Rails, but its features also make it ideal for scraping tasks:

Readable and Elegant Syntax – Ruby’s natural, almost English-like syntax reduces boilerplate code and makes scrapers easy to write and maintain.

Gem Ecosystem – RubyGems provides access to powerful scraping tools like Nokogiri, Mechanize, HTTParty, and Watir.

Flexibility – Ruby allows seamless integration with APIs, databases, and cloud systems for advanced crawling.

Scalability – Ruby scrapers can evolve into enterprise-grade crawlers with frameworks and third-party APIs such as RealDataAPI.

If you’re already using Ruby for backend development, adding scraping to your toolset will feel like a natural extension.

Key Ruby Libraries for Web Scraping

Ruby’s ecosystem offers a set of gems that simplify different parts of the scraping workflow:

Nokogiri – The most popular Ruby gem for parsing HTML and XML. It lets you navigate documents with CSS selectors or XPath.

HTTParty – A great gem for sending HTTP requests and working with JSON APIs.

Mechanize – Designed for automating interaction with websites, handling forms, cookies, and sessions.

Watir – A browser automation gem built on Selenium WebDriver. It can run browsers headlessly, which makes it a good fit for scraping JavaScript-heavy websites.

Capybara + Selenium – Useful when you need to scrape dynamic pages that require user interactions.

These tools form the backbone of Ruby-based scrapers and can be combined depending on your use case.

Step 1: Setting Up Your Ruby Environment

Before we start building, ensure Ruby is installed on your system. You can check this by running:

```shell
ruby -v
```

If Ruby isn't installed, download it from ruby-lang.org.

Then install Bundler to manage dependencies (Bundler ships with Ruby 2.6 and later, so this is only needed on older versions):

```shell
gem install bundler
```

Create a new project folder and initialize a Gemfile:

```shell
mkdir ruby_scraper && cd ruby_scraper
bundle init
```

Now add the gems used in this guide to your Gemfile:

```ruby
gem 'nokogiri'
gem 'httparty'
gem 'mechanize'
gem 'watir'
```

Run bundle install to install them.

Step 2: Scraping a Static Website with Nokogiri

Let’s start simple: scraping quotes from a test website.

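```ruby
require 'nokogiri'
require 'open-uri'

url = 'http://quotes.toscrape.com/'

# Download the page (open-uri provides URI.open) and parse it into a document
html = URI.open(url)
doc = Nokogiri::HTML(html)

# Each quote sits in an element with the class "quote"
doc.css('.quote').each do |quote|
  text = quote.css('.text').text
  author = quote.css('.author').text
  puts "#{text} - #{author}"
end
```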

Here’s what happens:

- open-uri fetches the webpage.

- Nokogiri parses the HTML.

- CSS selectors extract quotes and authors.

This is the foundation of most scrapers.

Step 3: Scraping with HTTParty

Sometimes, APIs or JSON endpoints are easier to scrape than HTML. Here’s how to use HTTParty:

```ruby
require 'httparty'
require 'json'

# Request a JSON endpoint and parse the body into Ruby hashes
response = HTTParty.get('https://jsonplaceholder.typicode.com/posts')
posts = JSON.parse(response.body)

posts.first(5).each do |post|
  puts "#{post['title']} (Post ID: #{post['id']})"
end
```

HTTParty is especially useful for integrating Web Scraping API services like RealDataAPI, which return clean, structured data.

Step 4: Handling Logins with Mechanize

If a site requires login before accessing data, Mechanize is the perfect tool:

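```ruby
require 'mechanize'

agent = Mechanize.new
page = agent.get('http://example.com/login')

# Pick the login form by its action attribute
login_form = page.form_with(action: '/sessions')

# Mechanize exposes fields as methods named after them; this assumes the
# site's inputs are literally named "username" and "password"
login_form.username = 'your_username'
login_form.password = 'your_password'

dashboard = agent.submit(login_form)
puts dashboard.title
```

Mechanize automatically manages cookies and sessions, making it easier to scrape authenticated websites.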

Step 5: Scraping JavaScript-Heavy Websites with Watir

Modern websites often rely on JavaScript to render content dynamically. For those, use Watir, which is built on Selenium WebDriver and drives a real browser:

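```ruby
require 'watir'

# Start Chrome in headless mode (Chrome must be installed locally)
browser = Watir::Browser.new :chrome, headless: true

# goto waits for the page to finish loading before returning
browser.goto('https://example.com')
puts browser.h1.text

browser.close
```

Watir spins up a real browser session, executes JavaScript, and extracts rendered content.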

Best Practices for Web Scraping in Ruby

While Ruby makes scraping easier, you must follow best practices:

- Respect robots.txt – Always check whether scraping is allowed.

- Avoid Overloading Servers – Use rate limiting and polite delays.

- Rotate Proxies & User-Agents – Prevent IP blocking by simulating real users.

- Handle Errors Gracefully – Add retries for failed requests.

- Use APIs When Possible – If the site has an official or third-party API like RealDataAPI, use it instead of scraping raw HTML.
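Two of these practices, polite delays and retries, are easy to centralize. The sketch below is plain Ruby with no gems: with_retries re-runs a block with exponential backoff, and Throttle enforces a minimum gap between requests. Both names and their default values are illustrative assumptions; tune them per site.

```ruby
# Run the block, retrying up to `attempts` times in total on the listed
# errors and sleeping base_delay * 2**(n - 1) seconds after the nth failure.
def with_retries(attempts: 3, base_delay: 1.0, rescue_from: [StandardError])
  tries = 0
  begin
    tries += 1
    yield
  rescue *rescue_from
    raise if tries >= attempts # give up and re-raise the last error

    sleep(base_delay * (2**(tries - 1)))
    retry
  end
end

# Enforce a minimum interval (in seconds) between consecutive calls to #wait.
class Throttle
  def initialize(min_interval)
    @min_interval = min_interval
    @last = nil
  end

  def wait
    now = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    if @last && (elapsed = now - @last) < @min_interval
      sleep(@min_interval - elapsed)
    end
    @last = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  end
end
```

In a scraper you would call throttle.wait before each request and wrap the fetch itself, for example with_retries(rescue_from: [OpenURI::HTTPError, Net::OpenTimeout]) { URI.open(url).read }. Narrowing rescue_from this way retries network hiccups without hiding bugs in your own code.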

When to Use Web Scraping Services?

As projects scale, maintaining scrapers can become resource-heavy. That’s when outsourcing to professional Web Scraping Services or leveraging Enterprise Web Crawling Services makes sense. These solutions provide:

- Scalability – Extract millions of pages daily without infrastructure hassle.

- Data Accuracy – Dedicated teams ensure clean, structured datasets.

- Customization – Tailored scrapers for niche industries like travel, real estate, or finance.

- Compliance – Services help maintain ethical and legal scraping practices.

Scaling Ruby Scrapers with RealDataAPI

While Ruby gems are powerful, scaling large scrapers often requires more robust infrastructure. That’s where RealDataAPI comes in.

- Plug-and-Play API – Instead of maintaining scrapers, just query the API and get structured JSON.

- Geo-Targeting – Scrape localized data from different regions.

- Anti-Bot Bypass – Handle CAPTCHAs, IP bans, and rotating proxies automatically.

- Enterprise Solutions – Perfect for companies that need Enterprise Web Crawling Services with guaranteed uptime and support.

By combining Ruby scrapers with RealDataAPI, you can enjoy the flexibility of custom code while outsourcing the heavy lifting of scaling.

Example: Ruby + RealDataAPI

Here’s how you can fetch structured data with Ruby and RealDataAPI:

```ruby
require 'httparty'
require 'json'

api_key = "YOUR_API_KEY"
url = "https://api.realdataapi.com/scrape?url....com"

# Authenticate with a bearer token and parse the JSON response
response = HTTParty.get(url, headers: { "Authorization" => "Bearer #{api_key}" })
data = JSON.parse(response.body)
puts data
```

This simple integration lets you pull structured data without writing your own parsing or anti-bot handling.

Use Cases of Web Scraping in Ruby

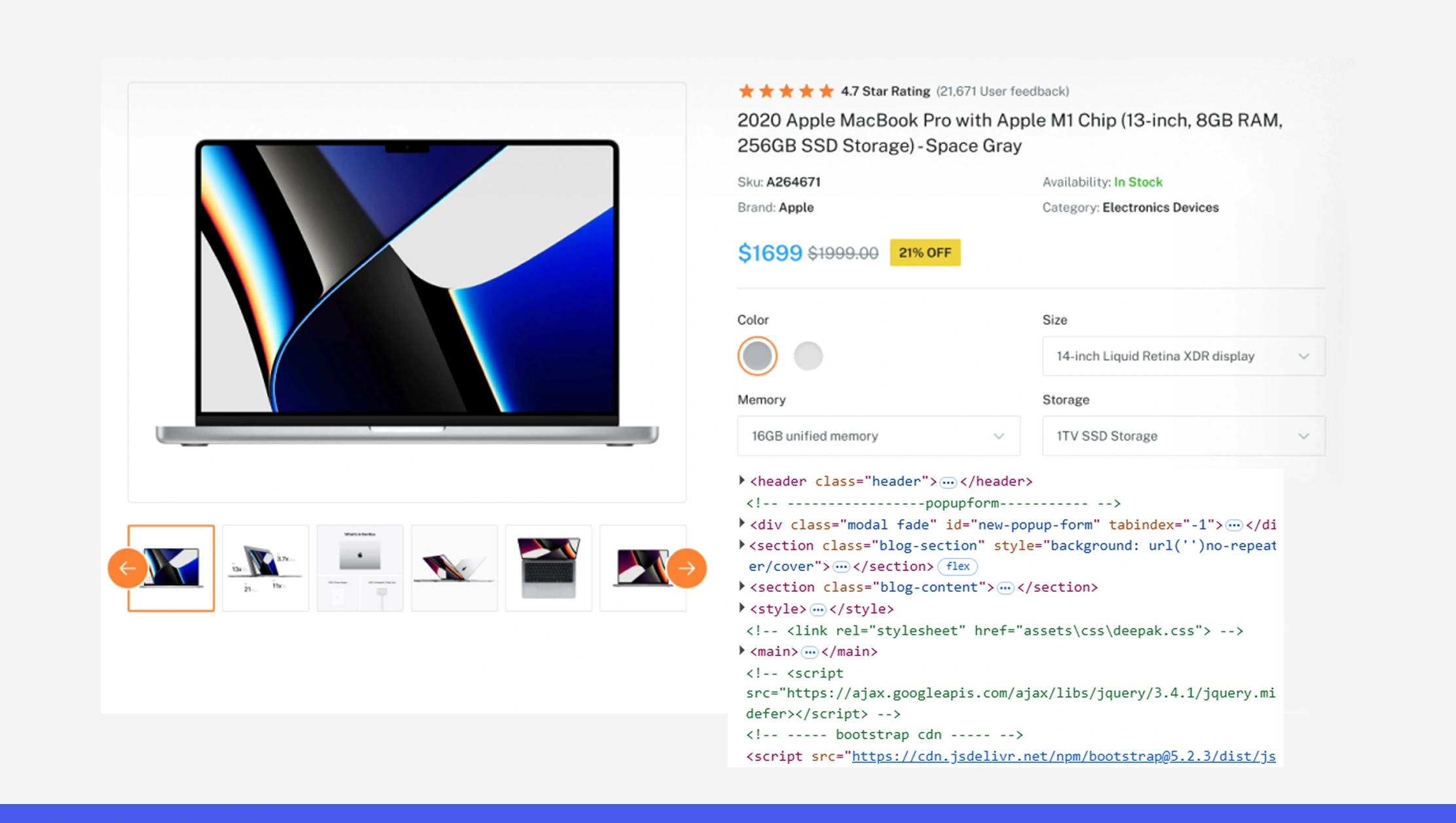

- E-Commerce – Extract product details, prices, and reviews for competitive analysis.

- Finance – Track stock prices, news sentiment, and cryptocurrency data.

- Travel – Aggregate hotel and flight information.

- Healthcare – Collect clinical trial data or pharmaceutical research.

- Job Market – Scrape job boards for openings and salary insights.

Ruby’s flexibility makes it suitable across industries, especially when paired with Web Scraping Services or APIs like RealDataAPI.

Challenges of Web Scraping in Ruby

Despite its strengths, Ruby-based scraping comes with challenges:

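- Performance – CRuby is generally slower than JIT-compiled languages like Java for CPU-heavy work such as parsing very large documents, although scraping is often I/O-bound, which narrows the gap.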

- Concurrency – Requires extra effort with gems like Sidekiq or EventMachine.

- Dynamic Websites – Need headless browsers, which can increase resource usage.
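For I/O-bound scraping you don't necessarily need an extra gem: CRuby releases its global VM lock while a thread waits on the network, so a small pool of plain threads can overlap requests. A minimal sketch using only core Ruby; concurrent_map is a hypothetical helper, and the worker count is an assumption to keep low so you stay polite to the target site.

```ruby
# Apply the block to every item using at most `workers` threads.
# Results come back in the same order as the input.
def concurrent_map(items, workers: 4, &block)
  queue = Queue.new
  items.each_with_index { |item, index| queue << [item, index] }

  pool_size = [workers, items.size].min
  pool_size.times { queue << nil } # one stop signal per worker

  results = Array.new(items.size)
  threads = Array.new(pool_size) do
    Thread.new do
      while (job = queue.pop)
        item, index = job
        results[index] = block.call(item)
      end
    end
  end

  threads.each(&:join) # join also re-raises any error from a worker
  results
end
```

Usage: concurrent_map(urls, workers: 4) { |url| HTTParty.get(url).code }. Because each result is written to its own index, the output order matches the input regardless of which request finishes first.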

For small to medium-scale projects, Ruby works perfectly. But for enterprise-level projects, consider outsourcing to Enterprise Web Crawling Services or integrating RealDataAPI.

Future of Web Scraping with Ruby

Ruby continues to evolve, and its ecosystem supports modern scraping needs. With increasing reliance on APIs, headless browsers, and cloud-based scrapers, Ruby remains a solid choice for developers who value clean code and rapid prototyping.

In the future, expect:

- Better Web Scraping API integrations.

- Enhanced libraries for handling JavaScript-heavy sites.

- Hybrid solutions combining Ruby scrapers with RealDataAPI for scalability.

Conclusion

Web scraping in Ruby combines elegance, flexibility, and power. From parsing static HTML with Nokogiri to navigating dynamic content with Watir, Ruby provides developers with all the tools they need. But as scraping projects grow in scale and complexity, relying solely on custom scripts can be inefficient.

That’s where professional Web Scraping Services, Enterprise Web Crawling Services, and powerful solutions like RealDataAPI step in to ensure scalability, reliability, and compliance.

If you’re serious about building scrapers with Ruby, start small, master the basics, and then integrate with enterprise-grade APIs for maximum efficiency. Ruby may not be the loudest language in the scraping world, but it certainly is one of the most elegant and effective.