Introduction

In today’s digital age, data is often referred to as the new oil. Businesses rely on data to analyze markets, understand customers, monitor competitors, and make informed decisions. But much of this data sits on web pages as unstructured HTML rather than in downloadable, structured datasets. That’s where web scraping comes in.

Python has become the go-to programming language for building scrapers because of its simplicity, rich ecosystem of libraries, and ability to scale. Whether you are a beginner curious about automating data collection or a business looking for Enterprise Web Crawling Services, Python gives you the flexibility to build scrapers tailored to your needs.

This ultimate guide will walk you through everything you need to know about web scraping with Python—from the basics to advanced scraping techniques, libraries, best practices, and enterprise-level solutions like RealDataAPI and Web Scraping Services.

What is Web Scraping?

Web scraping is the process of automatically extracting information from websites. It involves:

- Sending a request to a website.

- Retrieving the HTML content.

- Parsing the data to extract meaningful information (like product details, job listings, or reviews).

- Storing the data in a structured format (CSV, JSON, database).
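To make these four steps concrete, here is a minimal sketch that uses only Python’s standard library (`urllib.request`, `html.parser`, and `json`) rather than the third-party libraries covered later. Extracting every link on the page is an arbitrary choice for illustration. The `fetch`/`parse`/`store` function names, the user-agent string, and the `links.json` file name are hypothetical.

```python
from __future__ import annotations

import json
import urllib.request
from html.parser import HTMLParser


def fetch(url: str, timeout: float = 10.0) -> str:
    """Steps 1-2: send a request and retrieve the HTML as text."""
    # Hypothetical user-agent string identifying the scraper
    request = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Step 3: collect the text and href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[dict[str, str]] = []
        self._href: str | None = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only keep text that sits inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append({"text": "".join(self._text).strip(), "href": self._href})
            self._href = None


def parse(html: str) -> list[dict[str, str]]:
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


def store(records: list[dict[str, str]], path: str) -> None:
    """Step 4: save the records as JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    links = parse(fetch("https://example.com"))
    store(links, "links.json")
    print(f"Saved {len(links)} links")
```

Each function maps to one step. You can therefore swap in Requests or BeautifulSoup for a single step without changing the others.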

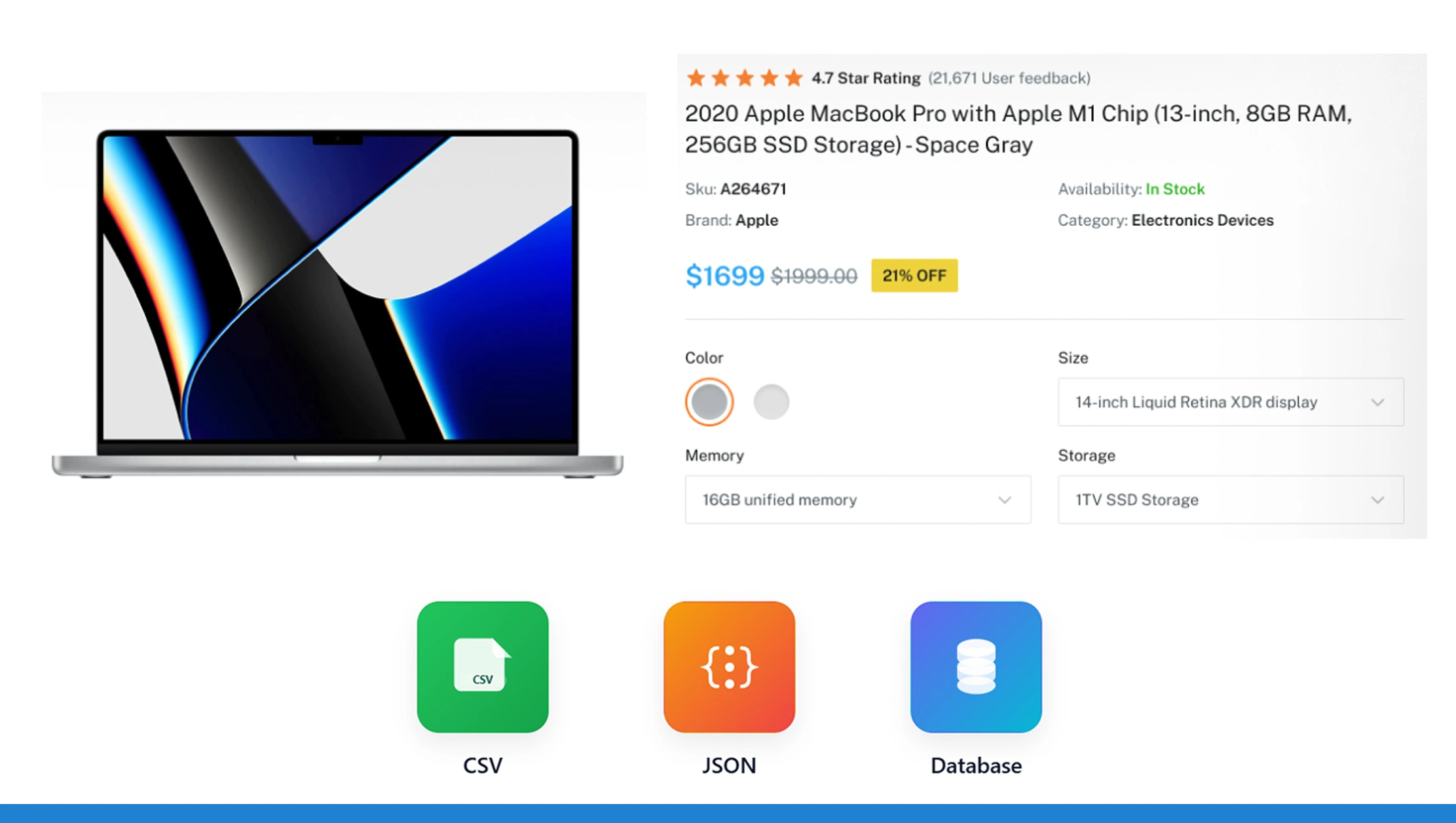

For example, scraping an e-commerce site could give you details like:

- Product names

- Prices

- Ratings

- Stock availability

Instead of manually copying this data, scrapers automate the entire process at scale.
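Scraped values usually arrive as raw strings such as "$1,299.00" or "In stock". Turning them into the fields listed above typically needs a small normalization step. This minimal sketch makes three assumptions: prices use US-style formatting (comma as thousands separator), ratings are written like "4.5 out of 5 stars", and availability appears as an "In stock" label. The `Product` class and the helper functions are hypothetical, and each real site will need its own rules.

```python
from __future__ import annotations

import re
from dataclasses import asdict, dataclass
from decimal import Decimal


@dataclass
class Product:
    name: str
    price: Decimal | None
    rating: float | None
    in_stock: bool


def parse_price(raw: str) -> Decimal | None:
    """'$1,299.00' -> Decimal('1299.00'); assumes ',' is a thousands separator."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    return Decimal(match.group().replace(",", "")) if match else None


def parse_rating(raw: str) -> float | None:
    """Take the first number in strings like '4.5 out of 5 stars'."""
    match = re.search(r"\d+(?:\.\d+)?", raw)
    return float(match.group()) if match else None


def to_product(name: str, price: str, rating: str, stock: str) -> Product:
    return Product(
        name=name.strip(),
        price=parse_price(price),
        rating=parse_rating(rating),
        # Assumes the site labels availability as 'In stock' / 'Out of stock'
        in_stock=stock.strip().lower() == "in stock",
    )


print(asdict(to_product("  Laptop ", "$1,299.00", "4.5 out of 5 stars", "In stock")))
```

Storing prices as `Decimal` rather than `float` avoids binary rounding errors if you later compare or sum them.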

Why Use Python for Web Scraping?

Python dominates the scraping ecosystem because:

- Easy to Learn: Simple syntax for beginners and professionals.

- Rich Libraries: Libraries like BeautifulSoup, Scrapy, and Requests make scraping efficient.

- Scalability: Frameworks such as Scrapy handle concurrent requests, scheduling, and retries, which makes it practical to crawl very large numbers of pages.

- Community Support: A vast developer community ensures solutions for every scraping problem.

- Integration Friendly: Works well with Web Scraping API solutions like RealDataAPI, making scraping scalable for businesses.

Python Libraries for Web Scraping

Here are the most popular Python libraries used to build scrapers:

1. Requests

Used to send HTTP requests and fetch the HTML content of web pages.

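```python
import requests

url = "https://example.com"

# A timeout prevents the request from hanging indefinitely
response = requests.get(url, timeout=10)
response.raise_for_status()  # raise an exception for 4xx/5xx status codes

print(response.text)
```

2. BeautifulSoup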

Parses HTML and XML documents to extract specific data.

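```python
from bs4 import BeautifulSoup

# `response` is the object returned by requests.get() in the previous example
soup = BeautifulSoup(response.text, "html.parser")

heading = soup.find("h1")  # None if the page has no <h1>
title = heading.get_text(strip=True) if heading else None

print(title)
```

3. Scrapy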

A powerful framework for large-scale crawling and scraping.

```python
import scrapy


class QuotesSpider(scrapy.Spider):
    name = "quotes"
    start_urls = ["http://quotes.toscrape.com"]

    def parse(self, response):
        for quote in response.css("div.quote"):
            yield {
                "text": quote.css("span.text::text").get(),
                "author": quote.css("small.author::text").get(),
            }
```

4. Selenium

Automates browsers to scrape dynamic sites built with JavaScript.

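```python
from selenium import webdriver

# Launches a real Chrome browser (Selenium 4.6+ finds a matching driver automatically)
driver = webdriver.Chrome()
try:
    driver.get("https://example.com")
    print(driver.page_source)  # HTML of the page as currently rendered by the browser
finally:
    driver.quit()  # always close the browser, even if an error occurs
```

5. Pandas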

For cleaning and storing scraped data.

```python
import pandas as pd

data = {"Product": ["Laptop"], "Price": ["$1200"]}

df = pd.DataFrame(data)

df.to_csv("products.csv", index=False)
```

Step-by-Step Guide: Building a Scraper with Python

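Let’s build a simple scraper that extracts product data from an e-commerce site. The URL and CSS class names used below are placeholders; replace them with the ones used by the site you are scraping.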

Step 1: Install Required Libraries

```bash
pip install requests beautifulsoup4 pandas
```

Step 2: Send a Request

```python
import requests
from bs4 import BeautifulSoup

url = "https://example.com/products"

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses

soup = BeautifulSoup(response.text, "html.parser")
```

Step 3: Extract Data

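```python
products = []

# ".product", ".title" and ".price" are placeholder selectors for the target site
for item in soup.select(".product"):
    title = item.select_one(".title")
    price = item.select_one(".price")
    # Skip items missing either field instead of crashing on None
    if title is None or price is None:
        continue
    products.append({
        "title": title.get_text(strip=True),
        "price": price.get_text(strip=True),
    })
```

Step 4: Save Data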

```python
import pandas as pd

df = pd.DataFrame(products)

df.to_csv("products.csv", index=False)
```

Now you have a CSV file with structured product data, ready for analysis or integration into your system.

Handling Dynamic Websites

Many modern websites are powered by JavaScript, which means the data you want often isn’t in the initial HTML response. Two common ways to handle this in Python are:

1. Selenium – Automates browsers to interact with JavaScript.

2. API Scraping – Many websites fetch data from APIs in the background. Using network inspection, you can capture these API calls and replicate them with Python’s requests library.
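Here is a sketch of approach 2. Once the browser’s network tab shows the JSON request a page makes, you can often call that endpoint directly with `requests` and skip HTML parsing entirely. The endpoint URL, the `page` query parameter, and the `items`, `name`, and `price` keys below are hypothetical placeholders; copy the real ones from the request you observe.

```python
from __future__ import annotations

from typing import Any

# Hypothetical endpoint copied from the browser's network tab
API_URL = "https://example.com/api/products"


def extract_items(payload: dict[str, Any]) -> list[dict[str, Any]]:
    """Keep only the fields we need from one page of JSON (assumed shape)."""
    return [
        {"name": item.get("name"), "price": item.get("price")}
        for item in payload.get("items", [])
    ]


def fetch_page(session: Any, page: int) -> list[dict[str, Any]]:
    """Request one page of results; `session` is expected to be a requests.Session."""
    response = session.get(
        API_URL,
        params={"page": page},  # hypothetical pagination parameter
        headers={"Accept": "application/json"},
        timeout=10,
    )
    response.raise_for_status()
    return extract_items(response.json())


if __name__ == "__main__":
    # Imported here so the helpers above can be imported without requests installed
    import requests

    with requests.Session() as session:
        for product in fetch_page(session, page=1):
            print(product)
```

Passing the session in, rather than creating it inside `fetch_page`, lets one connection pool be reused across pages. It also makes `extract_items` easy to test with a sample payload.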

For businesses, relying on Selenium scripts can be inefficient at scale, because every page load runs a full browser. Instead, solutions like RealDataAPI act as a Web Scraping API, handling dynamic content and anti-bot measures for you.

Scaling Web Scraping with Python

For small projects, Python scripts work fine. But businesses often require scraping millions of pages daily. Challenges at this scale include:

- IP bans and rate limits

- CAPTCHA solving

- Data quality and deduplication

- Infrastructure costs
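Deduplication, one of the challenges above, can be handled in plain Python by fingerprinting each record. This minimal sketch assumes that records are JSON-serializable dicts and that two records with identical fields are duplicates. At millions of pages, the in-memory `seen` set would usually be replaced by a database or key-value store.

```python
import hashlib
import json
from typing import Iterable, Iterator


def fingerprint(record: dict) -> str:
    """Stable hash of a record; sort_keys makes field order irrelevant."""
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def deduplicate(records: Iterable[dict]) -> Iterator[dict]:
    """Yield each distinct record once, preserving first-seen order."""
    seen: set[str] = set()
    for record in records:
        key = fingerprint(record)
        if key not in seen:
            seen.add(key)
            yield record


rows = [
    {"title": "Laptop", "price": "$1200"},
    {"price": "$1200", "title": "Laptop"},  # same data, different key order
    {"title": "Mouse", "price": "$25"},
]
print(list(deduplicate(rows)))
```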

This is where Enterprise Web Crawling Services come into play. With solutions like RealDataAPI, companies can scrape at scale without worrying about proxies, servers, or bot detection.

Best Practices for Web Scraping with Python

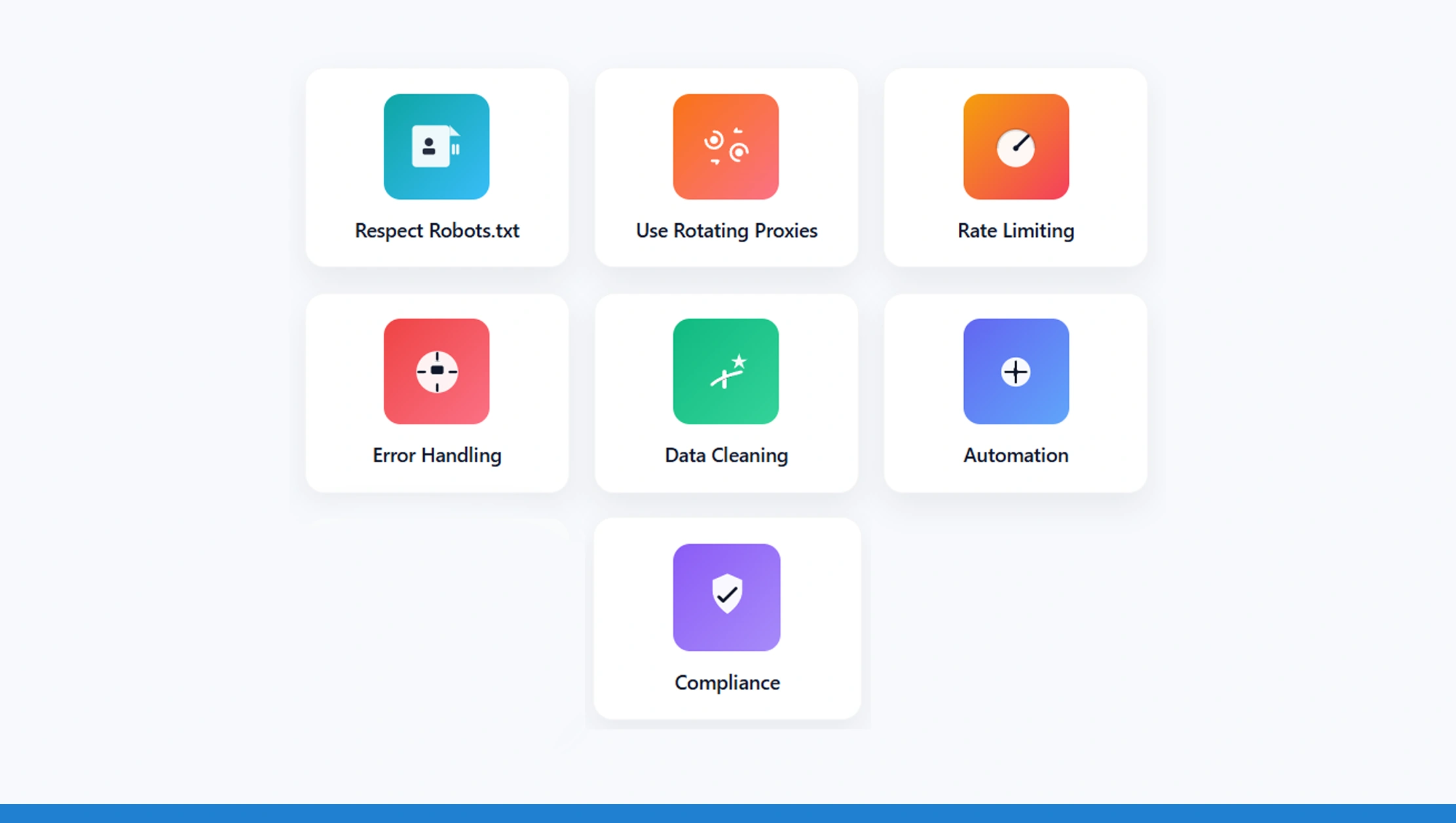

- Respect robots.txt: Check the site’s robots.txt and terms of service before scraping.

- Use Rotating Proxies: Spread requests across multiple IP addresses to reduce the risk of IP blocks.

- Rate Limiting: Don’t overload servers; add delays between requests.

- Error Handling: Handle exceptions such as timeouts, HTTP errors, and missing elements.

- Data Cleaning: Always validate and structure scraped data.

- Automation: Use schedulers (cron jobs, Airflow) to run scrapers on a schedule.

- Compliance: Ensure scraping aligns with legal and ethical standards.
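Three of these practices (respecting robots.txt, rate limiting, and error handling) fit naturally into one small wrapper. The sketch below uses the standard library’s `urllib.robotparser`, a minimum delay between requests, and exponential backoff on network errors. The `PoliteFetcher` class, the `example-scraper` user agent, the one-second default delay, and the retry count are illustrative assumptions. `fetch` is whatever function downloads a page, for example `lambda u: requests.get(u, timeout=10).text`.

```python
from __future__ import annotations

import time
from typing import Callable
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper"  # hypothetical bot name


def load_robots(lines: list[str]) -> RobotFileParser:
    """Parse robots.txt content already downloaded from https://<site>/robots.txt."""
    robots = RobotFileParser()
    robots.parse(lines)
    return robots


class PoliteFetcher:
    def __init__(
        self,
        robots: RobotFileParser,
        fetch: Callable[[str], str],
        delay: float = 1.0,
        retries: int = 3,
    ) -> None:
        self.robots = robots
        self.fetch = fetch
        # Prefer the site's Crawl-delay directive when it sets one
        self.delay = robots.crawl_delay(USER_AGENT) or delay
        self.retries = retries
        self._last_request = float("-inf")  # no wait before the first request

    def get(self, url: str) -> str | None:
        if not self.robots.can_fetch(USER_AGENT, url):
            return None  # disallowed by robots.txt
        for attempt in range(self.retries):
            # Rate limiting: keep at least `delay` seconds between requests
            wait = self.delay - (time.monotonic() - self._last_request)
            if wait > 0:
                time.sleep(wait)
            self._last_request = time.monotonic()
            try:
                return self.fetch(url)
            except OSError:  # timeouts and connection errors
                if attempt == self.retries - 1:
                    raise
                time.sleep(2 ** attempt)  # exponential backoff: 1s, 2s, 4s, ...
        return None
```

Catching `OSError` covers timeouts and connection failures from both `urllib` and Requests, since Requests’ exceptions derive from `IOError`.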

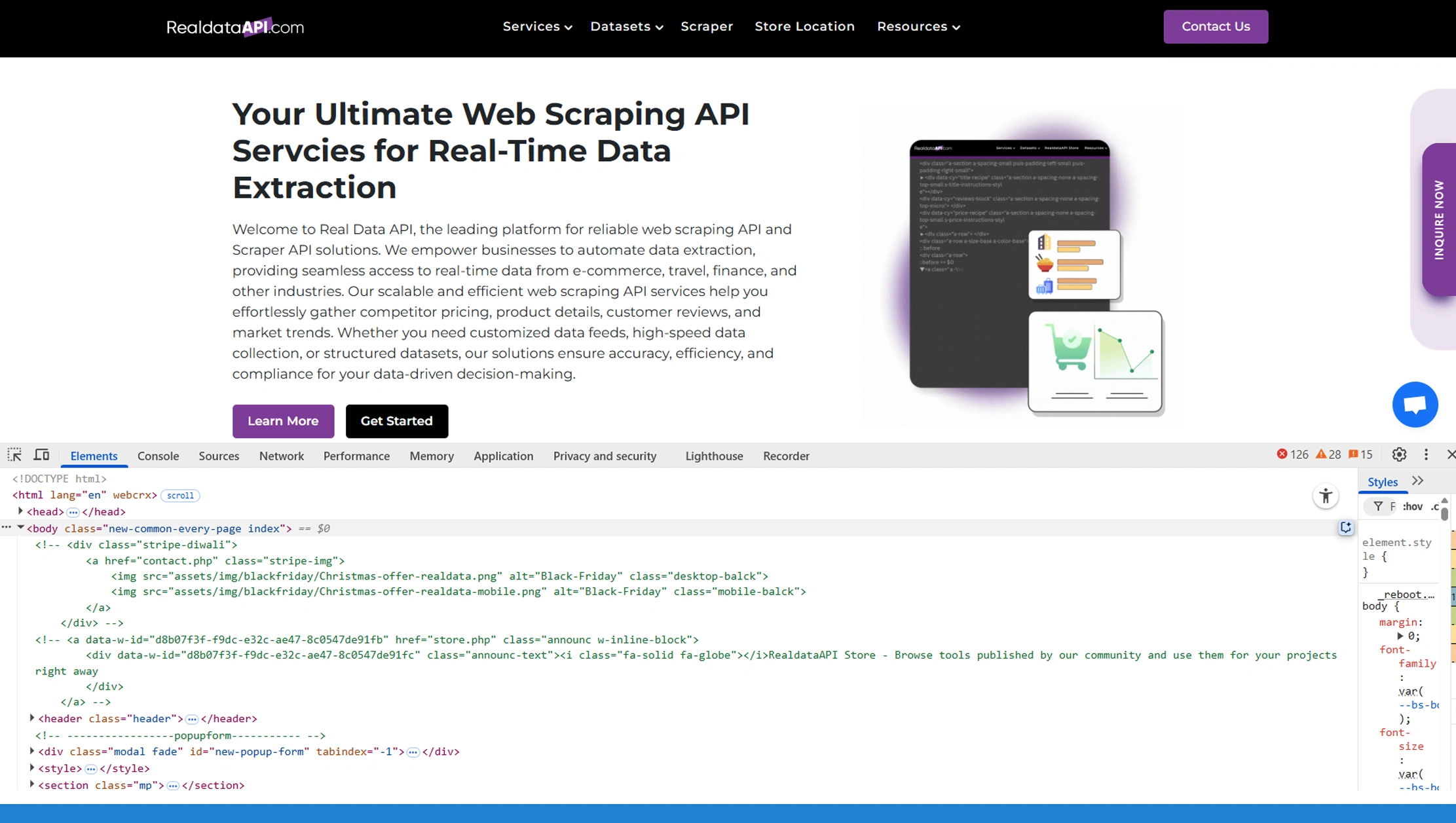

RealDataAPI: Web Scraping Simplified

While Python is powerful for scraping, building and maintaining scrapers at scale is resource-intensive. That’s why businesses rely on RealDataAPI.

Why RealDataAPI?

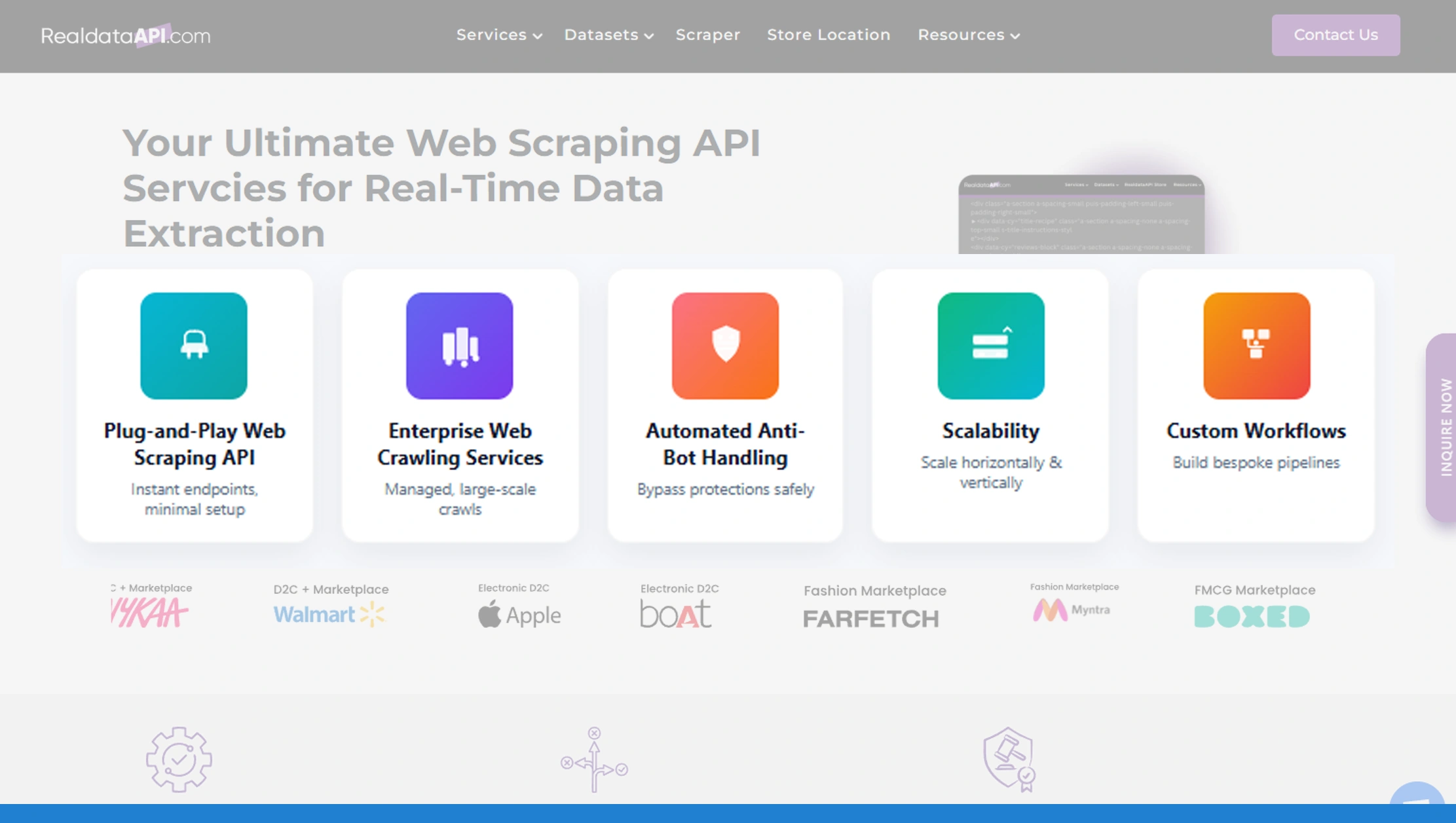

- Plug-and-Play Web Scraping API: Extract structured data with simple API calls.

- Enterprise Web Crawling Services: Scrape millions of pages across industries.

- Automated Anti-Bot Handling: Built-in proxies, CAPTCHA solving, and session management.

- Scalability: From 100 pages to 100 million.

- Custom Workflows: Extract exactly the data you need.

Instead of writing and debugging complex Python scripts, companies can simply integrate RealDataAPI into their systems and start receiving ready-to-use data.

Use Cases of Web Scraping with Python & RealDataAPI

- E-commerce Intelligence

Scrape competitor prices, reviews, and stock availability to build dynamic pricing strategies.

- Job Market Analysis

Gather job postings from multiple portals to identify hiring trends.

- Real Estate Insights

Extract property listings and rental trends for market research.

- Travel Aggregation

Scrape flight and hotel data to build comparison platforms.

- Finance & Investment

Monitor stock tickers, financial reports, and news sentiment.

When to Use Python Scripts vs. RealDataAPI?

| Requirement | Python Scripts | RealDataAPI |
|---|---|---|
| Small projects | ✅ | ✅ |
| Handling CAPTCHAs | ❌ Manual setup | ✅ Automated |
| Scaling to millions | ❌ Difficult | ✅ Scalable |
| Maintenance | ❌ Frequent updates | ✅ Managed |
| Output format | Custom coding needed | Ready JSON/CSV |

For individuals or hobby projects, Python scripts are perfect. For enterprises, Web Scraping Services like RealDataAPI save time, money, and effort.

Future of Web Scraping

The future of scraping is moving towards API-first solutions. Instead of writing one-off scrapers, businesses are adopting Web Scraping APIs that offer:

- Prebuilt scraping logic

- Automated error handling

- Scalable infrastructure

- Compliance monitoring

This trend lets companies focus on analyzing data rather than spending resources on extracting it.

Conclusion

Python remains the most versatile language for web scraping. From beginners learning BeautifulSoup to enterprises scaling with Scrapy clusters, Python powers the world of data extraction. But as scraping needs grow, so does the complexity.

That’s why RealDataAPI exists—to take the hassle out of scraping. With its Web Scraping API and Enterprise Web Crawling Services, RealDataAPI delivers high-quality, structured data at scale, allowing businesses to focus on what truly matters: insights and growth.

Whether you’re building your first Python scraper or running global data pipelines, combining Python with RealDataAPI gives you the best of both worlds—flexibility, scalability, and reliability.

Start small, experiment with Python scrapers, and when you’re ready to scale, let RealDataAPI power your data-driven future!