Introduction

Web scraping has quickly become one of the most powerful techniques in the digital world for extracting, analyzing, and repurposing data from websites. Whether you’re a developer, data analyst, or business strategist, scraping data from the web can unlock valuable insights that drive smarter decisions.

Go (or Golang), Google’s open-source programming language, is increasingly popular for web scraping. Its speed, simplicity, and built-in concurrency make it a good fit for scraping projects, from small-scale scripts to Enterprise Web Crawling Services.

In this comprehensive guide, we’ll walk you through everything you need to know about web scraping with Go. From setting up your environment to building your first scraper, handling dynamic sites, and integrating with a Web Scraping API like RealDataAPI, this tutorial is perfect for both beginners and intermediate developers.

Why Use Go for Web Scraping?

Before diving into code, let’s explore why Go has become such a strong option for scraping:

Performance & Speed

Go compiles to native machine code, so CPU-bound work such as parsing HTML typically runs faster than in interpreted languages like Python or Ruby. When you scrape large datasets or crawl many pages, that difference adds up.

Concurrency Made Easy

Go’s goroutines let a scraper fetch thousands of pages concurrently without the complexity of traditional multithreading. This is vital for Enterprise Web Crawling Services, where scalability is key.

Simplicity & Readability

Go’s syntax is clean and beginner-friendly, making it easier to build and maintain scrapers over time.

Strong Community & Libraries

Libraries like Colly and Goquery make it possible to scrape data with minimal setup while handling modern web structures effectively.

Setting Up Your Go Environment

Before you begin building your scraper, you’ll need a proper Go setup.

Install Go

Download and install Go from the official website.

Verify installation:

go version

Create a Project Folder

mkdir go-scraper

cd go-scraper

Initialize the Go Module

go mod init go-scraper

Install Dependencies

We’ll use two key libraries:

Colly → A powerful scraping framework.

Goquery → Provides jQuery-like syntax for HTML parsing.

Install them:

go get github.com/gocolly/colly

go get github.com/PuerkitoBio/goquery

Writing Your First Web Scraper in Go

Let’s start with a simple example using Colly.

Example: Scraping Quotes from a Website

    package main

    import (
        "fmt"
        "log"

        "github.com/gocolly/colly"
    )

    func main() {
        // Create a new collector
        c := colly.NewCollector()

        // Extract quotes from <span class="text">
        c.OnHTML("span.text", func(e *colly.HTMLElement) {
            fmt.Println("Quote:", e.Text)
        })

        // Extract author names
        c.OnHTML("small.author", func(e *colly.HTMLElement) {
            fmt.Println("Author:", e.Text)
        })

        // Handle errors
        c.OnError(func(_ *colly.Response, err error) {
            log.Println("Something went wrong:", err)
        })

        // Visit target website and check the returned error
        if err := c.Visit("http://quotes.toscrape.com"); err != nil {
            log.Fatal(err)
        }
    }

How It Works:

- Collector: Colly manages crawling and parsing.

- OnHTML: Defines what data to capture from the page.

- Visit: Starts crawling the given URL.

Run the scraper:

go run main.go

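You’ll see the quotes printed in your terminal, followed by the authors. Colly runs each OnHTML callback over the whole page in the order it was registered, so the two lists are not interleaved.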

Scraping Multiple Pages

Real-world scrapers often need to handle pagination. With Colly, it’s simple:

    // Register this handler before the initial c.Visit call
    c.OnHTML("li.next a", func(e *colly.HTMLElement) {
        nextPage := e.Request.AbsoluteURL(e.Attr("href"))
        c.Visit(nextPage)
    })

This automatically follows the “next” button until there are no more pages left.

Using Goquery for Advanced HTML Parsing

Colly handles crawling, but sometimes you want more control over parsing. That’s where Goquery shines.

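    package main

    import (
        "fmt"
        "log"
        "net/http"

        "github.com/PuerkitoBio/goquery"
    )

    func main() {
        res, err := http.Get("http://quotes.toscrape.com")
        if err != nil {
            log.Fatal(err)
        }
        defer res.Body.Close()

        // Stop early on a non-200 response instead of parsing an error page
        if res.StatusCode != http.StatusOK {
            log.Fatalf("unexpected status: %s", res.Status)
        }

        // Parse the response body into a queryable document
        doc, err := goquery.NewDocumentFromReader(res.Body)
        if err != nil {
            log.Fatal(err)
        }

        // Print the text of every <span class="text"> element
        doc.Find("span.text").Each(func(i int, s *goquery.Selection) {
            quote := s.Text()
            fmt.Println("Quote:", quote)
        })
    }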

With Goquery, you can select and manipulate HTML elements using jQuery-style selectors.

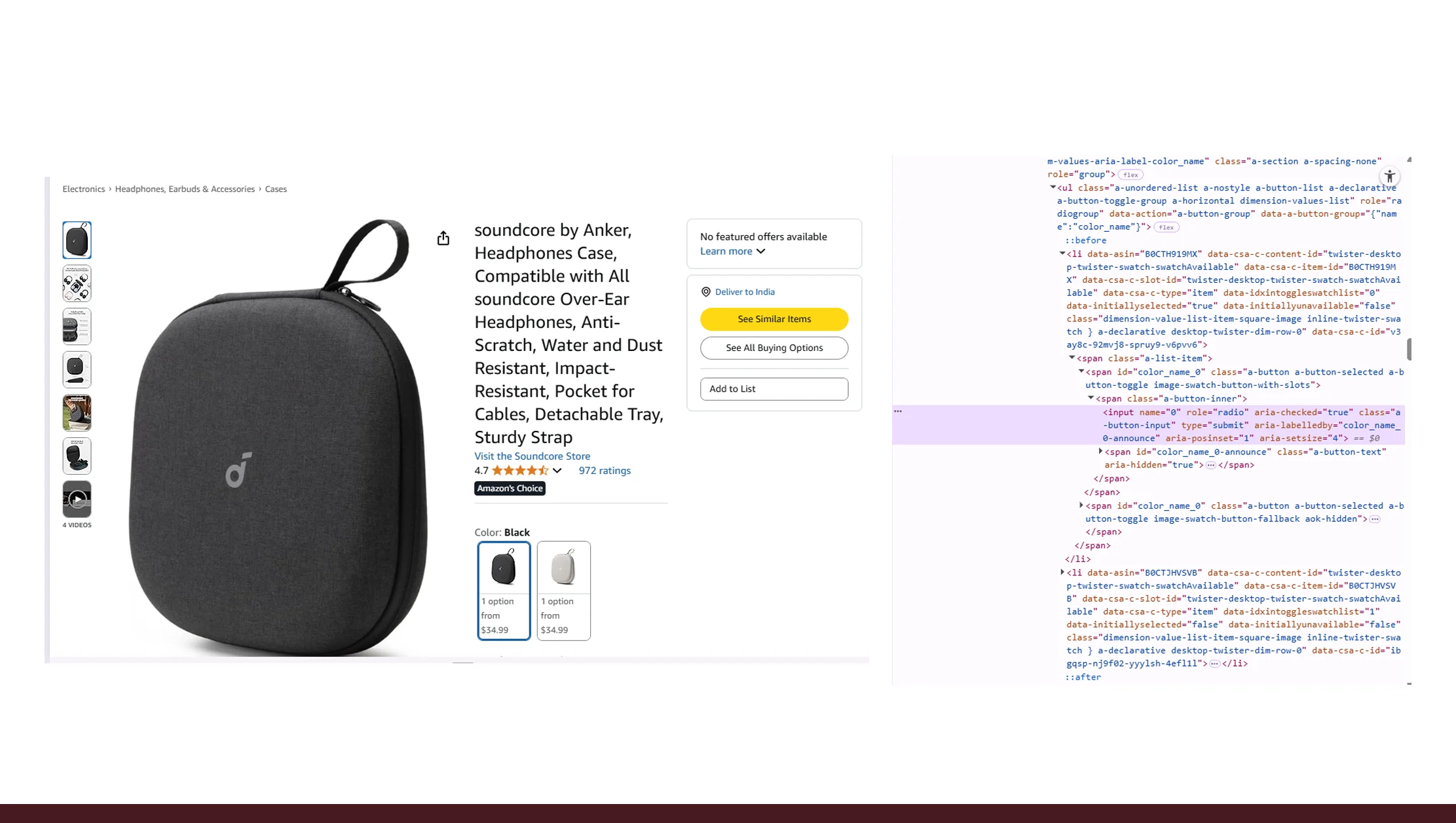

Handling Dynamic Websites with Go

Many modern websites use JavaScript to load data dynamically, which traditional scrapers can’t handle directly. For these sites, you have three options:

Scrape API Endpoints

Inspect network requests to find the API powering the site and fetch JSON directly.

Use a Headless Browser

Tools like chromedp let you control a headless Chrome browser in Go:

go get github.com/chromedp/chromedp

Then import it in your code:

import "github.com/chromedp/chromedp"

With chromedp, you can navigate, click, and extract data from JavaScript-heavy sites.

Integrate with a Web Scraping API

Services like RealDataAPI simplify this by handling dynamic rendering, IP rotation, and anti-bot protection for you.

Avoiding Getting Blocked

When scraping at scale, websites often try to detect and block bots. To avoid this:

- Set User-Agents

- Rotate Proxies/IPs

- Throttle Requests

- Respect Robots.txt

Colly allows customization:

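    c.UserAgent = "Mozilla/5.0 (compatible; MyScraper/1.0)"

    // Delay is a time.Duration, so a bare 2 would mean 2 nanoseconds (requires importing "time")
    c.Limit(&colly.LimitRule{DomainGlob: "*", Parallelism: 2, Delay: 2 * time.Second})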

Scaling Up with RealDataAPI

When your project grows beyond a simple script, maintaining proxies, handling captchas, and scaling crawlers can get complex. That’s where RealDataAPI comes in.

Web Scraping Services offered by RealDataAPI simplify scraping at scale.

With Enterprise Web Crawling Services, businesses can extract millions of records efficiently.

A dedicated Web Scraping API ensures clean, structured data delivery without worrying about infrastructure.

By integrating RealDataAPI into your Go scraper, you can focus on analyzing data instead of dealing with scraping hurdles.

Example integration:

res, err := http.Get("https://api.realdataapi.com/scrape?url....com")

This way, RealDataAPI handles the crawling while you just consume structured JSON.

Storing Scraped Data

Once you’ve scraped the data, you’ll likely need to save it for analysis.

Options include:

- CSV/JSON Files

- Databases (MySQL, PostgreSQL, MongoDB)

- Cloud Storage (AWS S3, GCP Storage)

Example: Save data to CSV:

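    file, err := os.Create("quotes.csv")
    if err != nil {
        log.Fatal(err)
    }
    defer file.Close()

    writer := csv.NewWriter(file)
    defer writer.Flush() // runs before file.Close, so buffered rows reach the file

    writer.Write([]string{"Quote", "Author"})
    writer.Write([]string{"Life is short", "Author Name"})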

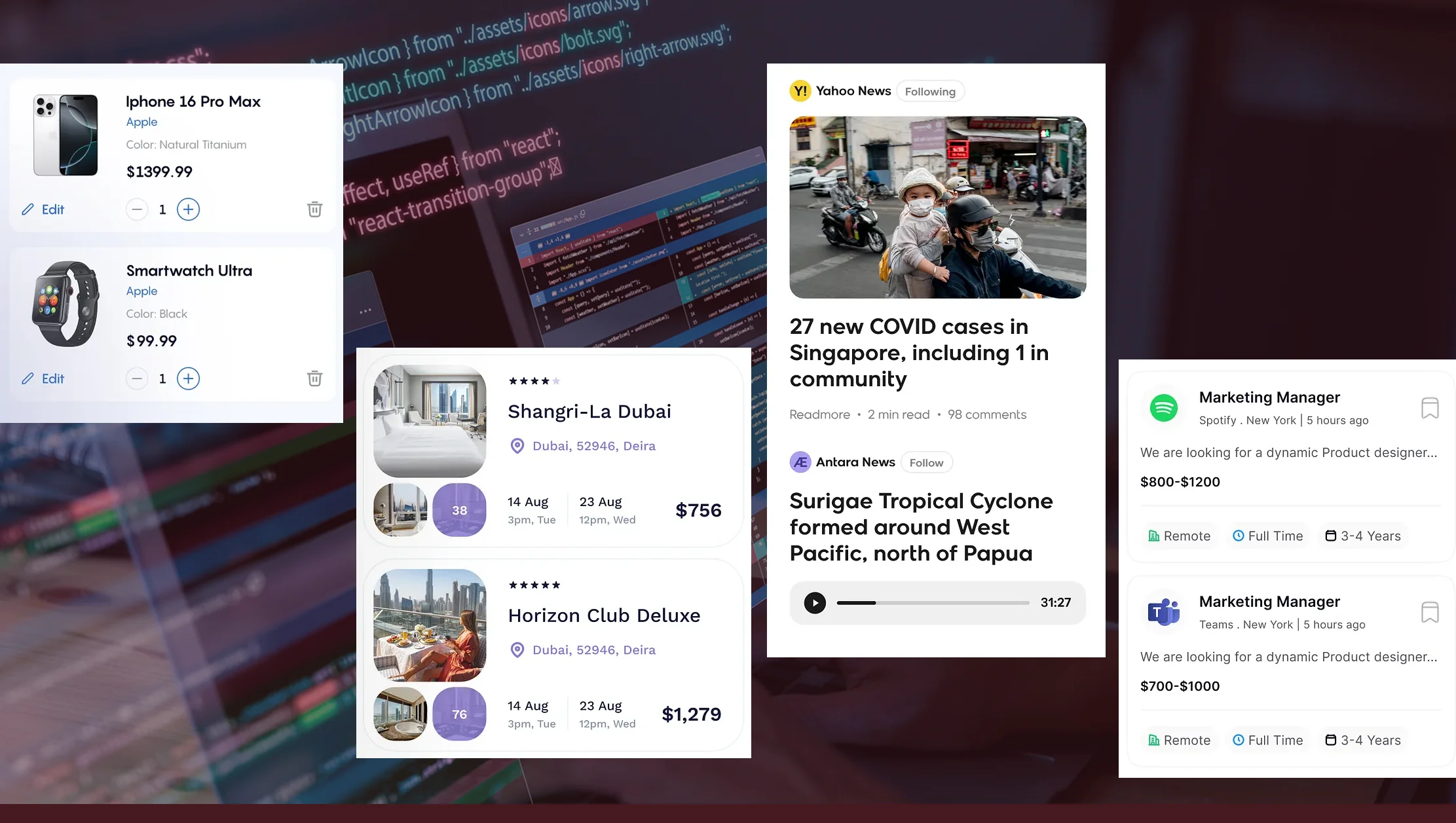

Real-World Use Cases of Web Scraping in Go

E-commerce Monitoring

Track competitor prices, product availability, and customer reviews.

Travel Aggregation

Collect flight, hotel, and rental data for price comparison platforms.

Job Market Analysis

Scrape job postings to analyze salary trends and demand.

News & Media Scraping

Aggregate headlines and articles for sentiment analysis.

Data Science Projects

Feed scraped data into ML models for predictions and insights.

Best Practices for Web Scraping in Go

- Start small and test frequently.

- Use concurrency carefully to avoid overwhelming target servers.

- Implement error handling and retry logic.

- Always check the legal aspects of scraping the target website.

For large-scale projects, integrate with RealDataAPI for reliable scraping infrastructure.

Conclusion

Web scraping in Go is a powerful way to collect data quickly and efficiently. With libraries like Colly, Goquery, and chromedp, developers can build scrapers ranging from simple scripts to large-scale crawlers.

However, managing scraping infrastructure at scale can be complex. That’s why businesses often rely on Web Scraping Services, Enterprise Web Crawling Services, or a dedicated Web Scraping API like RealDataAPI.

If you’re just starting, practice by scraping small websites. As your needs grow, Go’s speed and concurrency will make it an excellent tool to scale. And when you’re ready for enterprise-level data extraction, RealDataAPI will be your best partner.
