Introduction

Web scraping has become an essential technique for businesses, researchers, and developers who want to collect structured data from websites. While Python, JavaScript, and PHP are the most common languages for scraping, Elixir—a functional, concurrent, and fault-tolerant language—offers unique advantages that make it a great choice for web scraping at scale.

In this post, we’ll walk through how to start web scraping with Elixir: its benefits, the main tools and libraries, a step-by-step scraping example, and how it compares with other languages. By the end, you’ll have the knowledge to confidently begin scraping with Elixir and integrate it into your data extraction workflows.

We’ll also highlight how you can complement your efforts using advanced Web Scraping Services, Enterprise Web Crawling Services, and modern Web Scraping API solutions like RealDataAPI for large-scale data requirements.

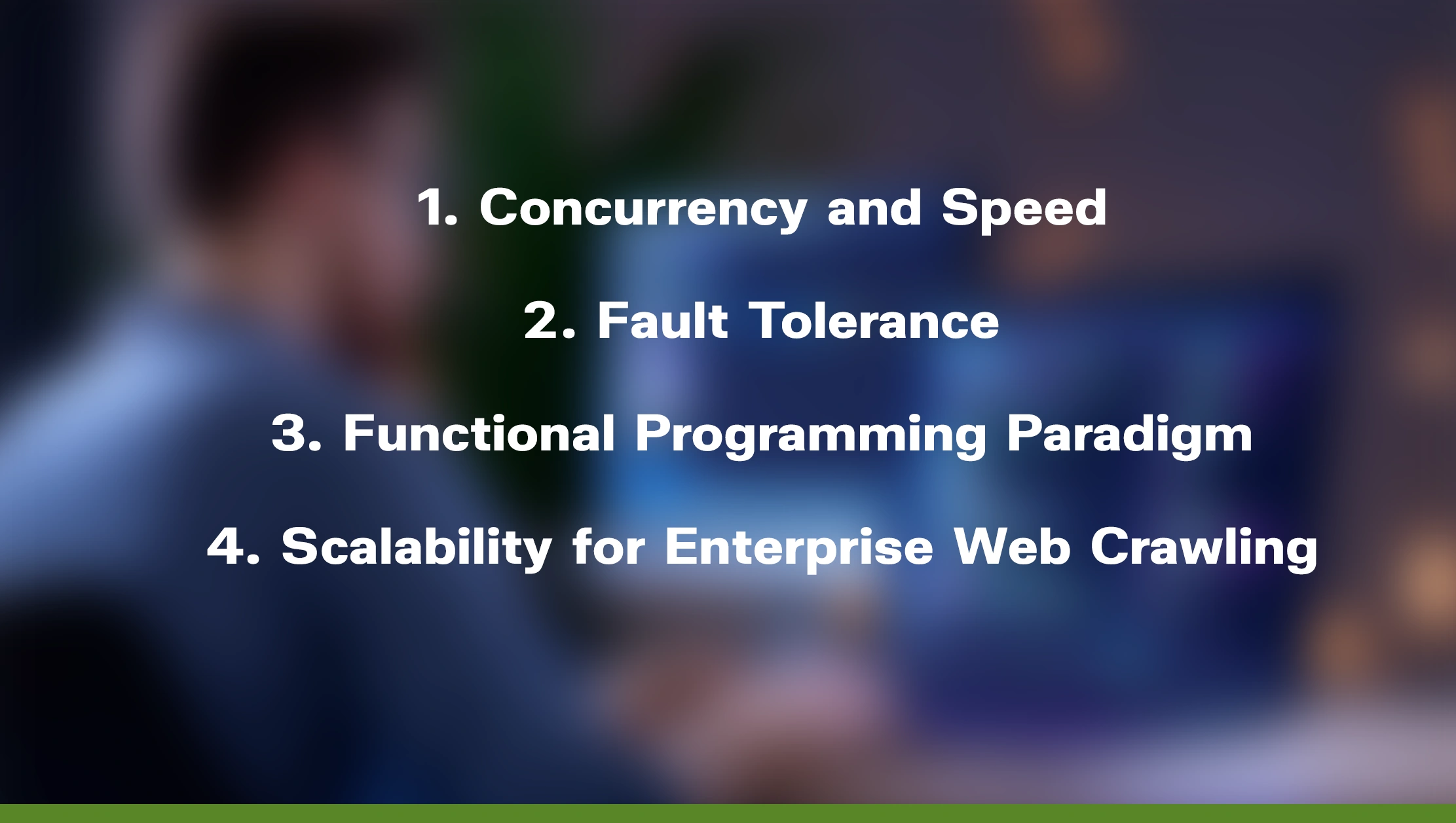

Why Choose Elixir for Web Scraping?

Before diving into the technical details, let’s explore why Elixir is a strong candidate for scraping projects:

1. Concurrency and Speed

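Elixir runs on the Erlang VM (BEAM), which is designed for massive concurrency. Each request can run in its own lightweight process, so fetching thousands of pages at once is practical. In most cases the bottleneck becomes the network and the target site’s rate limits rather than the language runtime.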
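As a rough illustration of that concurrency model, the sketch below fans a list of URLs out over lightweight processes with `Task.async_stream/3` from the standard library. The module name `ConcurrentFetch`, the `fetch_fun` argument, and the default limit of 50 are assumptions made for this example; in a real scraper `fetch_fun` would wrap an HTTP client call.

```elixir
defmodule ConcurrentFetch do
  @moduledoc "Illustrative sketch: run a fetch function over many URLs concurrently."

  # `fetch_fun` is a hypothetical one-argument function (url -> result).
  # It should return error tuples rather than raise, because async_stream
  # tasks are linked to the caller.
  # `max_concurrency` caps how many requests are in flight at once.
  def fetch_all(urls, fetch_fun, max_concurrency \\ 50) when is_list(urls) do
    results =
      urls
      |> Task.async_stream(fetch_fun,
        max_concurrency: max_concurrency,
        timeout: 10_000,
        on_timeout: :kill_task
      )
      |> Enum.to_list()

    # async_stream preserves input order by default, so results line up with urls.
    urls
    |> Enum.zip(results)
    |> Enum.map(fn
      {url, {:ok, result}} -> {url, result}
      {url, {:exit, reason}} -> {url, {:error, reason}}
    end)
  end
end

# Stand-in for a real HTTP call so the sketch runs without network access.
fake_fetch = fn url -> {:ok, String.length(url)} end

["https://example.com/a", "https://example.com/b"]
|> ConcurrentFetch.fetch_all(fake_fetch)
|> IO.inspect()
```

Capping `max_concurrency` matters as much as raising it: it is the simplest way to keep a fast scraper from hammering a single site.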

2. Fault Tolerance

Web scraping often involves unexpected issues like server timeouts, rate limiting, and broken HTML. Elixir’s supervision model lets a failed request crash its own process and be restarted or retried without bringing down the rest of the scraper.
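One way to picture this isolation is a supervised task: if the work crashes, the caller gets an error value back instead of crashing too. The sketch below uses `Task.Supervisor.async_nolink/2` and `Task.yield/2` from the standard library; the module name `ResilientFetch` and the `fetch_fun` argument are assumptions for illustration only.

```elixir
defmodule ResilientFetch do
  @moduledoc "Illustrative sketch: isolate a risky fetch inside a supervised task."

  # Runs the hypothetical `fetch_fun.(url)` in a task that is NOT linked to
  # the caller, so a crash inside the task is reported as {:error, ...}.
  def safe_fetch(supervisor, url, fetch_fun, timeout \\ 5_000) do
    task = Task.Supervisor.async_nolink(supervisor, fn -> fetch_fun.(url) end)

    # Wait for the result; if the task is still running at the timeout, shut it down.
    case Task.yield(task, timeout) || Task.shutdown(task) do
      {:ok, result} -> {:ok, result}
      {:exit, reason} -> {:error, {:crashed, reason}}
      nil -> {:error, :timeout}
    end
  end
end

{:ok, sup} = Task.Supervisor.start_link()

# A fetch that blows up; the calling process keeps running.
# (The crash is also logged by the task itself.)
ResilientFetch.safe_fetch(sup, "https://example.com", fn _url -> raise "broken HTML" end)
|> IO.inspect()
```

In a real application the `Task.Supervisor` would normally be started under your application's supervision tree rather than with a bare `start_link/0`.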

3. Functional Programming Paradigm

Functional programming makes it easier to write clean, testable, and maintainable scraping code. Data transformations are often simpler with immutable structures.
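To make that concrete, here is a minimal sketch of a cleaning step written as a pipeline of small transformations over immutable data. The module name `TitleCleaner` and the sample input are made up for this example.

```elixir
defmodule TitleCleaner do
  @moduledoc "Illustrative sketch: clean scraped strings with a pipeline."

  # Takes raw scraped strings and returns a cleaned, de-duplicated list.
  # Each step returns a new list; the input is never modified.
  def clean(raw_titles) do
    raw_titles
    |> Enum.map(&String.trim/1)
    |> Enum.reject(&(&1 == ""))
    |> Enum.map(&String.replace(&1, ~r/\s+/, " "))
    |> Enum.uniq()
  end
end

TitleCleaner.clean(["  Hello   World ", "", "Elixir", "Elixir\n"])
|> IO.inspect()
```

Because every step is a pure function, each one can be unit tested on plain lists without touching the network.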

4. Scalability for Enterprise Web Crawling

For enterprises that need large-scale data extraction, Elixir shines. Because BEAM nodes can be clustered, you can design distributed crawlers that handle millions of requests, which is the kind of workload Enterprise Web Crawling Services are built for.

Core Libraries and Tools for Web Scraping in Elixir

Unlike Python (with BeautifulSoup, Scrapy) or JavaScript (with Puppeteer, Cheerio), Elixir has its own ecosystem for scraping:

- HTTPoison – A popular HTTP client for making GET/POST requests.

- Floki – An HTML parser (similar to BeautifulSoup in Python).

- Crawly – A fully featured crawling framework for Elixir (similar to Scrapy).

- Tesla – A flexible HTTP client with middleware support.

- Wallaby / Hound – For headless browser automation when dealing with JavaScript-heavy sites.

By combining these tools, you can build powerful scrapers that rival those made in other programming languages.

Step-by-Step Guide to Web Scraping with Elixir

Now let’s go through a practical example of scraping a website using Elixir.

1. Setup Elixir Project

First, create a new Elixir project:

```shell
mix new web_scraper
cd web_scraper
```

Add dependencies to mix.exs:

```elixir
defp deps do
  [
    {:httpoison, "~> 1.8"},
    {:floki, "~> 0.34"}
  ]
end
```

Install dependencies:

```shell
mix deps.get
```

2. Fetch Web Page Content

Using HTTPoison to send requests:

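```elixir
defmodule WebScraper do
  # Fetches a page and returns {:ok, body} on HTTP 200, {:error, reason} otherwise.
  def fetch_page(url) do
    case HTTPoison.get(url) do
      {:ok, %HTTPoison.Response{status_code: 200, body: body}} ->
        {:ok, body}

      # The request succeeded, but the server returned a non-200 status.
      {:ok, %HTTPoison.Response{status_code: status}} ->
        {:error, "Failed with status: #{status}"}

      # Transport-level failure (timeout, DNS error, refused connection, ...).
      {:error, %HTTPoison.Error{reason: reason}} ->
        {:error, reason}
    end
  end
end
```

3. Parse HTML Content with Floki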

Extract specific data (e.g., article titles):

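```elixir
defmodule Parser do
  # Returns the text of every <h2 class="title"> element as one comma-separated string.
  def extract_titles(html) do
    html
    |> Floki.parse_document!()
    |> Floki.find("h2.title")
    |> Floki.text(sep: ", ")
  end
end
```

4. Combine Fetching and Parsing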

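```elixir
defmodule Scraper do
  def run(url) do
    case WebScraper.fetch_page(url) do
      {:ok, body} ->
        titles = Parser.extract_titles(body)
        IO.puts("Extracted Titles: #{titles}")

      {:error, reason} ->
        # reason can be an atom or a tuple, so inspect/1 is safer than
        # interpolating it directly.
        IO.puts("Error: #{inspect(reason)}")
    end
  end
end
```

Run the scraper (for example, from an `iex -S mix` session):

```elixir
Scraper.run("https://example.com/blog")
```

5. Scaling with Crawly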

If you need enterprise-grade scraping, Crawly provides:

- Distributed crawling support

- Data pipelines (cleaning, storing)

- Configurable middlewares

- Integration with databases and queues
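Middlewares and pipelines are declared in the application config. The fragment below is a sketch of what that might look like in `config/config.exs`; the module and option names follow Crawly's documented configuration, but the values (concurrency, user agent string, output folder) are placeholders, and you should check the names against the Crawly version you install.

```elixir
import Config

config :crawly,
  # Politeness and stop conditions (placeholder values).
  concurrent_requests_per_domain: 4,
  closespider_timeout: 10,
  closespider_itemcount: 100,
  # Middlewares run on each outgoing request.
  middlewares: [
    Crawly.Middlewares.DomainFilter,
    Crawly.Middlewares.UniqueRequest,
    {Crawly.Middlewares.UserAgent, user_agents: ["ExampleBot/1.0"]}
  ],
  # Pipelines run on each scraped item, in order.
  pipelines: [
    {Crawly.Pipelines.Validate, fields: [:title]},
    {Crawly.Pipelines.DuplicatesFilter, item_id: :title},
    Crawly.Pipelines.JSONEncoder,
    {Crawly.Pipelines.WriteToFile, extension: "jl", folder: "/tmp"}
  ]
```

With a config like this, items missing a `:title` are dropped, duplicates are filtered, and the rest are written as JSON lines to a file.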

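Example (basic Crawly crawler; this assumes `:crawly` has been added to the deps in mix.exs):

```elixir
defmodule ExampleCrawler do
  use Crawly.Spider

  @impl Crawly.Spider
  def base_url(), do: "https://example.com"

  @impl Crawly.Spider
  def init(), do: [start_urls: ["https://example.com"]]

  @impl Crawly.Spider
  def parse_item(response) do
    {:ok, document} = Floki.parse_document(response.body)
    titles = document |> Floki.find("h2.title") |> Floki.text()

    # Crawly expects a Crawly.ParsedItem struct: the scraped items plus
    # any follow-up requests (none in this basic example).
    %Crawly.ParsedItem{items: [%{title: titles}], requests: []}
  end
end
```

Handling JavaScript-heavy Websites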

Some websites load content dynamically via JavaScript. An HTTP client plus an HTML parser will not see that content, because nothing executes the page’s scripts. You can use:

- Wallaby or Hound (browser automation libraries).

- Headless Chrome (via Puppeteer) triggered from Elixir.

Or rely on Web Scraping API solutions like RealDataAPI, which automatically handle JavaScript rendering, CAPTCHAs, and proxies.

Data Storage Options in Elixir Scraping

Collected data must be stored efficiently:

- PostgreSQL/MySQL for structured storage.

- MongoDB for semi-structured JSON data.

- CSV/Excel files for simple exports.

- Elasticsearch for search-friendly datasets.

Crawly’s item pipelines are the natural place to write scraped items to any of these stores, which makes it a good fit for Enterprise Web Crawling Services.
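For the simplest of these options, a CSV export, the standard library is enough. The sketch below is one possible approach; the module name `CsvExport`, the column list, and the output path are assumptions for illustration, and a dedicated CSV library is a better choice for large or messy datasets.

```elixir
defmodule CsvExport do
  @moduledoc "Illustrative sketch: write scraped maps to a CSV file."

  # Quote a field only when it contains a comma, quote, or line break,
  # doubling any embedded quotes.
  def escape(value) do
    text = to_string(value)

    if String.contains?(text, [",", "\"", "\n", "\r"]) do
      "\"" <> String.replace(text, "\"", "\"\"") <> "\""
    else
      text
    end
  end

  # Builds the CSV text: one header row, then one row per item.
  # Missing keys become empty fields.
  def to_csv(items, columns) do
    header = Enum.map_join(columns, ",", &escape/1)

    rows =
      Enum.map(items, fn item ->
        Enum.map_join(columns, ",", fn col -> escape(Map.get(item, col, "")) end)
      end)

    Enum.join([header | rows], "\n") <> "\n"
  end

  def write!(path, items, columns), do: File.write!(path, to_csv(items, columns))
end

items = [%{title: "Widget, large", price: 9.99}, %{title: "Gadget", price: 4.5}]
IO.puts(CsvExport.to_csv(items, [:title, :price]))
```

Calling `CsvExport.write!("titles.csv", items, [:title, :price])` would write the same text to disk.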

Best Practices for Web Scraping in Elixir

- Respect robots.txt – Always check if scraping is allowed.

- Use request throttling – Avoid overwhelming target servers.

- Rotate proxies and user-agents – Prevent IP bans.

- Implement retries – Handle failed requests gracefully.

- Store and clean data – Ensure your data pipeline removes duplicates and errors.
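Two of these practices, throttling and retries, can be sketched with nothing but the standard library. The module name `PoliteFetch`, the option names, and the default delays below are assumptions for this example; `fetch_fun` stands in for whatever function actually performs the request and is expected to return `{:ok, body}` or `{:error, reason}`.

```elixir
defmodule PoliteFetch do
  @moduledoc "Illustrative sketch: retries with exponential backoff plus a pause between requests."

  # Tries the hypothetical `fetch_fun.(url)` up to :max_attempts times,
  # doubling the wait after each failure.
  def with_retries(url, fetch_fun, opts \\ []) do
    max_attempts = Keyword.get(opts, :max_attempts, 3)
    base_delay_ms = Keyword.get(opts, :base_delay_ms, 500)
    attempt(url, fetch_fun, max_attempts, base_delay_ms)
  end

  defp attempt(url, fetch_fun, attempts_left, delay_ms) do
    case fetch_fun.(url) do
      {:ok, body} ->
        {:ok, body}

      # Still have attempts left: wait, then retry with a longer delay.
      {:error, _reason} when attempts_left > 1 ->
        Process.sleep(delay_ms)
        attempt(url, fetch_fun, attempts_left - 1, delay_ms * 2)

      # Out of attempts: return the last error.
      {:error, reason} ->
        {:error, reason}
    end
  end

  # Fetches URLs one at a time, pausing :pause_ms after each request
  # so the target server is not overwhelmed.
  def fetch_throttled(urls, fetch_fun, opts \\ []) do
    pause_ms = Keyword.get(opts, :pause_ms, 1_000)

    Enum.map(urls, fn url ->
      result = with_retries(url, fetch_fun, opts)
      Process.sleep(pause_ms)
      {url, result}
    end)
  end
end
```

With the `fetch_page/1` function from the step-by-step guide, a call might look like `PoliteFetch.fetch_throttled(urls, &WebScraper.fetch_page/1, pause_ms: 2_000)`. Note that this sketch retries every error, including 404s; a production version would retry only transient failures such as timeouts.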

When to Use RealDataAPI for Web Scraping?

While Elixir is powerful, building and maintaining scrapers takes time. For businesses that need reliable, scalable, and real-time data extraction, APIs like RealDataAPI offer a faster solution.

Benefits of Using RealDataAPI:

- Pre-built scrapers for eCommerce, travel, real estate, jobs, and more.

- Handles CAPTCHAs, proxies, and dynamic rendering.

- Scalable for Enterprise Web Crawling Services.

- Easy integration with any tech stack (Elixir, Python, Java, etc.).

- Real-time delivery of structured data via a Web Scraping API.

This allows you to focus on data analysis and insights rather than infrastructure.

Elixir vs. Other Languages for Web Scraping

| Feature | Elixir | Python | Node.js | Ruby | C# |
|---|---|---|---|---|---|
| Concurrency | Excellent | Moderate | Good | Average | Good |
| Ecosystem (Libraries) | Growing | Extensive | Extensive | Moderate | Good |
| Learning Curve | Steep | Easy | Medium | Medium | Medium |
| Enterprise Scaling | Excellent | Good | Good | Average | Good |
| Fault Tolerance | Excellent | Moderate | Moderate | Low | Moderate |

Elixir excels in high-concurrency enterprise scraping, while Python/Node.js dominate in terms of community support and libraries.

Real-World Applications of Web Scraping with Elixir

- E-commerce Price Monitoring – Scrape Amazon, eBay, and Walmart for real-time price tracking.

- Travel Aggregation – Collect flight and hotel data for travel apps.

- Job Boards – Extract listings from Indeed, LinkedIn, and niche sites.

- Real Estate Data – Gather property details for investment analysis.

- Market Research – Extract competitor and consumer sentiment data.

For large projects, outsourcing to Web Scraping Services or integrating RealDataAPI ensures accuracy, speed, and scalability.

Conclusion

Elixir might not be the first language that comes to mind for web scraping, but its concurrency, scalability, and fault tolerance make it a hidden gem for data-intensive scraping tasks. With tools like HTTPoison, Floki, and Crawly, you can build scrapers that rival those made in Python or JavaScript.

However, for businesses and enterprises that require continuous large-scale data pipelines, relying on Web Scraping Services, Enterprise Web Crawling Services, or a Web Scraping API like RealDataAPI can save significant time and cost.

Whether you’re a developer exploring Elixir for fun or a business looking to scale your data operations, this powerful language offers everything you need to build efficient scrapers and pipelines.