Introduction

In today’s data-driven world, access to information has become a competitive edge for businesses and developers alike. Whether you’re monitoring competitor pricing, extracting product data, or aggregating insights from multiple sources, web scraping is at the heart of it all. While many developers use Python or PHP for scraping tasks, Java stands tall as a robust, scalable, and enterprise-friendly language that can handle large-scale data extraction efficiently. In this guide, we’ll explore everything you need to know about web scraping with Java, from fundamentals and tools to advanced techniques and real-world applications.

We’ll also highlight how businesses can leverage solutions like Web Scraping Services, Enterprise Web Crawling Services, and APIs such as RealDataAPI to scale beyond in-house scrapers.

Why Choose Java for Web Scraping Services?

When it comes to scraping, developers often debate which language is best. Here’s why Java is a strong contender:

Performance and Scalability – Java is known for its multithreading and memory management capabilities, making it ideal for large-scale crawling.

Cross-Platform Support – Java applications run on any operating system with a compatible JVM.

Robust Libraries and Frameworks – Libraries like Jsoup, HtmlUnit, and Selenium for Java make scraping easier.

Enterprise Adoption – Many companies already use Java in their tech stack, so extending into scraping is seamless.

Integration Power – Java works well with databases, APIs, and enterprise-level applications.

If you’re working in an environment where reliability and large-scale crawling are necessary, Java web scraping may be the best fit.

Getting Started: Basics of Web Scraping in Java

Before we dive into tools and advanced methods, let’s cover the basics.

Step 1: Understand the Legal and Ethical Boundaries

Web scraping should always respect:

- Robots.txt rules of websites.

- Terms of Service (TOS).

- Ethical boundaries to avoid overloading servers.

If you would rather not manage these concerns yourself, you can rely on professional Web Scraping Services that handle compliance, IP rotation, and scaling for you.
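To make the robots.txt point concrete, here is a minimal sketch of a check you could run before requesting a path. It is deliberately simplified: it reads only the `User-agent: *` group and treats each `Disallow` value as a plain path prefix, ignoring `Allow` rules, wildcards, and crawl delays. The class and method names (`RobotsCheck`, `isAllowed`) are made up for illustration, and the robots.txt content is passed in as a string rather than downloaded.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public class RobotsCheck {

    // Collects Disallow prefixes from the "User-agent: *" group only (simplified).
    static List<String> disallowedPrefixes(String robotsTxt) {
        List<String> prefixes = new ArrayList<>();
        boolean inWildcardGroup = false;
        boolean lastWasUserAgent = false;
        for (String rawLine : robotsTxt.split("\\R")) {
            // Drop comments and surrounding whitespace.
            String line = rawLine.replaceAll("#.*", "").trim();
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue;
            }
            String field = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim();
            if (field.equals("user-agent")) {
                // Consecutive User-agent lines share one group of rules.
                inWildcardGroup = (lastWasUserAgent && inWildcardGroup) || value.equals("*");
                lastWasUserAgent = true;
            } else {
                lastWasUserAgent = false;
                if (field.equals("disallow") && inWildcardGroup && !value.isEmpty()) {
                    prefixes.add(value);
                }
            }
        }
        return prefixes;
    }

    // Returns false when the path starts with any disallowed prefix.
    static boolean isAllowed(String robotsTxt, String path) {
        for (String prefix : disallowedPrefixes(robotsTxt)) {
            if (path.startsWith(prefix)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        String robots = "User-agent: *\nDisallow: /admin/\nDisallow: /cart\n";
        System.out.println(isAllowed(robots, "/news/today"));  // prints true
        System.out.println(isAllowed(robots, "/admin/users")); // prints false
    }
}
```

For production use, a parser that follows the full Robots Exclusion Protocol is a safer choice than a hand-rolled check like this one.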

Step 2: Setting Up Your Java Environment

- Install a Java JDK (version 17 or later).

- Set up an IDE like IntelliJ IDEA or Eclipse.

- Add required dependencies (via Maven or Gradle) for scraping libraries.

Example (Maven dependency for Jsoup):

    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>1.15.3</version>
    </dependency>

Step 3: Fetching and Parsing HTML with Jsoup

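Jsoup is one of the most widely used Java libraries for scraping and HTML parsing. It fetches a page over HTTP, parses it into a DOM, and lets you query that DOM with CSS selectors.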

Example: Scraping titles from a news website

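    import java.io.IOException;

    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;

    public class JsoupExample {
        public static void main(String[] args) {
            try {
                // Fetch the page and parse it into a DOM (URL and selector are placeholders)
                Document doc = Jsoup.connect("https://example.com/news")
                        .timeout(10_000) // give up after 10 seconds
                        .get();
                // Select every <h2 class="article-title"> element
                Elements titles = doc.select("h2.article-title");
                for (Element title : titles) {
                    System.out.println(title.text());
                }
            } catch (IOException e) {
                // Network failures and HTTP errors surface as IOException
                e.printStackTrace();
            }
        }
    }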

Example output (illustrative; the URL and selector above are placeholders):

    Breaking News: Market Updates
    Tech Giants Release New Products
    Global Economy Insights

This simple example shows how quickly Java can extract structured data.

Advanced Java Web Scraping Tools and Frameworks

1. Jsoup – Best for static HTML parsing

- Lightweight and easy to use.

- Supports DOM traversal, CSS selectors, and data extraction.

- Great for blogs, news websites, and eCommerce product pages.

2. HtmlUnit – Headless browser for Java

- Simulates a browser without rendering UI.

- Executes JavaScript, which Jsoup does not, although pages built on modern front-end frameworks may still render incompletely.

3. Selenium for Java – Best for dynamic content

- Automates browsers like Chrome or Firefox.

- Can click buttons, fill forms, and scrape JavaScript-rendered data.

4. Apache HttpClient – For advanced HTTP requests

- Allows handling headers, cookies, and sessions.

- Useful for APIs and login-based scraping.

5. Crawler4j – Enterprise-level web crawler

- Built for large-scale scraping and crawling.

- Multithreaded crawling for enterprise data needs.

For businesses, Enterprise Web Crawling Services often combine these tools into scalable, managed solutions with built-in IP rotation and anti-blocking mechanisms.
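For a sense of what multithreaded crawling involves under the hood, here is a minimal sketch that uses only the JDK's `ExecutorService` rather than Crawler4j itself. The class name `ParallelFetchSketch` and the `fetcher` parameter are illustrative assumptions: the fetcher stands in for whatever actually downloads a page (Jsoup, an HTTP client, and so on), so the example focuses on the bounded thread pool.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

public class ParallelFetchSketch {

    // Applies fetcher to every URL with at most `threads` concurrent workers.
    // Results are returned in the same order as the input URLs.
    static List<String> fetchAll(List<String> urls, int threads,
                                 Function<String, String> fetcher) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String url : urls) {
                Callable<String> task = () -> fetcher.apply(url);
                futures.add(pool.submit(task));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get()); // blocks until that task finishes
            }
            return results;
        } finally {
            pool.shutdown(); // stop accepting work so the JVM can exit
        }
    }

    public static void main(String[] args) throws Exception {
        // Placeholder URLs and a fake fetcher, so the sketch runs without network access
        List<String> urls = List.of("https://example.com/a", "https://example.com/b");
        for (String result : fetchAll(urls, 2, url -> "fetched " + url)) {
            System.out.println(result);
        }
    }
}
```

Capping the pool size keeps the number of simultaneous requests to a site predictable, which matters as much for politeness as for throughput.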

Handling Dynamic Websites with Java

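Modern websites rely heavily on JavaScript frameworks like React, Angular, and Vue.js. Jsoup does not execute JavaScript, so it only sees the initial HTML the server returns; content that is rendered in the browser afterwards will be missing from the parsed document.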

Solution 1: Selenium with WebDriver

Example of scraping dynamically loaded content:

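    import java.time.Duration;

    import org.openqa.selenium.By;
    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.WebElement;
    import org.openqa.selenium.chrome.ChromeDriver;
    import org.openqa.selenium.support.ui.ExpectedConditions;
    import org.openqa.selenium.support.ui.WebDriverWait;

    public class SeleniumExample {
        public static void main(String[] args) {
            // Selenium 4.6+ can usually locate a matching driver itself, making this line optional
            System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");
            WebDriver driver = new ChromeDriver();
            try {
                driver.get("https://example.com/products");
                // Wait up to 10 seconds for the JavaScript-rendered element (Selenium 4 API)
                WebDriverWait wait = new WebDriverWait(driver, Duration.ofSeconds(10));
                WebElement element = wait.until(
                        ExpectedConditions.visibilityOfElementLocated(By.className("product-title")));
                System.out.println(element.getText());
            } finally {
                // Always close the browser, even if the lookup fails
                driver.quit();
            }
        }
    }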

Solution 2: Headless Browsers

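Run Chrome in headless mode for faster scraping without rendering a UI. With Selenium, this typically means passing the --headless=new argument through ChromeOptions before creating the ChromeDriver.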

Solution 3: Hybrid Approach

- Fetch static data with Jsoup and only use Selenium for dynamic parts.

Managing Large-Scale Scraping in Java

When you move from scraping a single page to thousands, scaling challenges appear:

- Rate Limiting & Throttling – Add delays to avoid IP bans.

- Proxy & IP Rotation – Essential for large-scale crawling.

- Data Storage – Store results in databases (MySQL, MongoDB, Elasticsearch).

- Error Handling & Retries – Websites may block requests or change their layout frequently, so failed fetches need to be retried and parsing errors logged.

This is where Enterprise Web Crawling Services shine. Instead of managing proxies, retries, and scaling yourself, you can rely on providers that offer managed infrastructure.
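For teams that do handle retries in-house, the error-handling point above might be sketched as a small helper that retries a failing task with exponential backoff. The names `RetrySketch` and `withRetry` are hypothetical, and the delays in `main` are kept tiny so the example runs quickly; real scrapers would normally wait seconds rather than milliseconds.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

public class RetrySketch {

    // Runs the task up to maxAttempts times, doubling the wait after each failure.
    // The last failure is rethrown once the attempts are used up.
    static <T> T withRetry(Callable<T> task, int maxAttempts, long initialDelayMs)
            throws Exception {
        long delay = initialDelayMs;
        for (int attempt = 1; ; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                if (attempt >= maxAttempts) {
                    throw e;
                }
                Thread.sleep(delay);
                delay *= 2; // exponential backoff
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        // Simulated fetch that fails twice before succeeding
        String result = withRetry(() -> {
            if (++calls[0] < 3) {
                throw new IOException("simulated failure");
            }
            return "ok";
        }, 5, 10);
        System.out.println(result + " after " + calls[0] + " attempts"); // prints "ok after 3 attempts"
    }
}
```

Wrapping the fetch in a `Callable` keeps the retry policy separate from the scraping logic, so the same helper can be reused with Jsoup, Selenium, or an API call.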

Using a Web Scraping API Instead of Custom Java Scrapers

Sometimes, instead of writing custom scrapers, businesses opt for APIs. A Web Scraping API abstracts away the complexity of handling proxies, CAPTCHAs, and anti-bot measures.

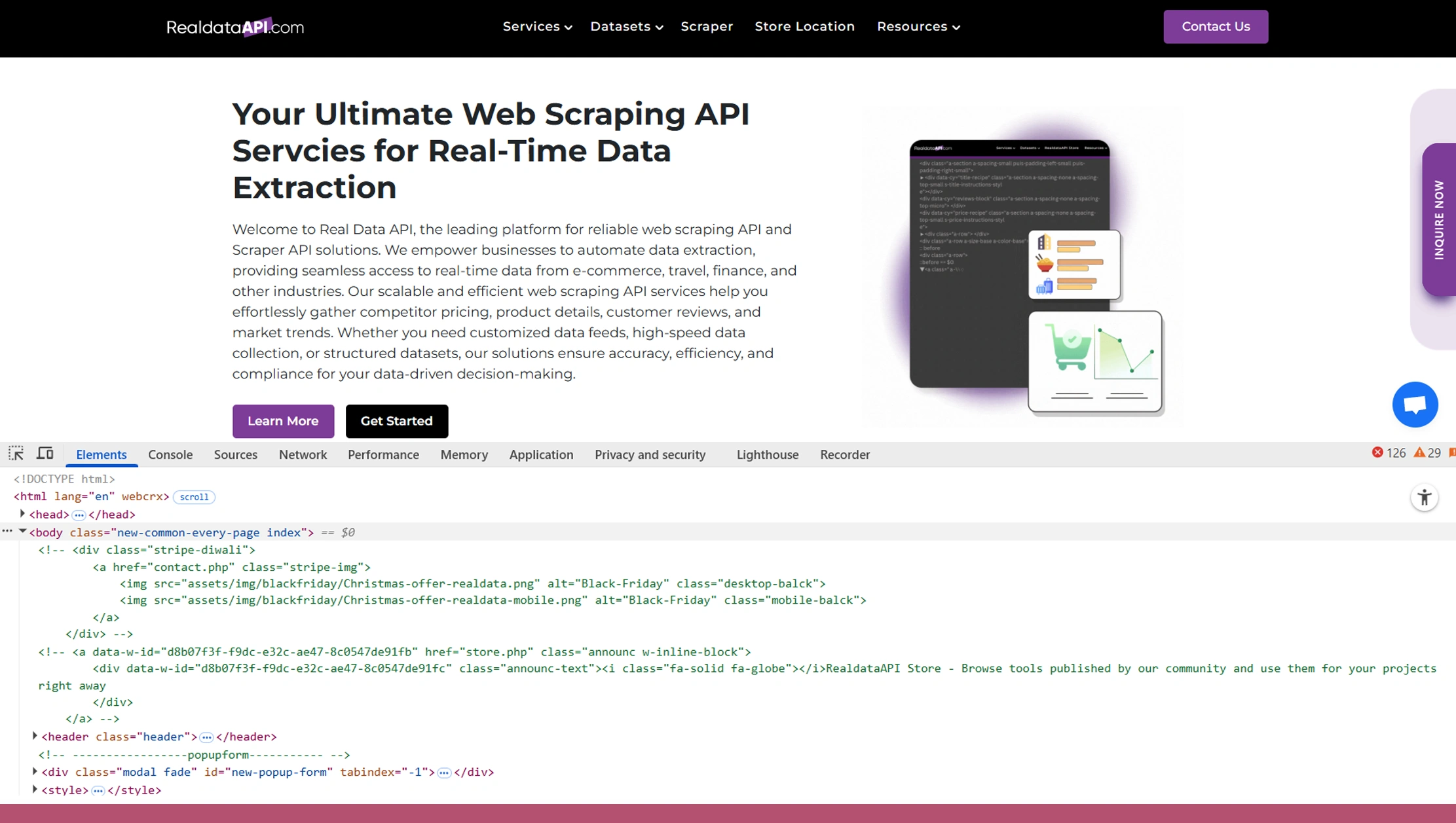

For example, RealDataAPI offers ready-to-use endpoints intended to return clean data from target websites. With this, developers can focus on data analysis instead of infrastructure.

Benefits of using a Web Scraping API like RealDataAPI

- Prebuilt anti-blocking mechanisms.

- Scalable infrastructure.

- Faster time-to-market.

- Cost-effective for businesses compared to in-house maintenance.

Sample Java code using API call:

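    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStreamReader;
    import java.net.HttpURLConnection;
    import java.net.URL;
    import java.nio.charset.StandardCharsets;

    public class APIExample {
        public static void main(String[] args) {
            try {
                String apiUrl = "https://api.realdataapi.com/scrape?url....com";
                URL url = new URL(apiUrl);
                HttpURLConnection conn = (HttpURLConnection) url.openConnection();
                conn.setRequestMethod("GET");
                conn.setConnectTimeout(10_000); // milliseconds
                conn.setReadTimeout(30_000);

                // Stop early on non-200 responses instead of reading an error body
                int status = conn.getResponseCode();
                if (status != HttpURLConnection.HTTP_OK) {
                    System.err.println("Request failed with HTTP status " + status);
                    return;
                }

                // try-with-resources closes the reader even if reading fails;
                // UTF-8 is assumed for the response body
                StringBuilder response = new StringBuilder();
                try (BufferedReader in = new BufferedReader(
                        new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
                    String inputLine;
                    while ((inputLine = in.readLine()) != null) {
                        response.append(inputLine).append('\n');
                    }
                }
                System.out.println(response);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }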

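The request itself is only a few lines of standard Java; proxy handling, CAPTCHAs, and anti-bot measures are dealt with on the provider's side, which is how an API like RealDataAPI reduces the work of building and maintaining scrapers.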
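One detail worth handling when calling such an API is encoding the target page's address before placing it in the query string, since characters like `?` and `&` would otherwise be read as part of the API request. The sketch below assumes the target is passed in a `url` query parameter, as the truncated example above suggests; the endpoint `https://api.example.com/scrape` and the names `ApiUrlBuilder` and `buildScrapeUrl` are placeholders, so check the provider's documentation for the real parameters.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

public class ApiUrlBuilder {

    // Appends the target page as a percent-encoded "url" query parameter.
    static String buildScrapeUrl(String endpoint, String targetUrl) {
        return endpoint + "?url=" + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Hypothetical endpoint and target page, for illustration only
        String requestUrl = buildScrapeUrl(
                "https://api.example.com/scrape",
                "https://example.com/products?page=2&sort=price");
        System.out.println(requestUrl);
    }
}
```

Without the encoding step, the `&sort=price` part of the target address would be interpreted as a separate parameter of the API call rather than as part of the page to scrape.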

Real-World Use Cases of Web Scraping with Java

E-commerce Price Monitoring

- Extract competitor product prices daily.

- Use Java + Jsoup or an API for real-time updates.
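Scraped prices usually arrive as display strings, so they need normalizing before they can be compared from day to day. The following is a rough sketch, assuming US-style formatting (comma as thousands separator, period as decimal mark) and no other periods in the text; `PriceCleaner` and `parsePrice` are illustrative names, not part of any library.

```java
import java.math.BigDecimal;
import java.util.Optional;

public class PriceCleaner {

    // Strips currency symbols and thousands separators, assuming "." is the decimal mark.
    // Returns Optional.empty() when no valid number remains.
    static Optional<BigDecimal> parsePrice(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        String digits = raw.replaceAll("[^0-9.]", "");
        try {
            return Optional.of(new BigDecimal(digits));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        System.out.println(parsePrice("$1,299.99")); // prints Optional[1299.99]
        System.out.println(parsePrice("N/A"));       // prints Optional.empty
    }
}
```

`BigDecimal` is used instead of `double` so that prices compare exactly. Sites that use other number formats (for example "1.299,99") would need locale-aware parsing instead.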

Job Listings Aggregation

- Scrape multiple job portals.

- Feed structured job listings into an application.

Market Research

- Crawl reviews, ratings, and feedback from online platforms.

- Businesses use Web Scraping Services for deeper analysis.

Finance and Investment

- Scrape stock data, news, and financial reports.

Travel Aggregators

- Extract flight, hotel, and booking data for real-time comparisons.

For enterprise-scale scenarios, Enterprise Web Crawling Services are often more cost-effective and reliable.

Challenges in Java Web Scraping

While Java is powerful, scraping does present challenges:

- IP Blocking – Websites may block repeated requests.

- CAPTCHAs – Hard to bypass without automation tools.

- Frequent Layout Changes – Websites update HTML often.

- Scalability Costs – Maintaining servers, proxies, and scrapers can be expensive.

This is why many businesses integrate Web Scraping API solutions like RealDataAPI into their workflows to overcome these hurdles.

Best Practices for Web Scraping with Java

- Always respect robots.txt and site policies.

- Use User-Agent rotation to mimic browsers.

- Add delays to prevent server overload.

- Validate and clean extracted data.

- Prefer using Web Scraping Services for compliance and scale.
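Two of the practices above, User-Agent rotation and request delays, can be combined in one small helper. This is a sketch under stated assumptions: `PoliteRequestSketch` is a hypothetical class, the User-Agent strings are placeholders, and the actual HTTP call is left out so that only the rotation and pacing logic is shown.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class PoliteRequestSketch {

    private final List<String> userAgents;
    private final long minDelayMs;
    private final AtomicInteger next = new AtomicInteger();
    private long lastRequestAt = 0;

    PoliteRequestSketch(List<String> userAgents, long minDelayMs) {
        this.userAgents = List.copyOf(userAgents);
        this.minDelayMs = minDelayMs;
    }

    // Round-robin selection over the configured User-Agent strings.
    String nextUserAgent() {
        int index = Math.floorMod(next.getAndIncrement(), userAgents.size());
        return userAgents.get(index);
    }

    // Blocks until at least minDelayMs has passed since the previous call.
    synchronized void awaitTurn() throws InterruptedException {
        long wait = lastRequestAt + minDelayMs - System.currentTimeMillis();
        if (wait > 0) {
            Thread.sleep(wait);
        }
        lastRequestAt = System.currentTimeMillis();
    }

    public static void main(String[] args) throws InterruptedException {
        // Placeholder User-Agent values; substitute the ones appropriate for your scraper
        PoliteRequestSketch pacing = new PoliteRequestSketch(
                List.of("ExampleAgent/1.0", "ExampleAgent/2.0"), 500);
        for (String url : List.of("https://example.com/1", "https://example.com/2",
                "https://example.com/3")) {
            pacing.awaitTurn();
            System.out.println(url + " with User-Agent " + pacing.nextUserAgent());
        }
    }
}
```

In a real scraper, `awaitTurn()` would be called immediately before each request and the returned User-Agent set as a header on it.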

Conclusion

Java web scraping is a powerful solution for businesses and developers looking to extract structured data from the web. With libraries like Jsoup, Selenium, HtmlUnit, and Crawler4j, developers can build robust scrapers capable of handling static and dynamic sites.

However, when scaling becomes complex, managed solutions like Enterprise Web Crawling Services or a Web Scraping API such as RealDataAPI provide the necessary infrastructure and reliability.

Whether you’re a developer experimenting with Java scrapers or a business seeking Web Scraping Services, this guide gives you a strong foundation to move forward.

By mastering Java scraping techniques and leveraging APIs, you can unlock the full potential of data for competitive advantage.