Introduction

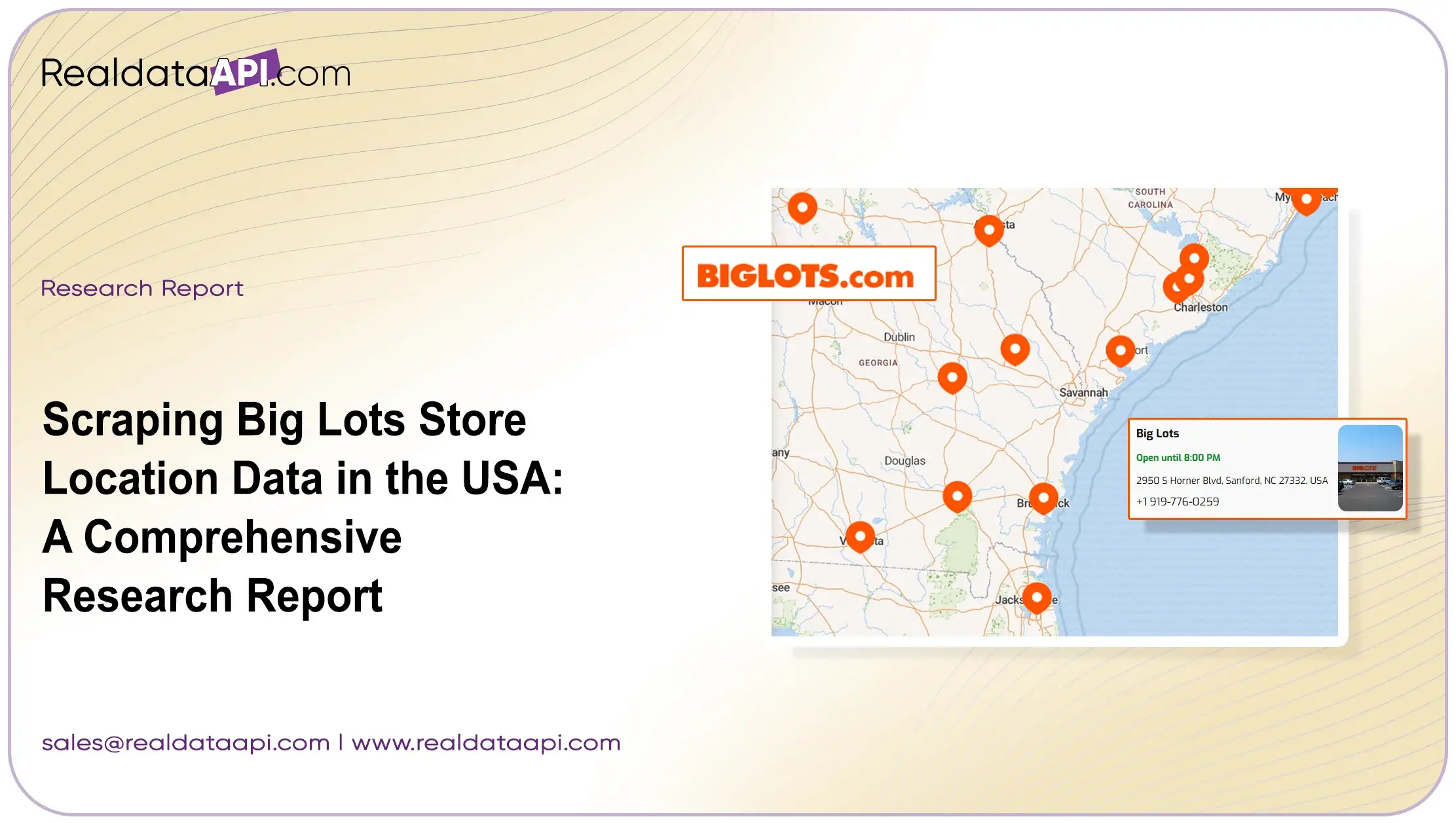

Big Lots, a leading retail chain in the United States, is known for offering a wide variety of products ranging from home goods and furniture to groceries and seasonal items. With hundreds of stores spread across multiple states, Big Lots represents a significant retail footprint, making its store location data valuable for businesses, analysts, and market researchers. However, manually collecting accurate location information is time-consuming and prone to errors. Web scraping services automate that collection and make it repeatable.

This research report explores how scraping Big Lots store locations data in the USA can help businesses gain strategic insights, optimize market planning, and enhance competitive analysis.

Understanding the Importance of Retail Location Data

Store location data is crucial for modern businesses seeking a competitive edge. By analyzing Big Lots' retail presence, organizations can:

- Identify high-density markets and underserved areas.

- Study regional consumer behavior and purchasing trends.

- Benchmark against competitors such as Walmart, Target, and Dollar General.

For retail analysts, supply chain managers, and real estate developers, access to accurate store location data facilitates informed decisions on expansion, logistics, and market strategy. By integrating this data through an Ecommerce Scraping API, businesses can automate updates and incorporate the information seamlessly into internal systems.

The Role of Web Scraping in Data Collection

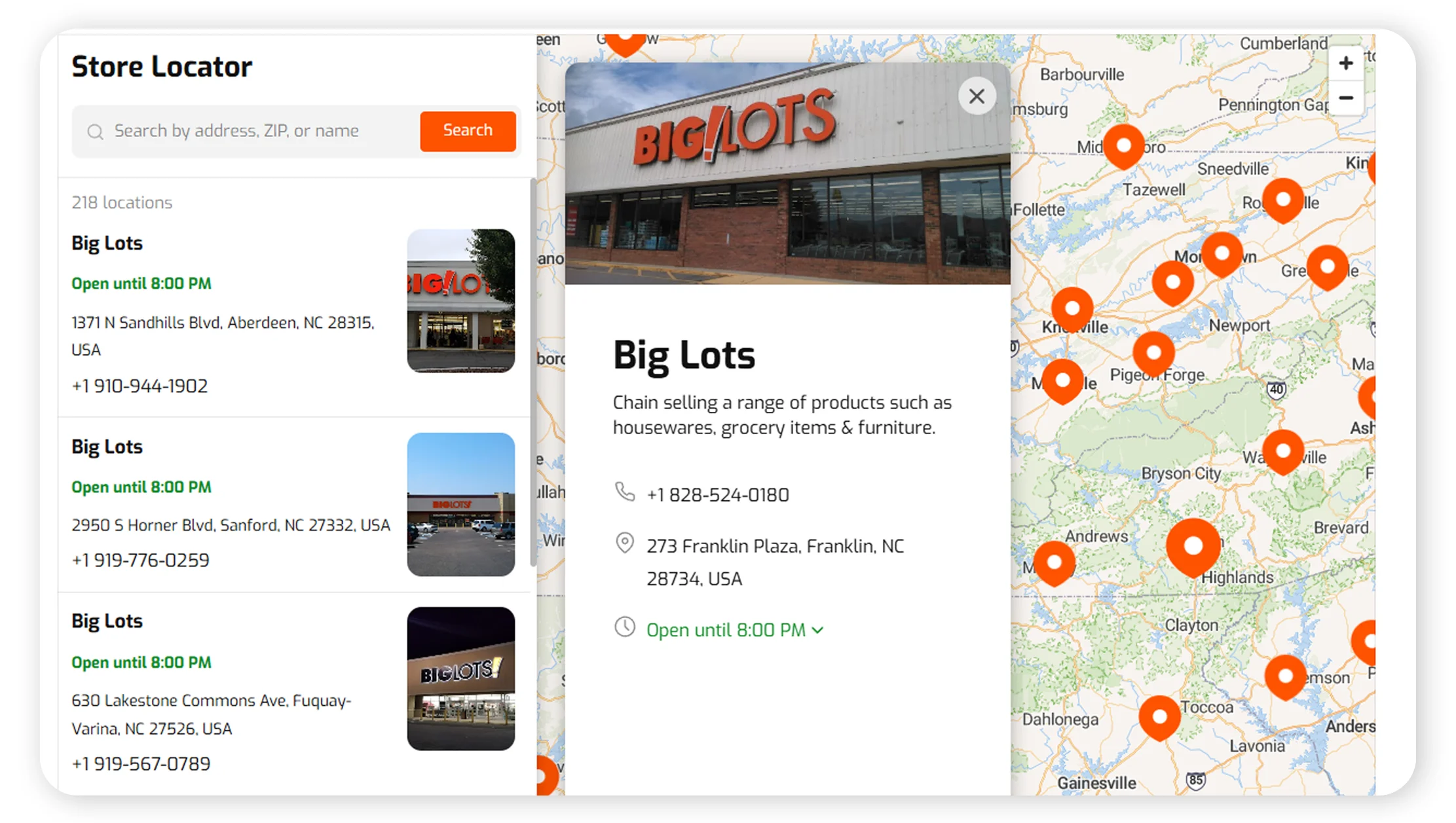

Web scraping is the process of extracting structured data from websites using automated scripts or APIs. Scraping Big Lots store locations involves gathering details such as store names, addresses, contact information, operating hours, and geocoordinates from official sources or trusted directories.

Unlike manual collection, web scraping enables large-scale extraction in minutes. Combined with validation and cleaning, it improves data accuracy and completeness and simplifies integration into analytics systems. Automated scraping can also be scheduled to refresh data periodically, keeping your dataset current. The output can be structured as E-Commerce Datasets for analytics, reporting, and strategic decision-making.

How Big Lots Location Data Scraping Works

1. Data Source Identification: The scraper identifies official store locator URLs or third-party directories listing Big Lots outlets. Each entry typically includes store address, ZIP code, and geographic coordinates.

2. Crawling and Extraction: Automated crawlers navigate through store listings and extract structured information:

- Store Name

- Street Address

- City and State

- ZIP Code

- Latitude and Longitude

- Contact Information

- Operating Hours

3. Data Cleaning and Structuring: Extracted data is refined to remove duplicates, correct formatting errors, and fill missing values. The structured dataset is then saved in formats like CSV, JSON, or database tables.

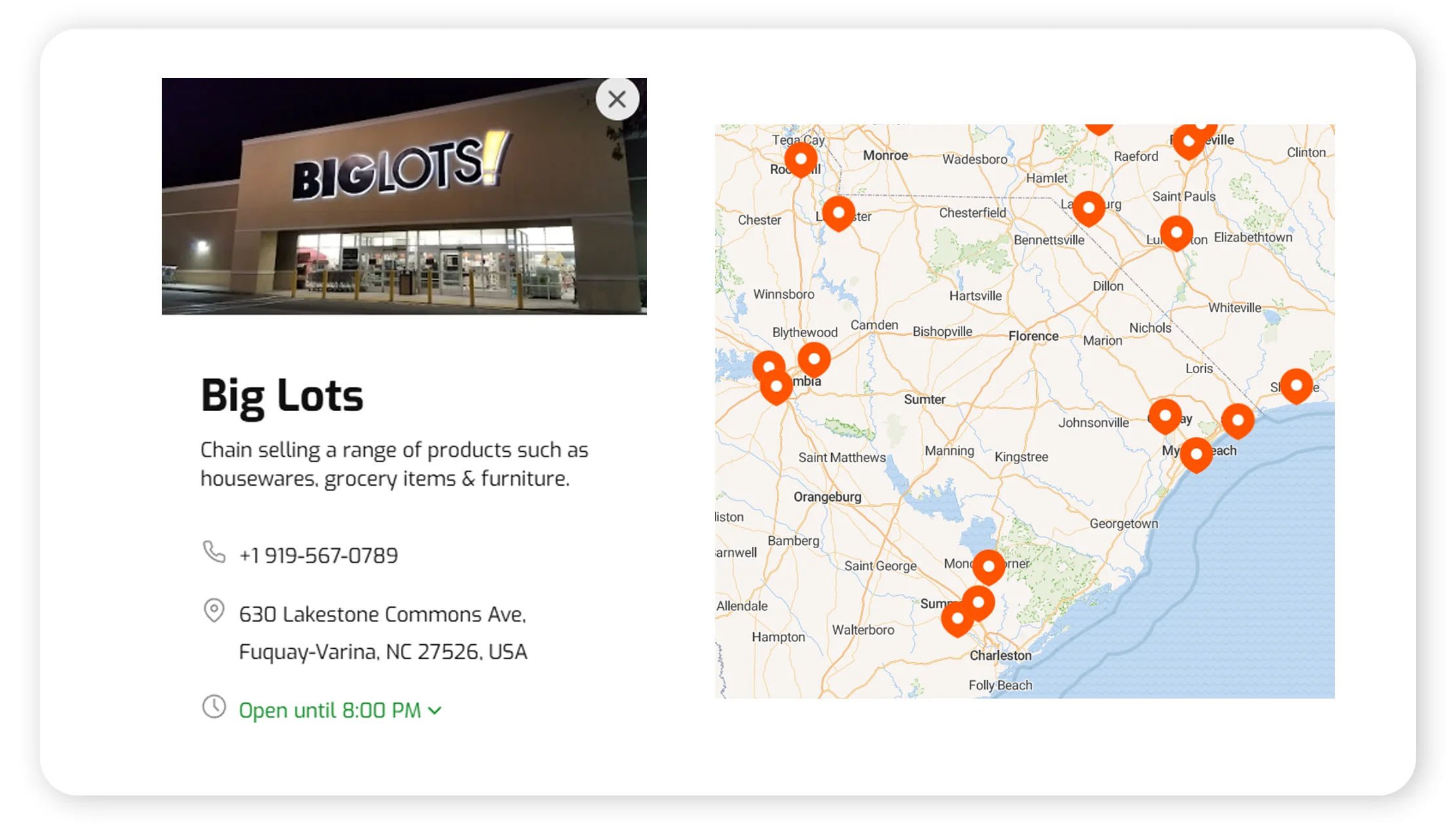

4. Geocoding and Visualization: Location data is enhanced with geospatial attributes for mapping in tools such as Tableau, Google Maps API, or GIS platforms to visualize Big Lots' presence across regions.
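The extraction and cleaning steps above can be sketched in a few lines of Python. This is a minimal illustration, not the actual pipeline: it assumes the locator returns store records as JSON, and the field names (`name`, `street`, `lat`, `lng`, and so on) and the sample payload are hypothetical. Missing coordinates are left blank rather than guessed.

```python
import csv
import io
import json

# Hypothetical payload: field names and values are assumptions for illustration,
# not the real schema of any Big Lots store locator.
SAMPLE_PAYLOAD = """
[
  {"name": "Big Lots #1", "street": " 100 Main St ", "city": "Columbus", "state": "oh",
   "zip": "43215", "lat": "39.9612", "lng": "-82.9988", "phone": "555-0100", "hours": "9-9"},
  {"name": "Big Lots #1", "street": "100 Main St", "city": "Columbus", "state": "OH",
   "zip": "43215", "lat": "39.9612", "lng": "-82.9988", "phone": "555-0100", "hours": "9-9"},
  {"name": "Big Lots #2", "street": "200 Oak Ave", "city": "Dayton", "state": "OH",
   "zip": "45402", "lat": null, "lng": null, "phone": "", "hours": "9-9"}
]
"""

FIELDS = ["name", "street", "city", "state", "zip", "lat", "lng", "phone", "hours"]


def clean_record(raw):
    """Trim whitespace, turn missing values into empty strings, uppercase the state."""
    rec = {}
    for field in FIELDS:
        value = raw.get(field)
        rec[field] = str(value).strip() if value is not None else ""
    rec["state"] = rec["state"].upper()
    return rec


def clean_records(raw_records):
    """Clean every record and drop duplicates that share street and ZIP code."""
    seen = set()
    cleaned = []
    for raw in raw_records:
        rec = clean_record(raw)
        key = (rec["street"].lower(), rec["zip"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned


def to_csv(records):
    """Serialize cleaned records to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


stores = clean_records(json.loads(SAMPLE_PAYLOAD))
csv_text = to_csv(stores)
```

Deduplicating on street plus ZIP code is one possible key. A stable store ID, if the source provides one, would be a more reliable choice.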

Use Cases of Scraping Big Lots Store Data

- Market Expansion Analysis: Identify high-performing regions and potential areas for new store openings based on existing store density.

- Competitor Benchmarking: Analyze proximity to competitors' stores to optimize market positioning and capture new consumer segments.

- Real Estate and Site Selection: Retail investors and developers can use location density to identify strategic commercial zones for expansion or partnerships.

- Logistics and Delivery Optimization: E-commerce and delivery services can plan efficient routes using outlet geocoordinates to reduce transit time.

- Customer Access and Regional Studies: Combined with demographic data, Big Lots location datasets help assess accessibility, market saturation, and service reach.
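As a small illustration of the density-based use cases above, the sketch below counts stores per state from a cleaned dataset. The records and the `store_id` and `state` field names are hypothetical placeholders, not real Big Lots data.

```python
from collections import Counter

# Hypothetical cleaned records; real input would come from the scraped dataset.
sample_stores = [
    {"store_id": "A1", "state": "OH"},
    {"store_id": "A2", "state": "OH"},
    {"store_id": "B1", "state": "TX"},
    {"store_id": "C1", "state": ""},  # missing state, skipped in the count
]


def stores_per_state(records):
    """Count stores by state, ignoring records without a state value."""
    return Counter(r["state"] for r in records if r.get("state"))


density = stores_per_state(sample_stores)
ranked = density.most_common()  # states ordered from most to fewest stores
```

Dividing these counts by population or land area from a separate demographic dataset would turn raw counts into a measure comparable across states.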

Technical and Ethical Considerations

Web scraping should be conducted ethically and within legal boundaries. Key practices include:

- Respecting robots.txt rules and website terms.

- Avoiding personal data extraction.

- Implementing rate-limiting to prevent server overload.

- Crediting data sources where applicable.
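Two of these practices, honoring robots.txt and rate-limiting, can be sketched with Python's standard library. The robots.txt content, the user agent name, and the `example.com` URLs are hypothetical, and `RateLimiter` is an illustrative helper rather than part of any library.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """
User-agent: *
Disallow: /account/
Crawl-delay: 2
"""

USER_AGENT = "example-store-bot"  # hypothetical user agent name


def build_robot_parser(robots_text):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


class RateLimiter:
    """Block so that consecutive calls to wait() are at least min_interval seconds apart."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


robots = build_robot_parser(ROBOTS_TXT)
allowed = robots.can_fetch(USER_AGENT, "https://example.com/stores/ohio")
blocked = robots.can_fetch(USER_AGENT, "https://example.com/account/login")

# Use the site's Crawl-delay when it declares one, otherwise fall back to 1 second.
delay = robots.crawl_delay(USER_AGENT) or 1.0
limiter = RateLimiter(delay)
# A crawler would call limiter.wait() before each request to an allowed URL.
```

In a live crawler, `RobotFileParser.set_url()` followed by `read()` would fetch the site's real robots.txt instead of parsing an inline string.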

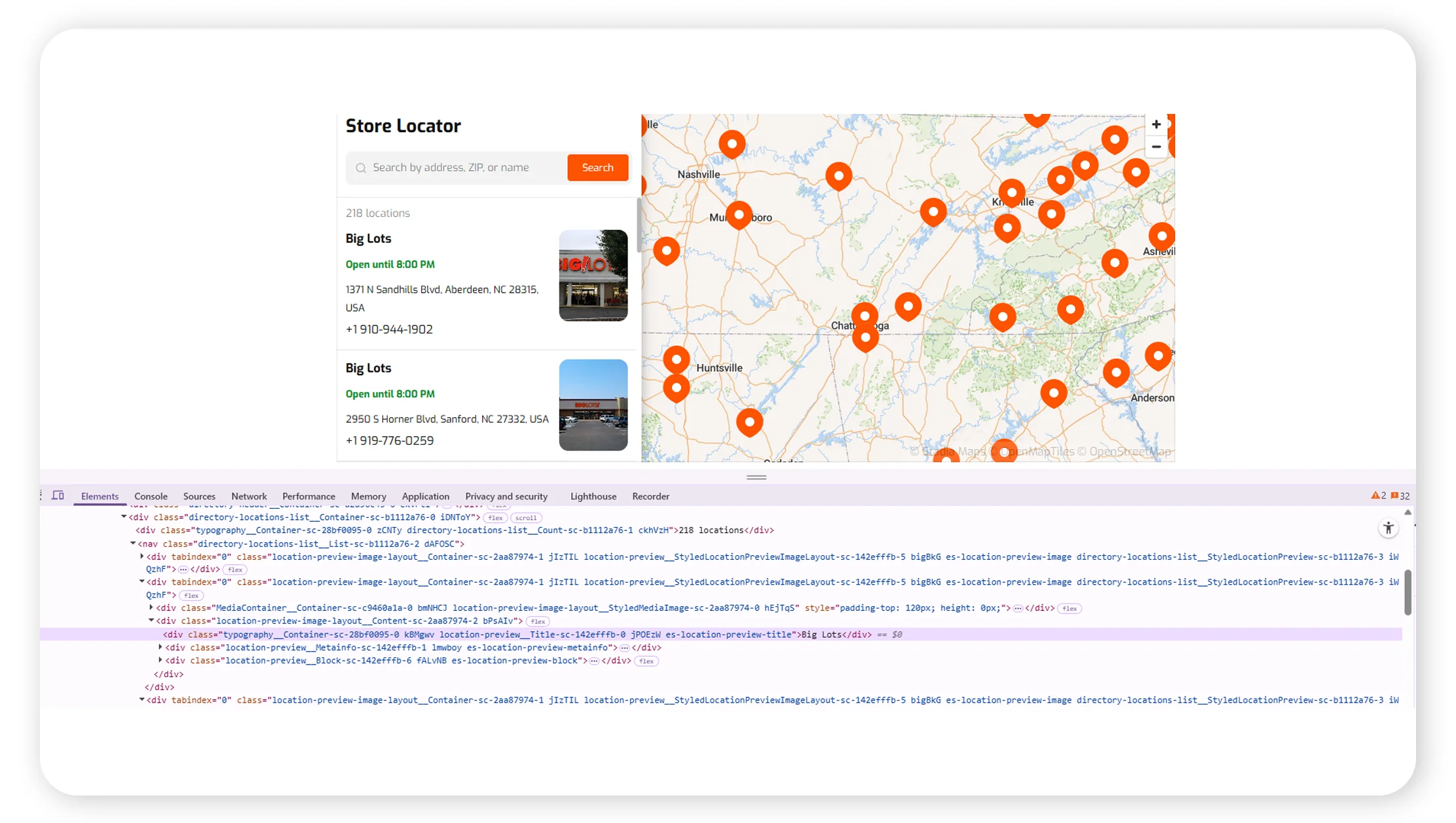

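Technically, many store locators load content dynamically via JavaScript. A browser automation tool such as Selenium can render these pages, while Scrapy and BeautifulSoup handle crawling and parsing of the resulting HTML. Neither executes JavaScript on its own, so they are typically paired with a rendering step or pointed at the underlying data endpoints to extract accurate, complete datasets.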

Challenges in Scraping Big Lots Store Locations

- Dynamic Content Loading: Store listings may load asynchronously, requiring specialized scraping techniques.

- Anti-Bot Measures: CAPTCHAs and tokenized sessions can block automated access.

- Data Normalization: Variations in address formats across states require thorough cleaning.

- Geo-Mapping Accuracy: Correct latitude and longitude values are critical for spatial analysis and visualization.
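The normalization and coordinate checks mentioned above might look like the following sketch. The suffix table is a small illustrative subset, only the last word of the street is normalized (a deliberate simplification), and all function names are hypothetical.

```python
import re

# Illustrative subset of street suffix abbreviations, not a complete table.
SUFFIXES = {
    "street": "St", "st": "St",
    "avenue": "Ave", "ave": "Ave",
    "road": "Rd", "rd": "Rd",
    "boulevard": "Blvd", "blvd": "Blvd",
    "drive": "Dr", "dr": "Dr",
}


def normalize_street(street):
    """Collapse whitespace and abbreviate a trailing street suffix."""
    words = re.sub(r"\s+", " ", street.strip()).split(" ")
    last = words[-1].rstrip(".").lower()
    words[-1] = SUFFIXES.get(last, words[-1])
    return " ".join(words)


def normalize_zip(value):
    """Return a 5-digit ZIP code, restoring leading zeros lost in numeric storage."""
    base = str(value).strip().split("-")[0]  # drop any ZIP+4 extension
    digits = re.sub(r"\D", "", base)
    return digits.zfill(5) if 1 <= len(digits) <= 5 else ""


def valid_coordinates(lat, lng):
    """Check that both values parse as numbers within valid latitude/longitude ranges."""
    try:
        lat_f, lng_f = float(lat), float(lng)
    except (TypeError, ValueError):
        return False
    if lat_f == 0 and lng_f == 0:
        return False  # (0, 0) is a common placeholder for missing coordinates
    return -90 <= lat_f <= 90 and -180 <= lng_f <= 180
```

A stricter check could also confirm that each point falls inside the bounding box of the state listed in the address.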

Integrating Scraped Data into Business Intelligence Systems

Once cleaned, Big Lots store data can be used in BI tools such as Power BI, Tableau, or Looker for:

- Heatmaps of store distribution by state or region.

- Dashboards monitoring new store openings or closures.

- Proximity analysis for competitive benchmarking.

By combining location data with demographic or socio-economic datasets, businesses can uncover patterns in consumer reach and market potential.
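Proximity analysis usually starts from great-circle distances between coordinates. The sketch below uses the haversine formula with a mean Earth radius of 6371 km. The store and competitor records are hypothetical, and `nearest` is an illustrative helper.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest(store, competitors):
    """Return the competitor location closest to the given store."""
    return min(
        competitors,
        key=lambda c: haversine_km(store["lat"], store["lng"], c["lat"], c["lng"]),
    )


# Hypothetical coordinates for illustration only.
store = {"name": "Store 1", "lat": 39.96, "lng": -83.00}
competitors = [
    {"name": "Competitor A", "lat": 39.97, "lng": -83.01},
    {"name": "Competitor B", "lat": 40.10, "lng": -82.90},
]
closest = nearest(store, competitors)
```

For large datasets, a spatial index is generally faster than comparing every store against every competitor as this sketch does.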

Real-Time Data Refresh and API Integration

Integrating scraping scripts with APIs allows store data to be refreshed on a schedule or on demand, so that new openings, relocations, and closures are captured soon after they appear at the source. Applications include:

- Retail analytics dashboards

- Franchise opportunity platforms

- Delivery service optimization

- Geo-targeted marketing campaigns
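One simple way to detect openings, closures, and relocations between refreshes is to compare two snapshots of the dataset. The sketch below assumes each store has a stable identifier and an `address` field. Both are hypothetical, as are the sample snapshots.

```python
def diff_snapshots(previous, current):
    """Compare two {store_id: record} snapshots and report what changed."""
    prev_ids, curr_ids = set(previous), set(current)
    return {
        "opened": sorted(curr_ids - prev_ids),
        "closed": sorted(prev_ids - curr_ids),
        "relocated": sorted(
            store_id
            for store_id in prev_ids & curr_ids
            if previous[store_id]["address"] != current[store_id]["address"]
        ),
    }


# Hypothetical snapshots keyed by store ID.
last_week = {
    "1001": {"address": "100 Main St, Columbus, OH"},
    "1002": {"address": "200 Oak Ave, Dayton, OH"},
    "1003": {"address": "300 Pine Rd, Toledo, OH"},
}
this_week = {
    "1001": {"address": "100 Main St, Columbus, OH"},
    "1003": {"address": "350 Pine Rd, Toledo, OH"},
    "1004": {"address": "400 Elm Dr, Akron, OH"},
}

changes = diff_snapshots(last_week, this_week)
```

A store missing from a single snapshot may reflect a failed scrape rather than a closure, so confirming the change across more than one refresh is a reasonable safeguard.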

Advantages of Automated Store Data Extraction

- Efficiency: Extract thousands of records within minutes.

- Scalability: Apply the same scraper to other retail chains.

- Accuracy: Structured extraction reduces human error.

- Updatability: Schedule automated re-scraping for fresh data.

- Integration: Seamlessly feed into mapping or analytics platforms.

Applications Beyond Big Lots

This methodology applies to other retail chains such as Walmart, Target, and Dollar General, enabling comparative market analysis, retail expansion planning, and competitive benchmarking.

Conclusion

Scraping Big Lots store locations data in the USA empowers businesses, analysts, and researchers to make data-driven decisions. From market analysis and competitor benchmarking to logistics optimization and expansion planning, accurate location data is a key asset in the retail sector.

At Real Data API, we provide advanced retail data scraping services, delivering structured, up-to-date datasets for store locations, pricing, and other key metrics. Our solutions enable smarter, faster, and more informed business strategies for retailers, investors, and analysts alike.
