Introduction

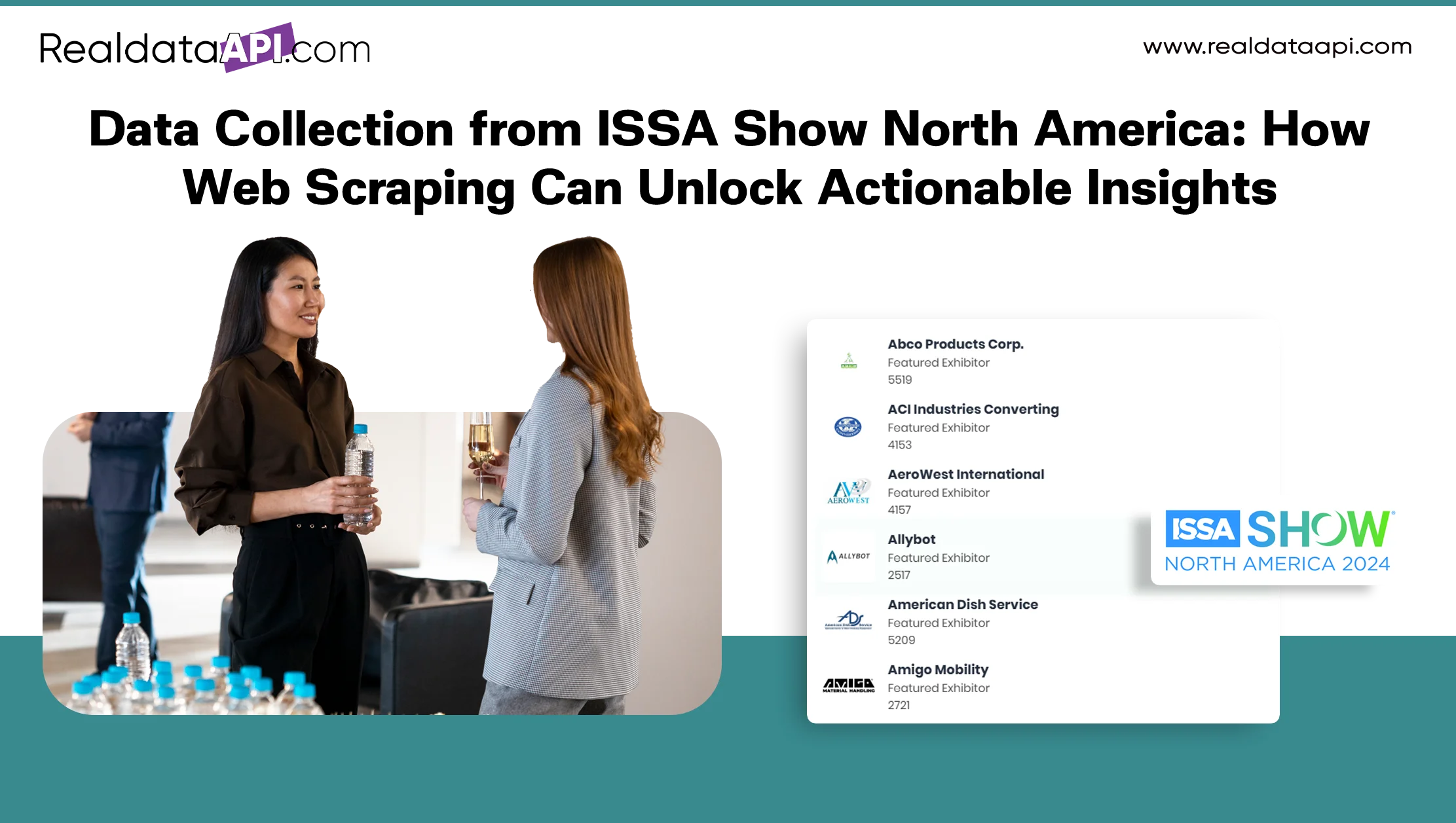

Every year, the ISSA Show North America becomes a focal point for the global cleaning, sanitation, hygiene, and facility management industries. It's more than a trade show—it's a dynamic ecosystem of products, innovations, exhibitors, and business opportunities. Whether you're a supplier, distributor, or analyst, data collection from ISSA Show North America offers valuable insights into market trends, competitor activities, and emerging technologies.

However, manually collecting and analyzing this information can be time-consuming and error-prone. That's where web scraping and data collection automation come in.

This blog explores how businesses can perform Data Collection from ISSA Show North America efficiently using advanced scraping techniques, automation tools, and Web Scraping API such as Real Data API to gather clean, actionable data at scale.

What is the ISSA Show North America?

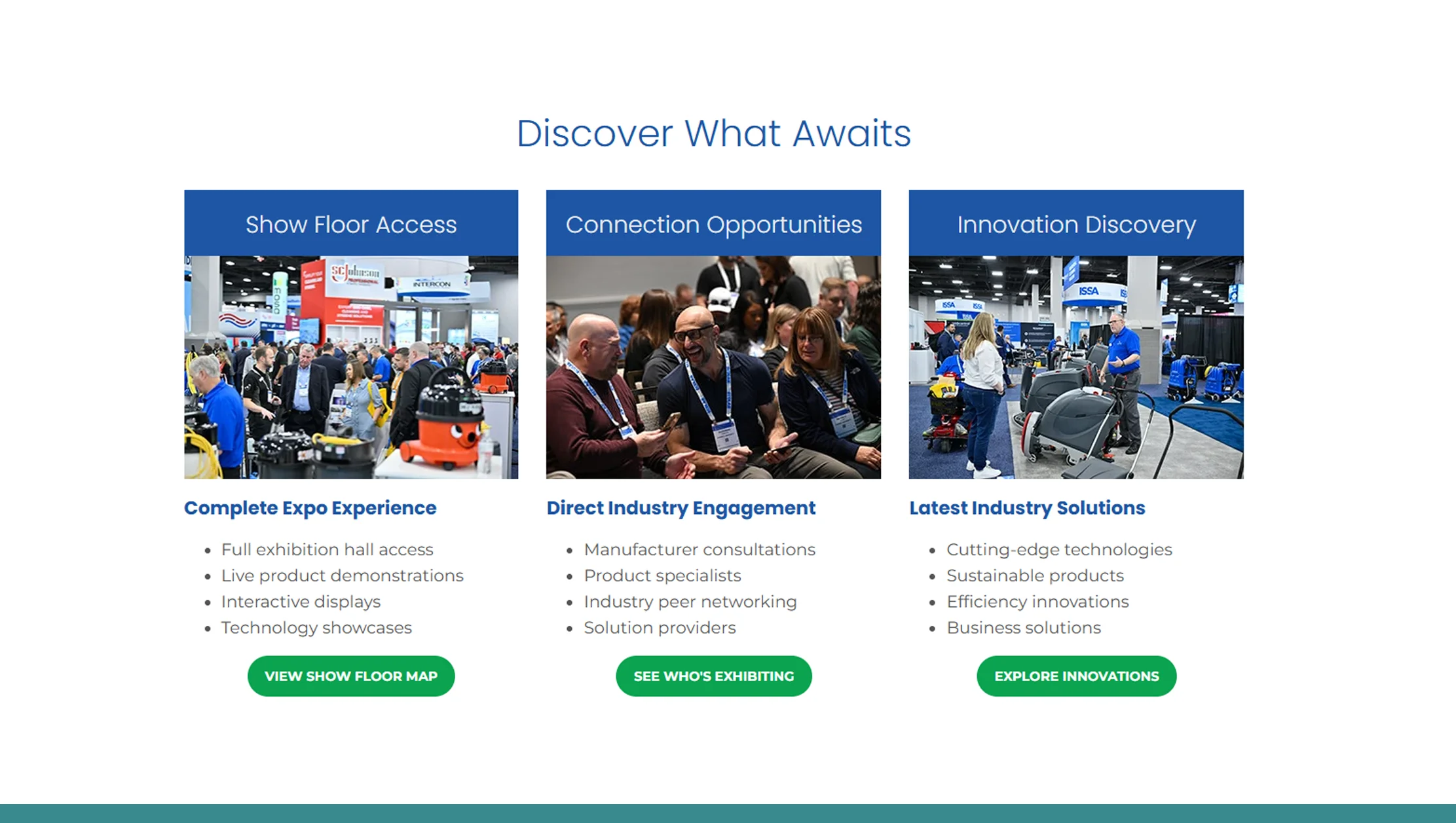

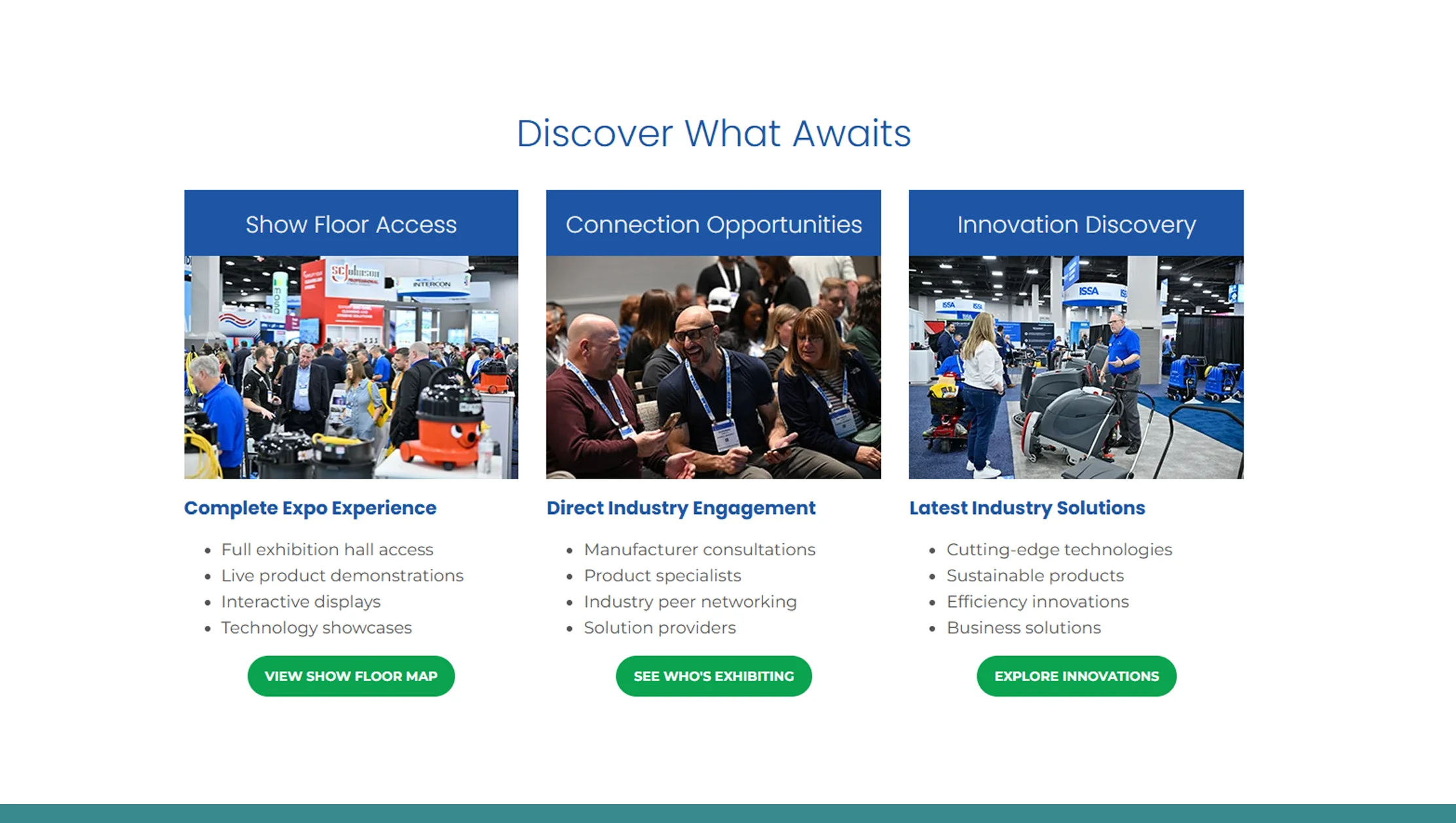

The ISSA Show North America (ISSA Show NA) is the premier annual event for cleaning and facility management professionals. It brings together thousands of exhibitors, brands, and visitors to showcase innovations in cleaning products, sanitation technologies, sustainability solutions, and facility maintenance tools.

The show includes:

- Exhibitor directories with hundreds of company profiles

- Product launches from major cleaning brands

- Seminars and speaker sessions on innovation, sustainability, and best practices

- Networking opportunities for suppliers, distributors, and buyers

Because of this rich diversity, the ISSA Show North America becomes a valuable data source for anyone studying the commercial cleaning market.

Why Collect Data from ISSA Show North America?

Data from the ISSA Show can help businesses make smarter, faster, and more informed decisions. Here's how:

a. Market Intelligence

Scraping exhibitor and product information helps businesses analyze:

- What new cleaning solutions are trending

- Which brands are expanding their presence

- What categories (eco-friendly, AI-driven cleaning, etc.) are dominating

b. Competitive Benchmarking

You can compare your product visibility, booth position, or innovation coverage with that of your competitors to evaluate market standing.

c. Lead Generation

By extracting exhibitor contact information and company details, you can perform Lead Generation to:

- Build a verified B2B contact list

- Target potential distributors or partners

- Identify suppliers offering the raw materials or technologies you need

d. Product Trend Analysis

By scraping product descriptions and launch announcements, companies can detect emerging technologies or sustainability initiatives before they become mainstream.

e. Content and SEO Strategy

Analyzing session topics, speaker bios, and show categories helps content marketers identify trending themes for blogs, videos, and campaigns.

Types of Data Available at ISSA Show North America

When you scrape the ISSA Show data, you can capture multiple layers of structured information:

| Category | Data Fields | Use Case |

|---|---|---|

| Exhibitors | Company name, booth number, category, contact info, website, location | B2B lead generation, market mapping |

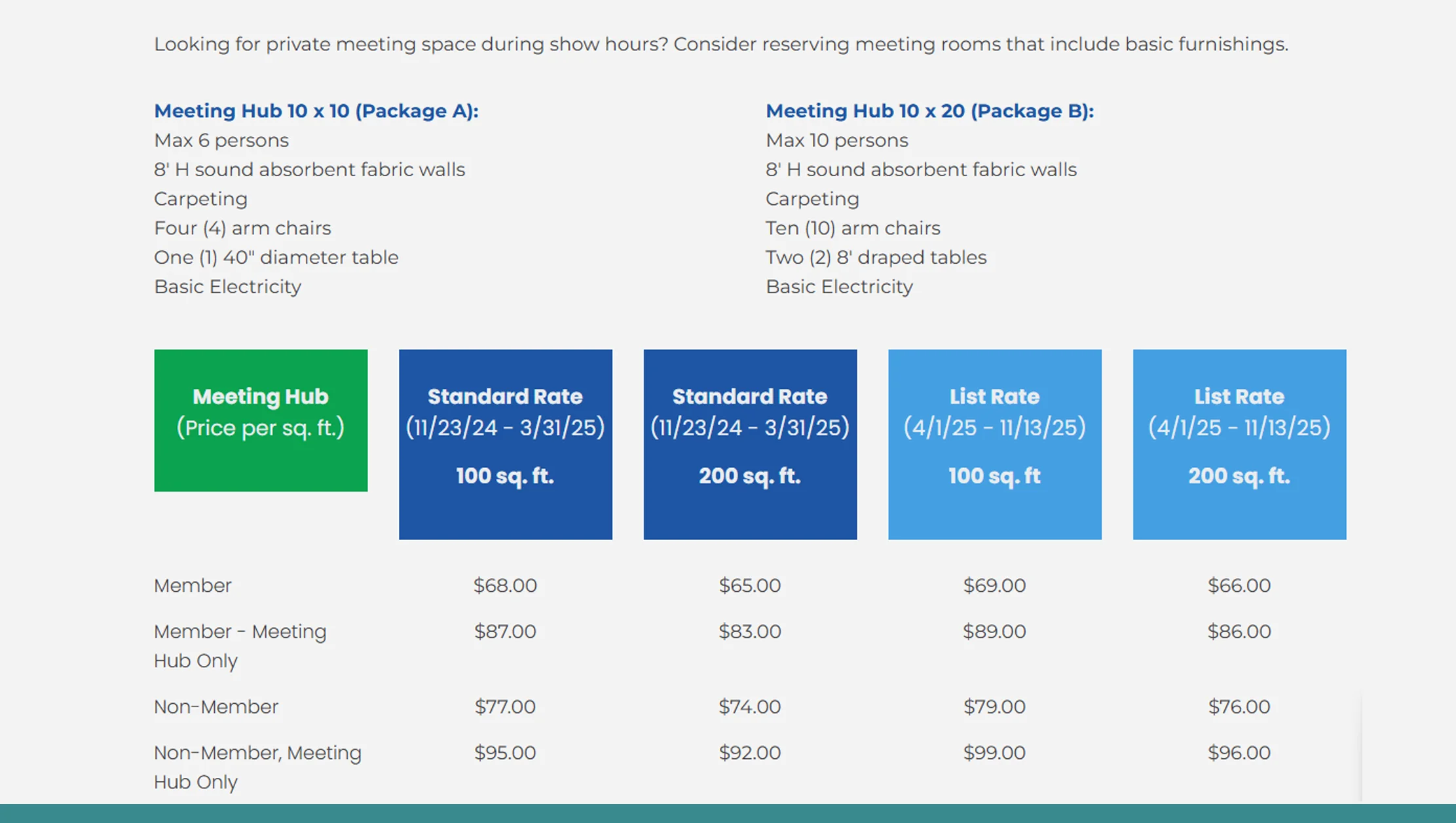

| Products | Product name, description, technical details, price range, image, category | Product analysis, pricing insights |

| Speakers | Name, designation, company, session title, topic | Networking, influencer collaboration |

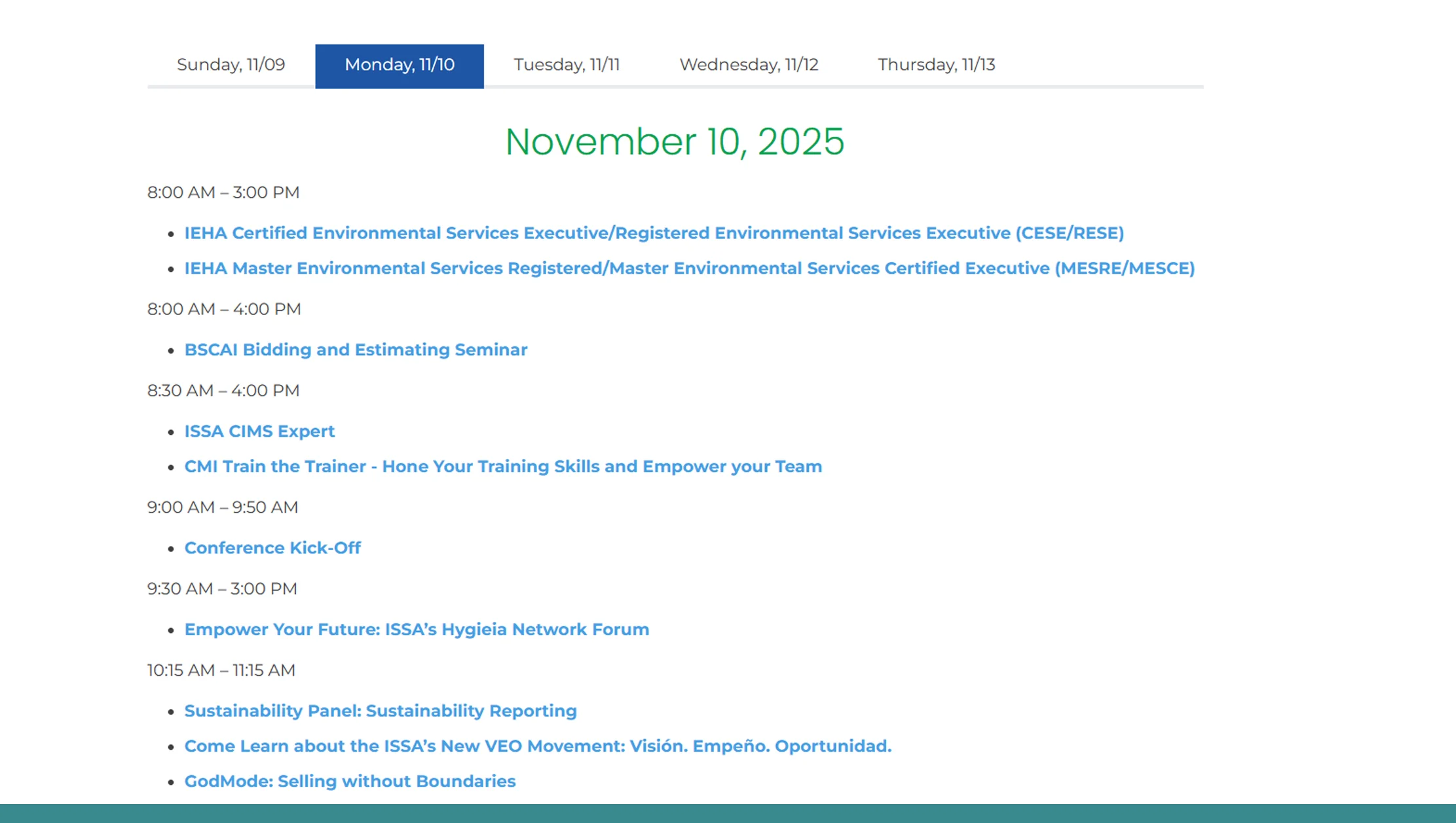

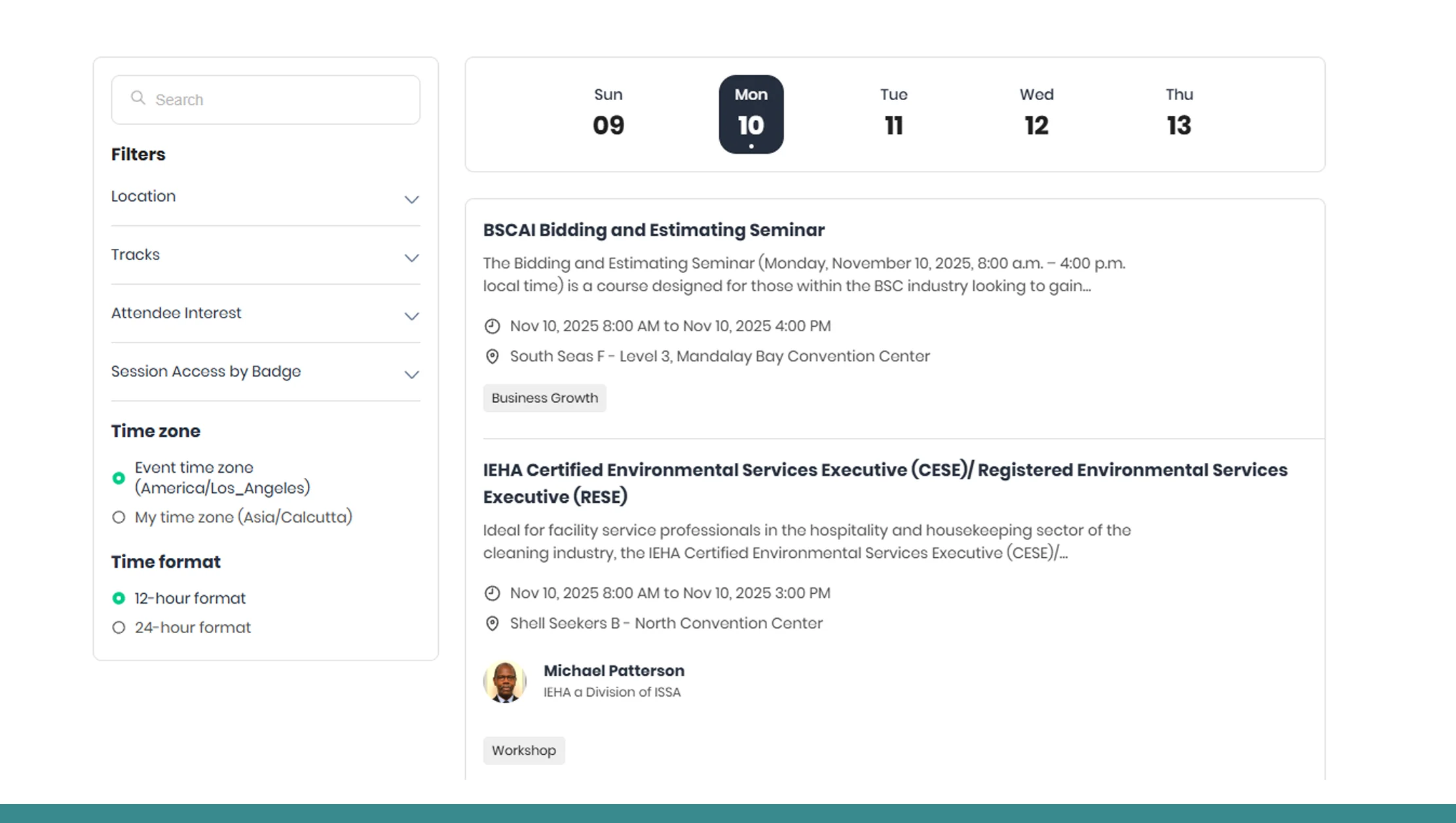

| Sessions | Title, date, time, track, keywords | Content planning, trend monitoring |

| Floor Map | Booth coordinates, exhibitor adjacency | Competitor proximity mapping |

| Press Releases | Launch news, new products | Competitive monitoring |

All this data, when structured and analyzed, provides 360-degree visibility of the event landscape.

Sources for Data Collection

To perform Data Collection from ISSA Show North America, you can target the following data sources:

1. Official ISSA Show Website

- The main exhibitor list and event schedule are often available in structured formats.

2. Event Subdomain (MapYourShow or ExpoCad)

- Many trade events use sub-platforms that store exhibitor and product information in JSON or XML format.

3. Vendor Websites

- Linked exhibitor pages contain richer data like product catalogs and media kits.

4. Industry Publications and Blogs

- Sources like Cleaning & Maintenance Management often feature post-event data summaries.

5. Social Media Channels

- Event hashtags (#ISSAShowNA, #CleaningIndustry, etc.) reveal brand buzz, popular booths, and attendee feedback.

6. Press Releases and Newsrooms

- Great for identifying announcements, partnerships, and product launches.

Legal and Ethical Guidelines for Data Collection

Before starting Web Scraping ISSA Show North America, always ensure compliance during Enterprise Web Crawling and ethical data usage:

- Follow the Robots.txt file on the ISSA Show site to understand crawl permissions.

- Avoid scraping private or personal data like personal emails of attendees.

- Respect intellectual property rights for logos, images, and content.

- Throttle your scraper (set delays, avoid flooding servers).

- Use data for analysis and insights, not unsolicited marketing without consent.

Remember, ethical scraping not only protects your company but also builds long-term credibility.

Web Scraping ISSA Show North America: Step-by-Step Process

Step 1: Identify the Target URLs

Start by mapping URLs such as:

- https://www.issashow.com/exhibitors

- https://www.issashow.com/products

- https://www.issashow.com/sessions

Step 2: Analyze the Web Structure

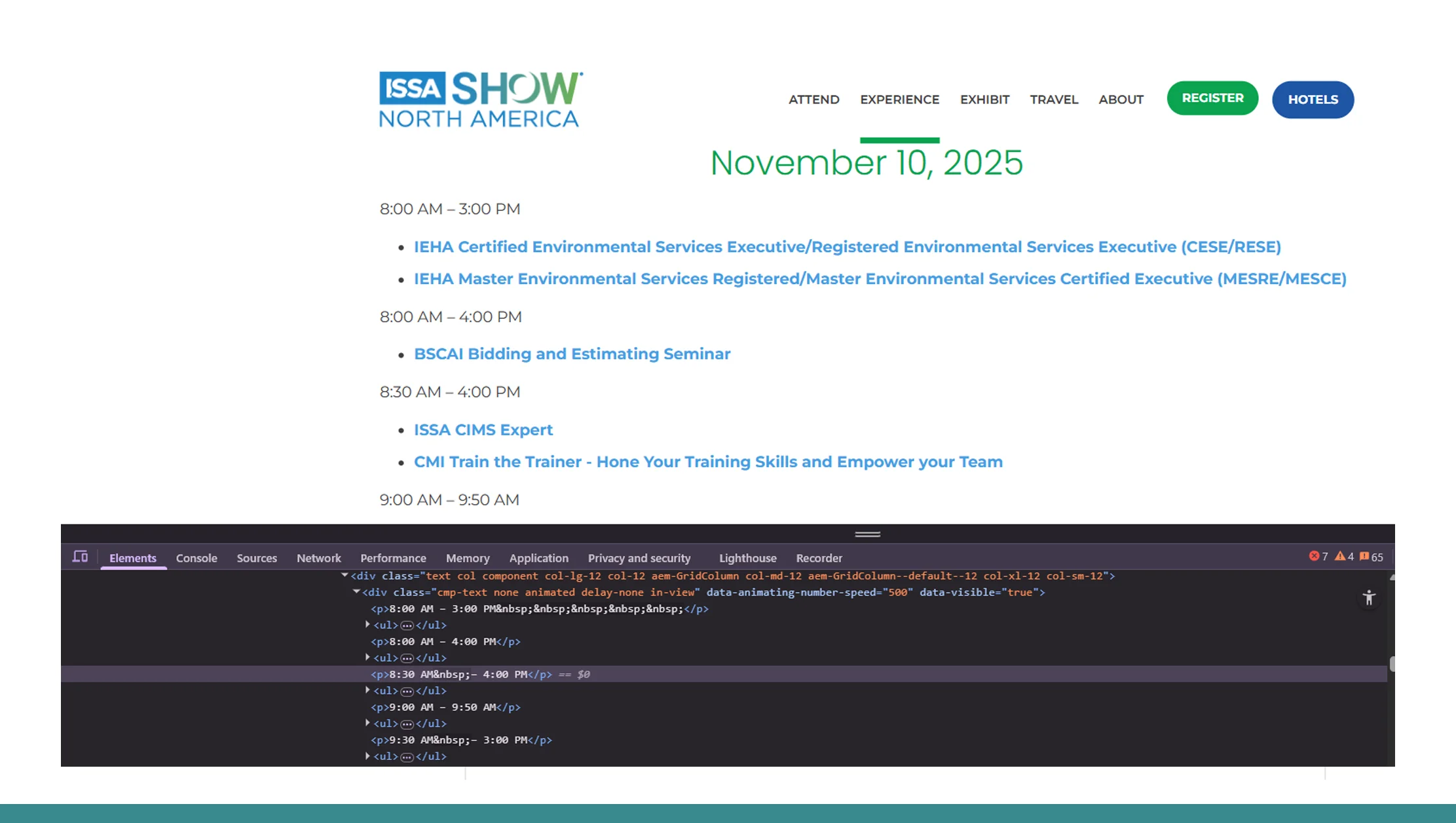

Use browser tools (Inspect → Network Tab) to find JSON endpoints or hidden APIs that serve structured data behind the exhibitor directory or schedule.

Step 3: Choose Your Scraping Stack

For static pages: → Use Python (Requests, BeautifulSoup) or Node.js (Axios, Cheerio).

For dynamic content: → Use Playwright or Puppeteer for automated browsing and JavaScript rendering.

Step 4: Extract and Parse Data

Once the pages are loaded, extract:

- Text fields (company names, products)

- Links (contact pages, product pages)

- Media assets (logos, brochures)

- JSON values (structured exhibitor data)

Step 5: Clean and Normalize Data

Use Pandas or Power BI to:

- Remove duplicates

- Standardize company names

- Validate URLs and emails

Step 6: Store and Use Data

Load cleaned data into:

- SQL/NoSQL Databases (for structured analysis)

- CSV/JSON (for exporting to BI tools)

- CRM Systems (for lead enrichment)

Example Scraper Logic (Conceptual Flow)

1. Request exhibitor list from https://issashow.com/api/exhibitors?page=1

2. For each exhibitor:

- Collect name, booth_number, category, website, description

- Visit exhibitor detail endpoint for extended data

3. Scrape product listings & session schedules

4. Extract speaker bios & company details

5. Save data to a local JSON/CSV file

6. Clean and deduplicate company names and product entries

7. Push cleaned dataset into a BI dashboard or CRMData Cleaning and Enrichment

After scraping, focus on data quality. Cleaned data has higher value and usability.

Key Enrichment Steps:

- Validate company URLs and remove dead links.

- Normalize location data using Google Geocoding APIs.

- Categorize products using NLP-based keyword tagging.

- Add firmographics (industry, company size, HQ location) using enrichment APIs.

- Generate insights such as product trends, exhibitor density, or keyword frequency.

Practical Applications of ISSA Show Data

Once collected and structured, ISSA Show data can serve numerous business objectives.

a. Competitive Intelligence

Monitor your competitors' new launches, booth sizes, and marketing language.

b. Partner Discovery

Identify complementary businesses or distributors within your target vertical.

c. Market Segmentation

Classify exhibitors by region, size, or category to refine go-to-market strategies.

d. Trend Analysis

Track how focus areas shift yearly (e.g., sustainability, automation, or chemical innovation).

e. Content Strategy

Find high-interest topics and speakers to inspire blog content, newsletters, or LinkedIn campaigns.

f. Lead Enrichment

Feed exhibitor data into CRMs like HubSpot or Salesforce to target potential clients.

Automation and Scalability

Manual scraping is fine for one-time projects, but large-scale data collection needs automation.

Use:

- Scrapy or Playwright for scheduled scraping jobs

- Airflow for orchestrating daily pipelines

- Proxies to avoid IP blocks

- Cloud-based data storage (AWS S3, BigQuery, or Snowflake) for long-term scalability

By automating, you can keep your ISSA dataset updated daily during event season.

Challenges in Web Scraping ISSA Show North America

| Challenge | Solution |

|---|---|

| Dynamic JavaScript rendering | Use headless browsers like Playwright |

| Rate limiting / IP blocking | Use proxy rotation |

| Changing website structure | Add automated selector validation |

| Data duplication | Implement fuzzy matching during cleanup |

| Legal/ethical boundaries | Follow scraping best practices and TOS |

Example Data Fields Schema

| Field Name | Description |

|---|---|

| company_name | Exhibitor company name |

| booth_number | Assigned booth or stall number |

| category | Type of products/services |

| contact_email | Official company contact |

| website_url | Company or exhibitor site |

| product_name | Displayed product name |

| product_description | Product summary |

| session_title | Education or seminar session name |

| speaker_name | Speaker or panelist |

| date_scraped | Timestamp for record tracking |

Having such a schema ensures data consistency and allows easy integration with Web Scraping Services and analytics tools.

Benefits of Using APIs for ISSA Show Data Collection

While web scraping is powerful, combining it with Real Data APIs Live Crawler Services provides additional reliability and structure. APIs can help:

- Retrieve clean JSON-formatted data directly

- Avoid website structure changes

- Reduce server load and scraping errors

By integrating scraping with API solutions like Real Data API, you can:

- Automate periodic data collection

- Fetch exhibitor updates, product lists, or session data in real time

- Combine ISSA data with other industry datasets (from similar expos or online marketplaces)

Data Collection from ISSA Show North America with Real Data API

Real Data API is a professional-grade data extraction platform designed to simplify large-scale, dynamic data collection from event sites like the ISSA Show.

It provides:

- Custom endpoints for exhibitor and product data

- High-speed extraction with anti-blocking protection

- Real-time updates when exhibitors or schedules change

- Data delivery in structured JSON, CSV, or API formats

Using Real Data API, you can integrate ISSA Show North America data directly into your CRM, BI tools, or analytics dashboards — eliminating the complexity of manual scraping or maintenance.

Conclusion

The ISSA Show North America is more than a trade fair — it's a rich ecosystem of insights waiting to be unlocked. With proper data collection strategies, you can turn exhibitor listings, product details, and session schedules into business intelligence that fuels decision-making, marketing, and innovation.

By leveraging Web Scraping ISSA Show North America and tools like Real Data API, businesses can:

- Collect reliable, structured, and compliant data

- Automate scraping without coding headaches

- Enrich datasets for CRM and analytics use

- Monitor real-time market trends and competitor activity

Whether your goal is Scraping ISSA Show Data, Data Collection from ISSA Show NA, or scaling across other global expos — Real Data API delivers the scalability, precision, and compliance that modern data teams need.